Harnessing AI to Accelerate Innovation in the Biopharmaceutical Industry

AI has the potential to transform drug development by enhancing productivity across the entire development pipeline, boosting biopharmaceutical innovation, accelerating the delivery of new therapies, and fostering competition to help improve public health outcomes.

KEY TAKEAWAYS

Key Takeaways

Contents

The Drug Development Process 3

The Role of AI in Drug Development 7

Challenges for AI Adoption. 19

Introduction

Drug development is a lengthy, complex, and costly process marked by extensive clinical trials, substantial risks, and stringent regulations. Artificial intelligence (AI) has the potential to transform the entire drug development process—from accelerating drug discovery and optimizing clinical trials to improving manufacturing and supply chain logistics.

An important challenge for innovation policy is to ensure that spending on medicines drives the greatest return to society. Policies that impose price controls or weaken intellectual property (IP) protections may aim to lower drug prices but are known to dampen incentives for future drug research and development (R&D) and fail to improve R&D productivity. A more sustainable approach is to pursue policies that maximize the value of R&D investment. Enhancing R&D productivity, especially in light of declining returns and rising risks that increase the cost of capital—a major factor in drug development costs—offers a more effective path to maximize public health returns, promote equitable access, and drive economic growth.

Technological advances hold promise for improving R&D productivity, accelerating access to novel therapies, and promoting health equity and competitiveness, ultimately delivering greater value for resources invested in medicines without stifling innovation. AI applications across various phases of drug development could enhance R&D productivity, increase drug output, and foster competition, driving innovation and expanding access to novel therapies to improve societal welfare. This increased drug supply can, in turn, drive consumer benefits through market competition. Furthermore, AI could accelerate the discovery of new therapeutic targets and drug candidates for the thousands of diseases that still lack treatment options.[1]

This report explores the potentially transformative role of AI across various phases of drug development, presenting early evidence highlighting how AI can enhance drug discovery, diversify clinical trials, and optimize drug manufacturing processes, ultimately leading to more efficient development with shorter timelines and improved outcomes. Additionally, the report identifies key challenges to broader AI adoption in drug development and offers several policy recommendations to support effective AI adoption, aimed at fostering innovation while ensuring patient safety.

The Drug Development Process

The drug development process is lengthy, risky, and costly.[2] While estimates vary widely across therapeutic areas, recent figures suggest that the R&D cost of bringing a new drug to market could be up to $2.83 billion (uncapitalized), factoring in pre- and post-approval R&D, such as new indications, patient populations, and dosage forms—or, capitalized at an annual rate of 10.5 percent, up to $4.04 billion.[3] According to a 2021 Biotechnology Innovation Organization (BIO) report, it takes more than a decade for a drug to reach the market, and only 7.9 percent of Phase I clinical trial candidates ultimately receive approval.[4]

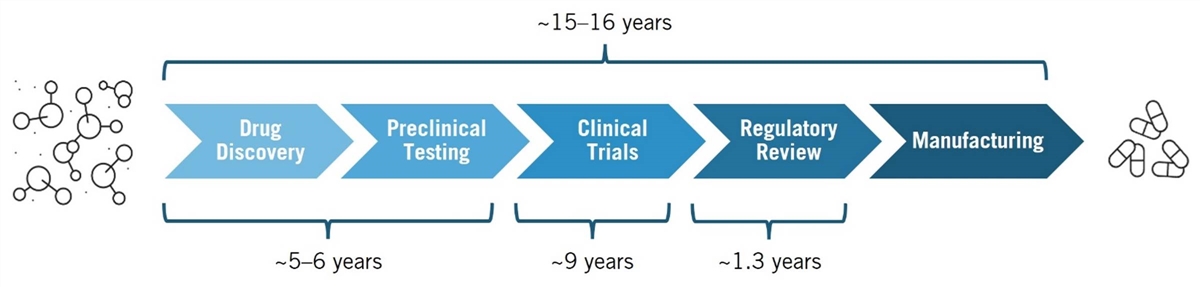

Drug development is a complex, multiphase process that transforms scientific discoveries into safe, effective therapies. Key phases include drug discovery, preclinical testing, clinical trials, regulatory review, and manufacturing. (See figure 1.) In the drug discovery phase, scientists search for and identify therapeutic targets—such as proteins, including receptors and enzymes—involved in a disease. They then screen thousands of potential therapeutic compounds to find those capable of modulating the target’s activity. Promising compounds are refined and advanced to the preclinical testing phase, where lab and animal studies assess safety, efficacy, and pharmacokinetics/dynamics.[5]

The discovery and preclinical phases together typically take about five to six years.[6] After preclinical testing, these candidates move on to human clinical trials, which average 9.1 years.[7] Drugs that successfully complete clinical trials are submitted to regulatory authorities, such as the Food and Drug Administration (FDA), for approval based on evidence from both preclinical and clinical studies.[8] A recent report finds that, on average, it takes 10.5 years for a successful drug candidate to progress from Phase I clinical trials to regulatory approval, following years of early research to discover a therapeutic target and design a drug to effectively modulate it.[9]

Figure 1: The drug development process[10]

A prominent method in modern drug discovery is the target-based approach, which involves designing drugs that interact with specific therapeutic targets implicated in a disease. This method relies on a deep understanding of a target’s role in the disease to develop effective therapies. The process begins with identifying a target based on the hypothesis that modifying its activity will impact disease progression. Target identification involves analyzing extensive genomic, proteomic, and other biological data to pinpoint key molecules involved in disease pathways. Target identification is followed by validation through in vivo and ex vivo models. During this discovery phase, techniques such as high-throughput screening, bioinformatics, and experimental validation are employed to identify and confirm these targets. The process is laborious and complex, requiring significant time and effort. Once validated, drug candidates proceed to clinical trials to evaluate safety and efficacy in humans.[11]

A prominent method in modern drug discovery is the target-based approach, which involves designing drugs that interact with specific therapeutic targets implicated in a disease. This method relies on a deep understanding of a target’s role in the disease to develop effective therapies. The process begins with identifying a target based on the hypothesis that modifying its activity will impact disease progression. Target identification involves analyzing extensive genomic, proteomic, and other biological data to pinpoint key molecules involved in disease pathways. Target identification is followed by validation through in vivo and ex vivo models. During this discovery phase, techniques such as high-throughput screening, bioinformatics, and experimental validation are employed to identify and confirm these targets. The process is laborious and complex, requiring significant time and effort. Once validated, drug candidates proceed to clinical trials to evaluate safety and efficacy in humans.[11]

Drug development is a complex, multi-phase process that transforms scientific discoveries into safe, effective therapies. Key phases include drug discovery, preclinical testing, clinical trials, regulatory review, and manufacturing.

In practice, drug discovery often integrates target-based and phenotypic approaches. In phenotypic drug discovery, compounds are screened for their effects on disease-relevant traits (phenotypes) without prior knowledge of the exact molecular target or underlying mechanism. This method is particularly useful for complex conditions such as cancer and cardiovascular and immune diseases, which involve multiple genes and pathways. By combining these approaches, researchers can leverage their respective strengths to enhance the likelihood of developing effective therapies.[12]

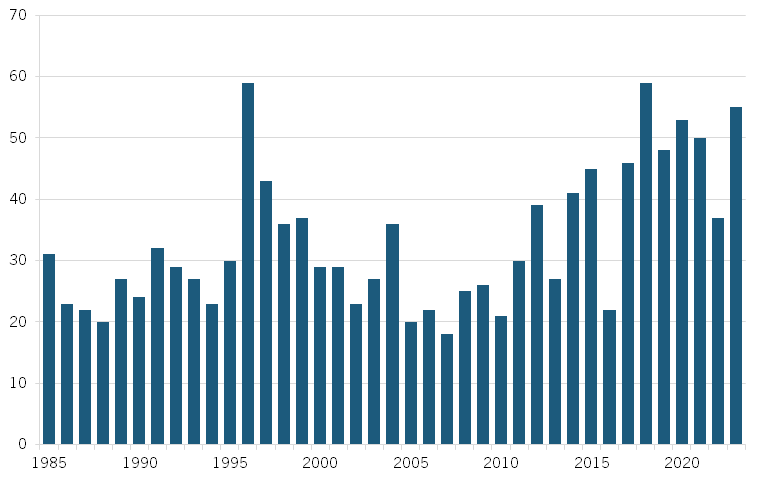

In recent decades, advances in fields such as pharmacology, synthetic biology, and biotechnology—coupled with breakthroughs such as the decoding of the human genome in 2003 and the advent of next-generation sequencing techniques in the mid-2000s—have significantly expanded scientific understanding of health and disease. Over the past decades, biopharmaceutical R&D investments in the United States surged from $2 billion in 1980 to $96 billion in 2023.[13] However, despite the significant increase in resources, several indicators suggest that biopharmaceutical innovation is slowing (or plateauing).[14] Notably, there has as yet been no significant corresponding increase in the number of new drug launches, clinical trial success rates have decreased, and the overall length of drug development has increased. (See figure 2.)[15]

Moreover, over time, it has become more expensive to develop new drugs. A Deloitte report notes, “The average cost to develop an asset, including the cost of failure, has increased in six out of eight years.”[16] The 2019 version of the report concludes that the average cost of bringing a new biopharmaceutical drug to market has increased by 67 percent since 2010 alone.[17] At the same time, Deloitte found that forecast peak sales per asset have already more than halved since 2010. And significantly, the biopharma industry has experienced a downward trend in returns to pharmaceutical R&D: Deloitte found that the rate of return to R&D in 12 large-cap pharmaceutical companies declined from 10.1 percent in 2010 to 4.2 percent in 2015 and then to 1.8 percent in 2019.[18]

Figure 2: FDA new drug approvals, 1985–2023[19]

Several factors have been proposed to explain the decline in R&D productivity and the growing complexity of biopharmaceutical innovation.[20] These include shifts in the nature of science and technology, more stringent regulatory requirements, and a shift in R&D investments toward novel, high-risk, high-value targets that carry more uncertainty and difficulty.[21] Further, advancements in science, particularly in genetics, molecular biology, and systems biology, have revealed that many diseases are highly complex, resulting from a combination of genetic, environmental, and lifestyle factors. Such complexity often requires sophisticated treatments, such as targeted therapies or precision medicine, which require additional research and advanced technologies (e.g., gene editing, biomarker identification, etc.), adding time and cost to drug development.

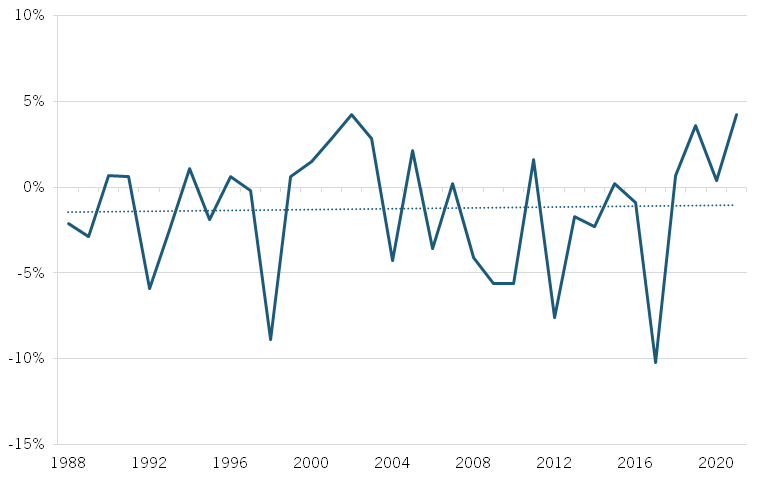

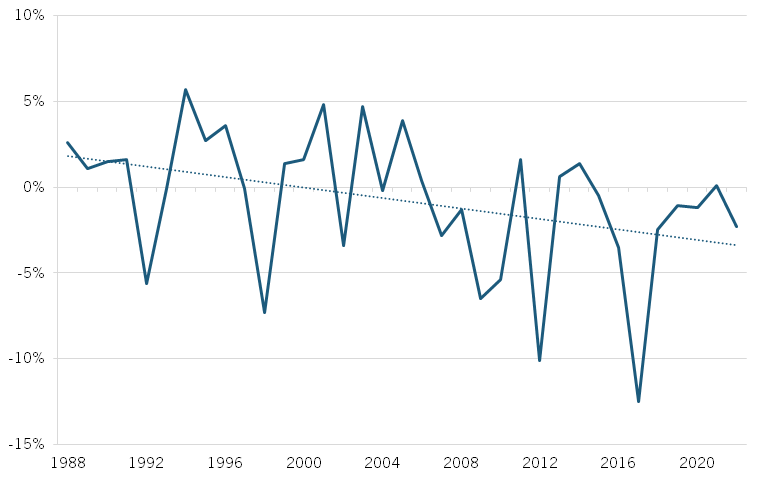

Beyond drug development—the process of discovering, designing, and testing new drugs— biopharmaceutical manufacturing, which involves producing drugs at scale, has also seen declining productivity. Total factor productivity (TFP), which measures the efficiency of all inputs (labor, capital, and materials) in production, decreased by nearly 2 percent annually from 2010 to 2018, indicating reduced output capacity. (See figure 3.) While TFP has since improved, growing at an average annual rate of 2 percent, labor productivity—measured by output per hour worked—has declined by more than 3 percent per year, pointing to an ongoing challenge. (See figure 4.) This decline could stem from factors such as stricter regulatory demands, a need for more specialized handling and monitoring, or greater manufacturing complexity, which may be reducing labor input efficiency, even if overall TFP has improved. These factors are likely linked to the industry’s growing pursuit of more advanced treatments, such as cell and gene therapies, which require more intricate handling and resource-intensive manufacturing processes.

Figure 3: Annual change in biopharmaceutical manufacturing TFP, 1988–2021[22]

Figure 4: Annual change in biopharmaceutical manufacturing labor productivity, 1988–2021[23]

The Role of AI in Drug Development

Amid declining productivity in drug development, emerging technologies offer the potential to boost biopharmaceutical productivity. Advancements such as AI, quantum computing, CRISPR (clustered regularly interspaced short palindromic repeats), 3D bioprinting, organ-on-a-chip, and nanotechnology could significantly reduce the time and cost of bringing new therapies to market, making life-saving therapies more widely accessible to patients. Next-generation sequencing has enabled the production of large biomedical datasets, particularly genomics (the study of an organism’s genes) and transcriptomics (the study of RNA transcripts), among others.[24] Along with data from experimental research and pharmaceutical studies, these vast datasets provide fertile ground for AI, which can analyze these datasets to uncover patterns and make predictions that serve as valuable inputs across different phases of drug development—from target identification and clinical trials to regulatory processes, manufacturing, and supply chain optimization.

Quantum computing, while still in the early stages, can complement AI by solving problems beyond the capabilities of classical computers—such as molecular simulations and protein folding—both critical for modeling biological systems and identifying new drug candidates. Together, these technologies can accelerate biopharmaceutical innovation, offering more efficient pathways to novel therapies.[25]

In Prediction Machines: The Simple Economics of Artificial Intelligence, Ajay Agrawal, Joshua Gans, and Avi Goldfarb described AI as a tool that transforms data into predictions, offering valuable insights to inform decision-making. In the biopharmaceutical industry, AI can analyze vast biological datasets to predict which therapeutic targets are linked to diseases, identify promising drug candidates, and forecast drug responses.[26] Using advanced machine learning techniques, particularly deep learning based on neural networks trained on large datasets, AI excels at recognizing patterns and generating predictions. Importantly, AI complements rather than replaces human scientists. A key AI limitation lies in performing advanced causal inference —an essential task that requires human judgment. While AI is adept at identifying correlations, such as linking a target to a disease, it struggles to grasp the complex underlying biological mechanisms that explain why these links exist—a crucial aspect of effective drug development.

The vast amount of data provides fertile ground for AI, which can analyze datasets to uncover patterns that support each phase of drug development, from target identification and clinical trials to regulatory processes, manufacturing, and supply chain optimization.

Still, AI provides a powerful tool that can significantly advance different phases of drug development, from discovery and preclinical testing to clinical trials, regulatory review, and manufacturing. In drug discovery, AI accelerates the analysis of large datasets to identify promising compounds, reducing the time and cost of finding new drug candidates. During preclinical testing, AI models simulate biological processes to predict how a drug will behave in humans, reducing reliance on animal testing. In clinical trials, AI can optimize patient selection, improve trial design, and speed up data analysis, leading to faster, more accurate outcomes. It can also streamline the regulatory process by assisting in the preparation of complex documentation required by agencies such as America’s FDA and Europe’s European Medicines Agency (EMA). Finally, in manufacturing, AI can enhance production processes, improving efficiency and ensuring consistent quality. By integrating AI throughout these stages, the process of bringing a new drug to market can become more efficient, possibly bringing much-needed therapies to market quicker.

Facilitating Drug Discovery

AI is often hailed as the future of drug discovery, with the ability to reduce the time and cost of identifying therapeutic targets and promising drug compounds, optimize drug chemical structures for greater efficacy, and enhance the molecular diversity of potential targets.[27] Traditional experimental methods for target discovery can be slow and limited in scope, but AI offers a more efficient approach by predicting a set of targets from a large dataset based on properties that suggest their involvement in disease.[28] Scientists can then validate these predictions and develop therapeutic hypotheses about why those targets are linked to disease in order to develop safe and effective therapies.[29]

Illustrative Example: Identifying Therapeutic Targets in Lung Cancer

AI is transforming drug discovery by helping scientists identify potential therapeutic targets in cancer by analyzing vast genomic and clinical data.

Goal: Suppose a lung cancer research team aims to identify new therapeutic targets to guide drug development. The team plans to use AI to predict genes associated with lung cancer that could serve as therapeutic targets. If a gene consistently shows higher expression levels in cancer patients, it could represent a promising target for developing a novel drug.

Data: A dataset of 10,000 individuals, including 5,000 with lung cancer (labeled as 1) and 5,000 without (labeled as 0). Contains data on each individual’s gene expression levels for 20,000 genes, obtained through whole-genome sequencing.

The Role of Human Scientists: Before training the AI model, researchers apply their deep knowledge of biological processes and causal inference to narrow the list of genes from 20,000 to a more relevant subset, say 500 genes. This selection process often involves formulating hypotheses regarding the genes’ causal role in cancer development, employing causal inference methods and reviewing existing scientific literature to identify genes linked to lung cancer progression, drug resistance, and treatment outcomes. By focusing on biologically relevant genes, scientists enhance the likelihood of identifying meaningful drug targets while managing the complexities of high-dimensional genomic data. This approach improves the predictive power of AI models and ensures that the selected genes have a solid biological foundation.

The Role of AI: The AI model employs predictive modeling through statistical models—such as logistic regression, probabilistic regression, bagging (e.g., random forest), boosting, and others— which predict whether a patient has lung cancer based on gene expression levels. The models can identify patterns in the data, assign weights to each gene, and test the accuracy of its predictions on partitions of the dataset.[30]

How It Works: Training the AI model involves analyzing gene expression levels for the subset of 500 genes across the 10,000 individuals and looking for correlations with lung cancer status. AI models assign a weight to each gene, indicating the extent to which each gene contributes to predicting the likelihood of lung cancer. Genes with large positive weights—or higher importance—are flagged as potential drug development targets.

In July 2021, Google DeepMind’s AlphaFold2 system solved a pivotal aspect of the long-standing “protein-folding problem,” a 50-year-old biology challenge. By predicting the 3D structures of nearly all known proteins from their amino acid sequences, AlphaFold2 has transformed a critical field of biological research. The system, using a vast database of known protein structures, reduced the time needed to predict protein structure from months to minutes. In collaboration with the European Molecular Biology Laboratory (EMBL), Google DeepMind made this data freely available to the scientific community.[31] This breakthrough has profound implications for understanding protein function, which is key for designing more effective drugs.[32] This achievement earned Demis Hassabis and John M. Jumper of Google DeepMind the 2024 Nobel Prize in Chemistry “for protein structure prediction.” The prize was also shared with David Baker “for computational protein design.”[33]

AI is often hailed as the future of drug discovery, with the ability to reduce the time and cost of identifying therapeutic targets and promising drug compounds, optimize drug chemical structures for greater efficacy, and enhance the molecular diversity of potential targets.

Additionally, AI can assist with bioactivity prediction—evaluating how effectively a drug interacts with its intended target—by identifying promising compounds from large candidate pools, thereby minimizing the need for costly, time-consuming experiments. In 2024, a research team developed ActFound, an AI model that employs pairwise and meta-learning techniques, trained on millions of data points from ChEMBL, a public bioactivity database maintained by EMBL’s European Bioinformatics Institute (EMBL-EBI). ActFound has been shown to be more accurate and less costly than traditional computational methods.[34]

Genentech’s Lab-in-the-Loop

Genentech, a biotechnology pioneer founded in 1976 and now part of the Roche Group, is dedicated to combating serious, life-threatening diseases. Its notable achievements include the development of the first targeted antibody for cancer and the first drug for primary progressive multiple sclerosis.

In 2023, Genentech partnered with NVIDIA to launch an AI platform named “lab-in-the-loop,” designed to accelerate the use of generative AI in drug discovery and development. Created by Genentech’s Prescient Design accelerator unit, the platform utilizes Genentech’s granular data to train algorithms for designing new drug compounds. These compounds are tested in the lab, and the resulting data is fed back to refine the AI algorithms. This interdisciplinary approach creates a continuous loop between wet lab work and computational methods, evolving experimental and clinical data into predictive models for potential drug candidates, thus accelerating the development of life-saving therapies.[35]

Dr. Aviv Regev, executive vice president and head of Genentech Research and Early Development, described the lab-in-the-loop approach as:

the mechanism by which you bring generative AI to drug discovery and development. When we try to discover drugs, we’re only as good as our data. We take the data and we use it to train algorithms; use these algorithms that we’ve trained to generate new kinds of molecules that we haven’t tested before, which we will take back to the lab, and generate experimental data for them again. And those test results will be sent to the AI, to improve itself, to get a better algorithm, and we repeat this process again and again and again until we reach a molecule that has all the right properties that we need for it to be a real medicine for patients.[36]

In January 2024, Genentech began recruiting patients for a clinical trial to test the effectiveness of its experimental drug vixarelimab in treating ulcerative colitis, an inflammatory bowel disease. The drug had previously been tested only in lung and skin disorders. Traditionally, determining a drug’s potential for different indications can take years of laboratory work, but Genentech’s AI platform expedited this process, helping scientists determine in just nine months that vixarelimab could be a promising drug candidate for treating diseases affecting colon cells.

Genentech leverages AI in drug discovery to deepen scientists’ understanding of health and disease, using these insights to develop more effective therapies. By integrating knowledge from fields such as biology, computation, genomics, and machine learning, Genentech aims to drive biopharmaceutical innovation.

While AI is still in its early stages, several indicators suggest that it is already streamlining drug discovery. A study by the Boston Consulting Group (BCG) examines the research pipelines of 20 AI-focused pharmaceutical companies and finds that 5 out of 15 AI-assisted drug candidates that advanced to clinical trials did so in under four years, compared with the historical average of five to six years.[37] A 2023 report by BCG and the Wellcome Trust projects that AI-enabled efforts could reduce the time and cost of the drug discovery and preclinical stages by 25 to 50 percent.[38] And a 2019 report by the U.S. Government Accountability Office and the National Academy of Medicine notes that one company estimated AI-accelerated drug discovery could save between $300 million and $400 million per drug—stemming from improved R&D productivity, as AI increases the efficiency of capital investment, enabling better drugs to be identified earlier and more quickly.[39]

AI can significantly advance the main phases of drug development—from discovery and preclinical testing to clinical trials, regulatory review, and manufacturing.

Several AI-enabled drug discovery companies have begun reporting significantly accelerated drug discovery timelines. In September 2019, Canadian biotechnology firm Deep Genomics announced its first AI-discovered therapeutic drug candidate aimed at treating Wilson’s disease, a genetic disorder that leads to excess copper in the blood, causing liver and neurological issues.[40] This drug candidate was proposed just 18 months after target discovery efforts began, with the company’s AI platform analyzing over 2,400 diseases and 100,000 pathogenic mutations.[41] In January 2020, British pharmaceutical company Exscientia, in collaboration with Japan’s Sumitomo Dainippon Pharma, reported that its AI-developed compound for obsessive-compulsive disorder (OCD) had reached clinical trials in just 12 months, compared with the typical five- to six-year timeline. This compound was also reportedly the first AI-designed drug to enter clinical trials.[42] In June 2023, Hong Kong- and New York-based Insilico Medicine announced that it had advanced its AI-developed drug for idiopathic pulmonary fibrosis, a chronic lung disease, to clinical trials in under 30 months.[43] While this early evidence suggests that AI has the potential to significantly reduce the speed of drug discovery, it will take longer to determine how AI-developed drugs perform in clinical trials compared with their non-AI counterparts.[44]

The BCG study reveals that AI-enabled drug discovery companies tend to concentrate their pipelines on well-established therapeutic target classes.[45] Over 60 percent of their disclosed targets come from familiar classes, such as enzymes (e.g., kinases) and G-protein-coupled receptors. This focus on well-known targets likely reflects a strategy to mitigate the risks associated with drug development and to demonstrate the viability of their AI platforms. In contrast, large pharmaceutical companies typically maintain more diverse pipelines, balancing both new and established targets.[46]

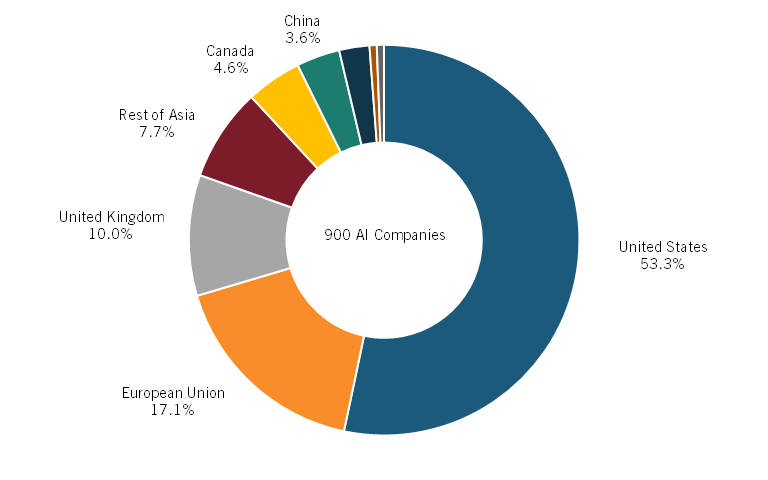

Moreover, a recent report from Deep Pharma Intelligence shows that in the geographical landscape of AI adoption in drug discovery, the United States is a global leader. As of 2023, more than 50 percent of all AI-enabled drug discovery biotechnology companies were based in the United States, followed by 17 percent in Europe and close to 4 percent in China. (See figure 5.)[47]

Figure 5: Share of companies using AI for drug discovery, 2023[48]

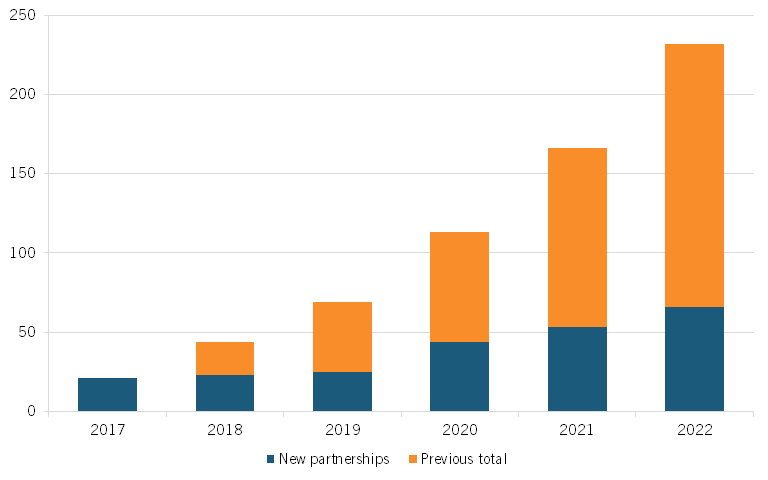

Moreover, the number of partnerships between large pharmaceutical companies and AI companies has surged, from 21 such new partnerships in 2017 to 66 in 2022, a more than threefold increase. (See figure 6, reproduced from the report.)[49] According to a recent study by S&P Global Ratings, examples of such partnerships in drug discovery include AstraZeneca and BenevolentAI, which have partnered for target identification optimization; GSK and Insilico Medicine, which are working together on the identification of novel biological targets and pathways; and Pfizer and Genetic Leap, which are pursuing the development of RNA genetic drug candidates.[50]

Figure 6: Number of AI-focused partnerships for large pharma companies, 2017–2022[51]

Streamlining Clinical Trials

Clinical trials, used to assess the safety and efficacy of proposed new therapies, represent a crucial step in the drug development process. However, conducting them is expensive, time consuming, and risky.[52] As of 2020, the global clinical trial market was valued at $44.3 billion.[53] One study finds that the average clinical development time for a typical drug—from first-in-human trials to regulatory approval—is 9.1 years, though this can vary by therapeutic area, indication, trial design, and patient availability.[54] A recent BIO report details that, on average, Phase I trials take 2.3 years, Phase II 3.6 years, Phase III 3.3 years, and regulatory review 1.3 years.[55] Furthermore, fewer than 8 percent of drug candidates that enter Phase I trials succeed.[56] Given these challenges, AI has the potential to streamline and improve clinical trials in several ways, making them faster and more effective and cost-efficient.[57]

Enhancing Patient Eligibility and Recruitment

One of the most time-consuming aspects of clinical trials is identifying and recruiting eligible patients, a process that can take up to one-third of the total trial duration. Moreover, 20 percent of trials fail to recruit the required number of participants. To address this challenge, researchers have explored relaxing overly strict eligibility criteria while maintaining patient safety.[58] Trial Pathfinder, an AI system developed at Stanford University, analyzes completed clinical trials to assess how modifying criteria, such as blood pressure thresholds, impacts adverse events such as serious illness or death among participants.[59] In a study of non-small cell lung cancer trials, Trial Pathfinder showed that loosening certain criteria—some of which, like lab test results, had little impact on the trial’s outcome—could double the number of eligible patients without increasing the risk of adverse events. This approach proved effective for other cancers, including melanoma and follicular lymphoma, and in some cases, even reduced negative outcomes by including sicker patients who stood to benefit more from the treatment in the trial.[60] These findings suggest that relaxing restrictive eligibility criteria can expand patient access to promising new therapies.

After determining eligibility criteria, the next major challenge is recruiting patients, as failure to do so can produce delays and trial terminations.[61] Typically, patient recruitment involves manual prescreening due to the complexity of clinical criteria text, which often includes confusing abbreviations and terminology.[62] Criteria2Query is an AI system that can streamline this process by parsing eligibility criteria from natural language and converting it into structured, searchable data. Researchers can input inclusion and exclusion criteria in plain language that Criteria2Query translates into formal database queries that sift through electronic health records (EHRs) to find matching participants.[63] This system aims to enhance human–AI collaboration, optimizing cohort generation by combining machine efficiency with human expertise to simplify complex concepts and train algorithms. Criteria2Query can accelerate recruitment and help include populations such as children and the elderly, who are often unnecessarily excluded from trials, thereby speeding up and diversifying clinical trials.

Several AI-enabled drug discovery companies have begun reporting significantly accelerated drug discovery timelines.

Beyond systems such as Criteria2Query, which match trials to patients, AI also improves patient-to-trial matching. TrialGPT, for example, helps patients find suitable trials by predicting their eligibility for specific studies. Patients provide a description of their condition in plain language, and TrialGPT generates a score reflecting their fit for a given trial, along with explanations for each eligibility criterion. In testing, has TrialGPT achieved nearly human-level accuracy and reduced screening time for trial matching by over 40 percent, highlighting the potential of AI to enhance clinical trial efficiency and accelerate patient recruitment.[64] In October 2023, the Dana-Farber Cancer Institute, supported by a Meta grant, began developing a novel open source AI platform to “computationally match patients with cancer to clinical trials.”[65] This initiative is leveraging Meta’s large language model, Llama 3, to analyze unstructured clinical notes and trial eligibility criteria, enabling quicker and more accurate matching of patients to suitable clinical trials.[66]

AI can also play a critical role in identifying biomarkers that predict therapy outcomes and disease progression, which is crucial for matching patients to the most effective clinical trials and therapies. A research team led by Dan Theodorescu, director of Cedars-Sinai Cancer in Los Angeles, developed the Molecular Twin Precision Oncology Platform (MT-POP) to discover biomarkers that predict disease progression in pancreatic cancer, one of the most lethal cancers. Their findings revealed that relying solely on CA 19-9—the only FDA-approved biomarker for pancreatic cancer—was suboptimal for predicting therapy outcomes.[67] Instead, a set of multiple biomarkers provided far more accurate predictions. This multi-omics approach demonstrates the potential to optimize patient selection for clinical trials—as well as approved therapies—by identifying those most likely to benefit from specific treatments.[68] Such advancements could help refine trial designs and improve the likelihood of success in developing new therapies for pancreatic ductal adenocarcinoma and other challenging diseases.

Optimizing Clinical Trial Design

AI can also enhance clinical trial design by optimizing aspects such as drug dosages, patient enrollment numbers, and data collection strategies. At the University of Illinois, researchers developed the HINT (Hierarchical Interaction Network) algorithm to predict trial success based on factors such as the drug molecule, target disease, and patient criteria. Trained on pharmacokinetic and historical trial data, HINT has demonstrated high accuracy across different clinical trial phases and diseases.[69] Building on this foundation, the team created SPOT (Sequential Predictive Modeling of Clinical Trial Outcome), which tracks trial progress in real time and refines predictions as more data becomes available, allowing for timely adjustments during the trial to improve outcomes.[70] Moreover, the Illinois-based company Intelligent Medical Objects has developed SEETrials, which utilizes OpenAI’s GPT-4 to extract safety and efficacy data from clinical trial abstracts, helping clinical researchers evaluate different trial designs.[71]

Further, AI-powered clinical trial design start-up QuantHealth has developed Katina, an AI platform that simulates hundreds of thousands of potential trial protocol combinations—such as various patient groups, treatment parameters (e.g., treatment dose, administration route, duration), and trial endpoints (e.g., tumor shrinkage)—to enhance the likelihood of trial success. Trained on extensive biomedical, clinical, and pharmacological data, the AI-guided workflow aims to enhance trial design and execution.[72] In August 2024, QuantHealth reported that Katina had simulated over 100 trials with 85 percent accuracy. It could predict Phase II trial outcomes with 88 percent accuracy, significantly higher than current success rates of 28.9 percent, and Phase III trial outcomes with 83.2 percent accuracy—compared with 57.8 percent. The platform was also able to reduce trial costs and durations. QuantHealth reported that its collaboration with a pharmaceutical company’s respiratory disease team led to a significant $215 million reduction in clinical trial costs, achieved by shortening trial duration by an average of 11 months, requiring 251 fewer trial participants, and using 1.5 fewer full-time employees to conduct the trial.[73]

Streamlining Clinical Trial Protocols

AI can also streamline the analysis of clinical trial protocols (CTPs), which are typically lengthy, complex documents exceeding 200 pages. CTPs cover everything from trial objectives and design to participant eligibility criteria and statistical methods, serving as a blueprint to conduct trials in a structured, compliant way. By using AI to extract valuable insights from unstructured documents, researchers can enhance participant diversity and reduce dropout rates, ultimately accelerating drug development by improving the efficiency of clinical trials.[74]

For example, Genentech employed Snorkel AI to extract key information from over 340,000 CTP eligibility criteria. This effort led to better study designs and more accurate participant inclusion/exclusion criteria. The process helped visualize how different eligibility criteria could impact the demographics of trial participants, allowing for more informed decisions about trials. Snorkel AI identified patterns in clinically relevant characteristics and performed demographic trade-off analyses, enabling Genentech to adjust criteria to enhance trial diversity and success.[75]

Improving diversity in clinical trials is essential for addressing health inequities. According to the FDA, concerningly, 75 percent of clinical trial participants for drugs approved by the FDA in 2020 were white, while only 11 percent were Hispanic, 8 percent were Black, and 6 percent were Asian.[76] This lack of diversity is problematic not only because certain diseases are more prevalent in specific underrepresented groups, but also because different populations may respond differently to therapies. For instance, Albuterol, the most-prescribed bronchodilator inhaler in the world, is less effective for Black children compared with their white counterparts. Since 95 percent of lung disease studies were conducted on individuals of European descent, this genetic difference went undetected for years.[77] Scientists later linked specific genetic variants involved in lung capacity and immune response to the reduced efficacy of Albuterol in Black children.[78] In response to a lack of diversity in clinical trials, the FDA issued draft guidance in 2022 encouraging more inclusive representation.[79] Pharmaceutical companies are increasingly leveraging AI, including tools such as TrialPathfinder and Criteria2Query, to safely recruit more diverse, representative participants, helping to improve trial outcomes for underrepresented populations.[80]

Johnson & Johnson Leveraging AI to Diversify Clinical Trials and Transform Precision Medicine

Data science and AI are transforming how J&J discovers, develops, and delivers new therapies to bring transformative medicines to people around the world. J&J is leveraging AI tools to build and scale capabilities that enhance the design, execution, and diversification of its clinical trials to facilitate drug development. For clinical trial design, J&J has developed an interactive, AI-enabled platform, Clinical Studio, which uses real-world data (RWD)—including EHRs and patient registries—and internal operational data to enable digitization of protocols, providing transparency into clinical cost, protocol complexity, and patient burden and helping researchers develop fit-for-purpose protocols for clinical trials, without impacting the scientific rigor of the study. For example, the platform can be used to define and shape inclusion/exclusion criteria and associated outcomes, which has a direct impact on the cohort of eligible patients, including diversifying the patient pool.

J&J’s internal platform, Trials360.ai, is helping to guide clinical trial site selection as well as engagement and patient recruitment efforts. The tool, coupled with clinical and operational expertise, aims to accelerate clinical development and trial recruitment by meeting patients where they are. With AI algorithms applied to real-world and clinical data, J&J can more accurately predict trial locations with the highest probability of enrolling patients, enabling it to place trial sites in locations where patients are, rather than establishing sites where trials have historically taken place. Findings show that sites ranked in the top 50 percent by its AI models enroll up to three times more patients than do those ranked in the bottom 50 percent. J&J has leveraged AI to review clinical data to identify potential data anomalies and query sites and resolve data discrepancies in an ongoing manner.

Further, across therapeutic areas, J&J is leveraging a data-driven, AI approach to support the advancement of diversity, equity, and inclusion (DEI) in clinical trials—from trial planning to execution—aiming to ensure trial results are generalizable to diverse, real-world populations impacted by the diseases J&J is tackling. In immunology, J&J has set ambitious goals for several trials, and as of late 2023, it had surpassed its year-end diversity goals for those trials, five months ahead of schedule—supported by robust recruitment tactics and AI-supported site selection. In oncology, J&J is leveraging data science, coupled with clinical and operational expertise and community engagement, to help advance DEI in its multiple myeloma (MM) studies through clinical trial site selection and patient recruitment.

A recent meta-analysis of 431 MM studies conducted between 2012 and 2022 across the industry shows that only 4 percent of enrolled patients were Black.[81] Early enrollment data for five ongoing J&J MM trials indicate that a combination of human expertise and AI-driven insights has led twice as many Black patients to consent and enroll in clinical trials in the United States as compared with prior trials.

Optimizing Regulatory Submissions

The regulatory submission phase of clinical trials is both resource intensive and time consuming. Regulatory affairs teams serve as the critical link between pharmaceutical companies and regulatory bodies, working to secure approvals in accordance with current guidelines. These teams compile the necessary data and documents for submission while ensuring that applications meet all regulatory requirements. AI can support this process by reducing the time needed to gather and standardize data, as well as by generating drafts based on templates and guidelines. Furthermore, AI can enhance workflows by providing timely insights, automating tasks such as data monitoring, document management, and adverse event detection as well as ensuring compliance with evolving regulations.[82]

For example, Medidata’s Clinical Data Studio uses AI to monitor clinical trial data in real time, streamlining clinical data management, operations, and safety. The platform automates data reviews, identifies patterns through visualizations, monitors trial site performance and compliance, and mitigates data quality risks by detecting anomalies such as inconsistencies or outliers in patient data. This proactive approach enables clinical researchers to address potential issues early, helping to ensure data integrity throughout the trial and reducing the risk of compromising regulatory compliance. Ultimately, this helps improve trial efficiency and reliability, expediting the regulatory review process.[83]

Another notable example is Veeva Vault RIM (Regulatory Information Management), an AI-powered platform developed by Veeva Systems. The platform offers a comprehensive suite of services designed to manage submissions, ensure compliance, and streamline regulatory processes across regions. With its compliance tracking features, Veeva Vault RIM continuously monitors and updates evolving regulatory guidelines worldwide, enhancing the efficiency of the regulatory submission process.[84] When new guidelines are issued by regulatory bodies such as the FDA or EMA, the platform alerts clinical trial teams, analyzes the changes, and recommends adjustments to trial procedures or documentation in real time to ensure ongoing compliance. Additionally, the platform automates the regulatory submission process by aggregating data from various sources, formatting it according to the latest guidelines, and generating submission-ready documents, which reduces manual effort and the risk of errors.[85]

Enhancing Manufacturing and Supply Chains

AI can also enhance drug manufacturing and strengthen supply chains by optimizing production processes, reducing downtime through predictive maintenance, improving demand forecasting, and streamlining inventory management, ultimately leading to greater efficiency.

AI in Manufacturing

In addition to its role in drug discovery, clinical trials, and regulatory submissions, AI can enhance manufacturing and help strengthen supply chains. It can optimize drug manufacturing by streamlining production processes, improving quality control, and reducing costs. Predictive analytics can identify efficient production pathways, forecast equipment failures, anticipate necessary maintenance, and improve resource allocation. AI can also support the development of continuous manufacturing systems, enabling more flexible, responsive drug production, and AI-driven demand forecasting can help reduce both stockouts and overproduction.[86]

Asimov—Innovating Gene Therapy Design and Manufacturing With AI

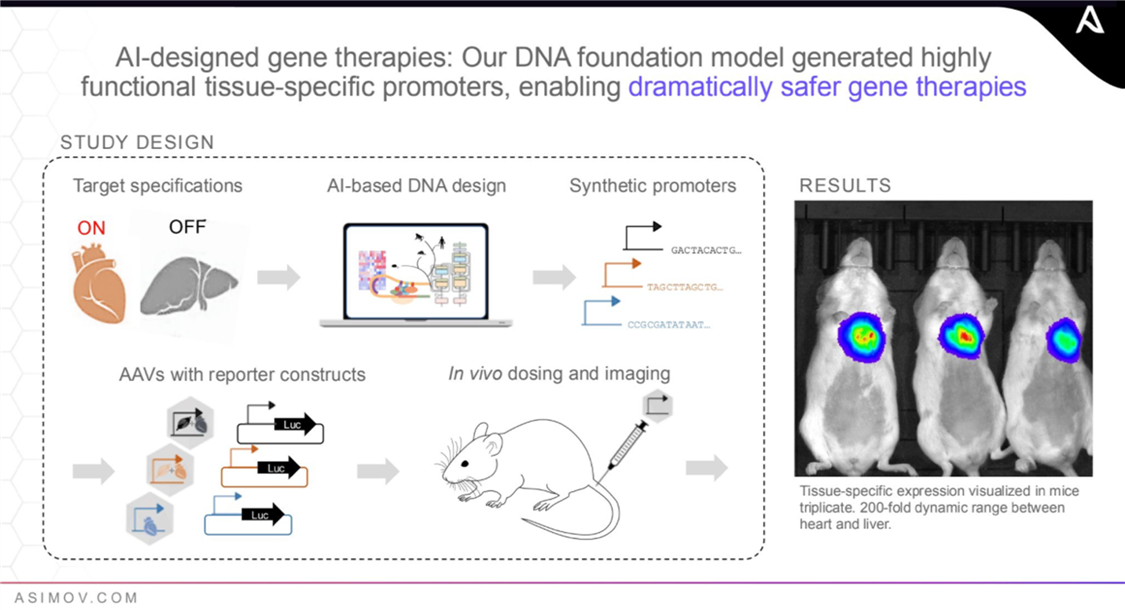

Asimov, a Boston-based pioneering bioengineering company, uses AI and synthetic biology to transform the design and production of therapies. Genetic engineering, the process of modifying DNA, holds immense potential for drug development by targeting diseases at their genetic roots. The process is vital for developing biologics, cell and gene therapies such as CAR-T, and precision medicine to tackle complex conditions such as cancer and inherited genetic disorders. Asimov’s AI-driven molecular simulations and computational biology accelerate the traditionally slow, labor-intensive, and trial-and-error genetic engineering process, making drug development faster, cheaper, and more reliable.

Asimov’s AI-driven bioengineering tools apply to both drug design and manufacturing. In drug design, AI generates novel DNA sequences and simulates complex biological processes, expediting the identification of promising drug candidates. In manufacturing, these tools can increase the precision and efficiency of therapeutics production, supporting the creation of complex biologics such as antibodies and viral vectors. This is critical for improving the scalability, reliability, and cost effectiveness of drug manufacturing, especially for targeted cell and gene therapies that require customized production methods.

Asimov’s AAV Edge platform is an example of the company’s innovation in gene therapy manufacturing. The platform optimizes the production of adeno-associated viruses (AAV), a type of virus used in gene therapies. Its AI-designed tissue-specific promoters restrict gene expression to intended tissues, ensuring, for example, that a therapy targeting heart disease is “on” in heart tissue but “off” in liver tissue to reduce toxicity. This is critical because while gene therapies hold significant potential, a key challenge is that they can cause liver toxicity. AAV Edge helps increase the precision, effectiveness, and safety of gene therapies while reducing side effects.[87]

Currently, Asimov focuses on drug manufacturing, particularly drugs enabled by genetic engineering, including antibodies, protein biologics, cell and gene therapies, and RNA vaccines. By employing AI tools, Asimov’s suite of services seeks to improve drug efficiency and accelerate manufacturing timelines, yielding higher, faster, cheaper, and better therapeutic production. Asimov’s future vision is to extend its AI-driven bioengineering solutions to the entire biotech space, including agriculture and food, industrial biotechnology, and environmental biotechnology, to accelerate innovation in these areas by automating traditionally slow, manual processes, increasing precision, and enabling customized solutions.

AI in Supply Chains

Drug shortages are a growing public health concern, with significant financial impacts on health-care systems. In the United States alone, these shortages cost an estimated $230 million a year due to health-care expenses, productivity losses, and adverse patient outcomes. The root causes of such shortages include supply chain management challenges, inadequate business continuity planning, and market dynamics such as fluctuating demand.[88]

The global pharmaceutical supply chain constitutes an elaborate network of manufacturers, suppliers, and distributors spanning multiple countries, rendering it vulnerable to disruptions from natural disasters, transportation delays, and regulatory hurdles. AI can help mitigate these risks by optimizing supply chain processes.[89] It can analyze transportation costs, lead times, and supplier performance, enabling better route planning, cost saving, and improved delivery times. For example, the United States Pharmacopeia’s (USP’s) Medicine Supply Map employs AI to analyze millions of data points worldwide to predict disruptions, allowing timely interventions to prevent shortages.[90]

Overall, AI can drive improvements in manufacturing efficiency, supply chain management, and the scalability of advanced therapies, contributing to faster, more cost-effective drug production and supply chain resilience.

Merck employs an AI system developed by Aera to optimize its supplier network through data analysis and proactive recommendations. This system enables Merck to anticipate supply chain fluctuations, adjust production schedules, and reroute shipments, thereby enhancing efficiency and improving supply chain resilience.[91]

Overall, AI can drive improvements in manufacturing efficiency, supply chain management, and the scalability of advanced therapies, contributing to faster, more cost-effective drug production and supply chain resilience.

Challenges for AI Adoption

AI adoption in drug development faces several significant challenges. Data access and privacy issues present substantial hurdles, as stakeholders—including health-care providers, pharmaceutical companies, researchers, and federal agencies—must collect and share vast amounts of data to develop effective AI models. Improved coordination, both within and across organizations, is crucial to achieve this goal. Additionally, validating AI algorithms is essential to address concerns about the “black box” nature of how AI systems arrive at decisions and to mitigate possible biases, thereby building trust in these technologies. Integrating AI with existing workflows can also be challenging, especially in the face of unclear regulatory pathways. Misaligned incentives, including fears of job displacement, may further slow adoption.[92] However, with proactive strategies and enhanced collaboration between public and private sectors, these challenges can be effectively addressed, paving the way for the successful integration of AI in drug development.

Data Access, Quality, and Privacy

AI can play a crucial role in supporting drug development, but its effectiveness hinges on the availability and quality of training data. Several types of data are essential for AI applications in this area. First, clinical data, including EHRs, provides information on patient medical histories, diagnoses, lab results, imaging data, and treatment plans. Second, genomic data, obtained through sequencing techniques, helps identify genetic variants and provides insights into gene function and regulation, which are vital for understanding disease mechanisms. Third, pharmaceutical data includes information on drug efficacy, adverse reactions, pharmacokinetics, and pharmacodynamics. Fourth, chemical and structural data is important for identifying and optimizing drug candidates from extensive compound libraries. Finally, data from scientific literature—such as research papers, clinical guidelines, clinical trial outcomes, and patents—helps synthesize existing knowledge and identify gaps for new drug development.[93] Together, these different types of data create a comprehensive picture that enhances the ability to identify promising therapeutic targets and drug candidates, target treatments to individual patients, and streamline the drug development process. Importantly, linking genotypes (genetic information found in genomic records) to phenotypes (observable traits found in clinical records) enables researchers to better understand how genetic variations influence disease risk and treatment responses and disease processes. This connection is particularly important for developing targeted therapies and advancing precision medicine. Yet, challenges remain in collecting, sharing, and linking this data to support AI applications.

Two decades ago, the Human Genome Project (HGP) successfully decoded the human genetic code. A core principle that contributed to the success of this international scientific effort was data sharing.[94] HGP leadership established the Bermuda Principles, which committed all project participants to electronically share data and make human genome sequences publicly available in order to advance scientific progress.[95] Since then, advances in sequencing techniques have generated vast amounts of genomic data from millions of individuals, now stored in repositories around the world. The Bermuda Principles, adopted by journals and funding agencies, aim to ensure that published genome study data remains accessible to all, fostering further scientific discoveries.[96]

However, the vast influx of diverse and sensitive data has prompted governments, funding agencies, and research consortia working with them to develop custom databases for managing this information. The existence of incompatible and non-shareable datasets further complicates efforts to link genomic and phenotypic data, which is key for breakthroughs.[97] Uncovering the genetic causes of complex diseases such as cancer and cardiovascular disorders requires pinpointing multiple genetic risk factors across the genome. This is achieved through genome-wide association studies (GWAS), which analyze the genotypes of hundreds of thousands of individuals, both with and without the disease, to identify relevant genetic variations.[98] These studies integrate both genomic and phenotypic data, which often come from EHRs and medical cohort studies—such as the Framingham Heart Study, which tracked multiple generations to investigate cardiovascular risk factors—providing insights into how genetic variations contribute to disease. Yet, data integration problems present challenges for GWAS, as the success of these studies relies on the integration of large-scale genomic and phenotypic datasets.

Since 2005, over 10,700 GWAS have been conducted, generating vast datasets largely stored in controlled-access databases to protect personal information for legal and ethical reasons. Researchers must navigate strict vetting processes to access such data. For example, National Institutes of Health (NIH) grant recipients are required to deposit their GWAS data into an official repository, the Database for Genotypes and Phenotypes (dbGaP), while European researchers are encouraged to use the European Genome-Phenome Archive (EGA) housed at EMBL-EBI. Yet, despite such efforts, the process of accessing such publicly available data remains cumbersome.[99] Moreover, a growing number of countries around the world have initiated large-scale genomic sequencing efforts on their own populations, including America’s All of Us Research Program, Genomas Brasil, the Qatar Genome Program, the Turkish Genome Project, and the Korean Genome Project, among others. Such global genomic sequencing initiatives are crucial for capturing genetic diversity across populations, which can lead to more inclusive and effective biopharmaceutical research. For now, though, how the data from these initiatives will be shared and integrated into existing workstreams and consortia remains unclear, diluting the potential of such large-scale datasets.

Initiatives such as the Global Alliance for Genomics and Health (GA4GH) work to create technical standards to link disparate genomic databases globally.[100] Additionally, the GWAS Catalog, an open-access collaboration between EMBL-EBI and the National Human Genome Research Institute (NHGRI), is working to standardize and centralize GWAS data to support both a deeper understanding of disease mechanisms and the discovery of new therapeutic targets and causal variants.[101] Other efforts, such as the Human Cell Atlas (HCA), a global consortium creating publicly accessible, detailed reference maps of human cells, further seek to support AI-enabled drug development and advance our understanding of health and disease.[102]

Electronic Health Records

In addition to genomic data, clinical data—often stored in EHRs—provides crucial details about patients’ medical histories and plays a vital role in deepening our understanding of disease mechanisms. Together, these two types of data are essential for uncovering how genetic variations (genotypes) influence observable traits (phenotypes) and disease outcomes, forming the foundation for building effective AI models for drug development.

The push for electronic health information exchange began in 2009 with the signing of the American Recovery and Reinvestment Act (ARRA), which established the Health Information Technology for Economic and Clinical Health (HITECH) Act. This provision promoted the digitization of patient records to improve health-care delivery, allocating over $35 billion to support the adoption of EHRs by hospitals and clinics.[103] Today, EHR adoption is widespread, with 96 percent of U.S. hospitals and almost 80 percent of office-based physicians using them.[104] Some of the largest EHR systems include Epic Systems, Oracle Cerner, and MEDITECH. Epic holds the largest market share at 37.7 percent, followed by Oracle Cerner at 21.7 percent, and MEDITECH at 13.2 percent.[105] Epic, the leading provider in the United States, is used by many prominent hospital systems and academic medical centers, including the Cleveland Clinic, the Mayo Clinic, and Johns Hopkins Medicine.

Beyond the issues posed by privacy concerns, the presence of hundreds of EHR systems across the United States, each with distinct clinical terminologies and technical standards, complicates interoperability. True interoperability requires not just the exchange but also the use of standardized data, a long-standing issue in the U.S. health-care system that continues to limit electronic data sharing. Moreover, achieving interoperability depends on collaboration between many stakeholders, including patients, providers, software vendors, legislators, and health information technology (IT) professionals. Yet, the health-care system remains fragmented, with data often treated as a commodity for competitive advantage rather than a shared resource for improving patient care.[106]

Recently, the FDA pushed for an increased use of RWD—including EHRs, billing data, and administrative claims—in drug development. This data, rich in patient details including disease status, treatments, procedures, and outcomes, could enhance drug development. With the growing EHR adoption across the United States, the FDA has issued guidance on using EHR data in clinical research and regulatory submissions.[107] However, integrating RWD with AI presents several challenges. EHRs are often inconsistently documented by clinicians, making it difficult to extract data, such as treatment outcomes, uniformly. Issues including missing data and selection bias further complicate the analysis. Additionally, the lack of standardization and harmonization across different data sources hinders both replication and reproducibility.[108]

Despite these challenges, the creation of large research networks such as the national Patient-Centered Clinical Research Network (PCORnet), the Observational Health Data Sciences and Information (OHDSI) consortium, and the Clinical and Translational Science Award Accrual to Clinical Trials (CTSA ACT) network have improved data sharing. These networks span multiple sites around the world, employing standardized data infrastructure, and provide access to a diverse patient population, enabling large-scale studies to explore factors influencing health and disease.[109]

EHR-driven genomic research is valuable, as an integrated approach can augment GWAS by replicating the studies and extending conventional GWAS findings to underrepresented populations.

In 2013, the National Science Foundation (NSF) convened a workshop to identify challenges and set a research agenda to achieve a national-scale Learning Health System (LHS). An LHS is an infrastructure that enables rapid data sharing and knowledge generation to inform health-care decisions, ultimately improving health outcomes. The agenda emphasized the need for systematic integration of data, evidence, and practice, highlighting the importance of standardizing data stored in EHRs. By facilitating access to comprehensive patient data, EHRs enable health-care providers to engage in continuous learning. The agenda noted that, to maximize the potential of EHRs in fostering a collaborative, well-functioning LHS, establishing standardized data-sharing protocols and ensuring interoperability are essential for adaptation and innovation in response to emerging health-care challenges.[110]

Linking EHRs with other data sources, including genomic data, can further enable the study of drug-phenotype and drug-gene interactions. Researchers from the Vanderbilt Electronic Systems for Pharmacogenomic Assessment (VESPA) project have shown that EHR-based biobanks—repositories of human biological materials—provide cost-effective tools for biomedical discoveries, as they allow for the reuse of biological samples across multiple studies and enhance research efficiency.[111] Such EHR-driven genomic research (EDGR) is valuable, as an integrated approach can augment GWAS by replicating the studies and extending conventional GWAS findings to underrepresented populations. This is because EDGR cohorts reflect the populations cared for in clinical settings, which are much more diverse than those typically represented in genomic research.[112] EHR-linked genomic studies can also enable phenome-wide association studies (PheWAS), which analyze the effect of genetic variants across multiple diseases and traits captured in EHRs. A major obstacle, however, remains the need for the development and adoption of international standardized consent models from patients for the use of their clinical data in biomedical research, as consent regimens vary significantly across regions.[113]

Lucia Savage, chief privacy and regulatory officer at Omada Health and former chief privacy officer at the U.S. Department of Health and Human Services (HHS) Office of the National Coordinator for Health IT, has highlighted that regulations governing the use of data collected through traditional health-care processes vary widely across countries. In the United States, there is more leeway for using patient data in tertiary research applications, such as developing AI models for drug development, as the Health Insurance Portability and Accountability Act (HIPAA) allows the use of patients’ health data for research without explicit consent when that data is de-identified. In contrast, many other countries, including those governed by the EU’s General Data Protection Regulation (GDPR), impose stricter restrictions on such uses and the international transfers of personal data. These differences in legal frameworks make cross-border data sharing and access more challenging. Therefore, it is crucial to consider the regulatory environment in which the data was collected and explore possibilities for interoperability to navigate such challenges.[114]

Gilead Using AI to Identify Underdiagnosed Hepatitis C Individuals[115]

Gilead is leveraging AI to identify underdiagnosed individuals, particularly for diseases such as HIV and hepatitis C virus (HCV), where traditional screening methods are expensive and burdensome for patients. In diagnosis, there is a trade-off between privacy and data usability, as often, the higher the privacy standard, the less usable the data. Hence, striking a balance between privacy and usability is key. Too much privacy can make it difficult to identify patients, particularly from underserved communities. Greater data access, sharing, and interoperability, supported by privacy-enhancing techniques, could help with more accurate diagnoses.

Gilead’s machine learning algorithm, trained on ambulatory electronic medical records (EMRs), aims to predict initial HCV diagnoses and identify undiagnosed HCV patients, prioritizing them for screening. The EMRs used to train the algorithm include data on age, gender, HCV-related predictors such as birth cohort, opioid usage, laboratory test results, diagnosis codes, treatments, data on social determinants of health, chronic health conditions, and other variables.

HCV, one of the most common blood-borne viruses and a leading cause of liver-related illness in the United States, is the target of a World Health Organization (WHO) initiative to eradicate it as a public health threat by 2030. The National Academies of Science, Engineering, and Medicine (NASEM) have highlighted improved detection of undiagnosed HCV cases as central to eliminating the virus. Universal one-time screening is recommended in the United States, but it is difficult to implement in practice, and screening rates remain low. Gilead’s AI approach, trained on a large EMR dataset, requires fewer patients to be screened compared with traditional methods while improving precision. The AI model can prioritize patients for HCV screening, and has the potential to make resource allocation more efficient, reduce clinician workload, prevent disease progression, and lower health-care costs.

Integrating AI into EMR systems and clinical workflows shows significant potential for accelerating HCV elimination efforts. Effective targeting could improve diagnosis rates, reduce morbidity and mortality through earlier detection, and identify patients often overlooked by risk-based screening. The AI algorithm generates continuous risk scores, allowing for a more nuanced triage process and targeted screening—patients with higher scores calculated from their EMR data can be prioritized for screening, diagnosis, and linkage to care. This data-based approach can help identify harder-to-find patients in a way that does not stigmatize individuals. It could also improve the allocation of finite health-care resources and the return on investment of screening programs, as well as the rates of HCV diagnoses, treatment, and transmission.

Gilead’s AI use case demonstrates how machine learning and EMRs, when combined, present new opportunities to improve population health management and achieve better clinical outcomes. Moreover, it supports public policies that promote EMR adoption and interoperability, which could play a critical role in identifying underdiagnosed individuals, particularly within underserved communities. By enhancing data sharing and integration, these policies could not only streamline clinical workflows, but also help reduce health disparities, supporting efforts to improve health equity and ensure timely, effective care for all patients.

Beyond RWD, there is growing interest in synthetic data—artificially generated by computer algorithms to simulate the statistical properties of RWD—as a scalable, cost-effective, and privacy-preserving alternative for training AI models. However, challenges remain: Synthetic data may not fully capture the complexity and variability of real clinical populations, such as diverse demographics and the intricate biological and clinical interactions that influence treatment responses and side effects. There are also questions about how well synthetic data reflects the evolving nature of diseases, treatment protocols, and patient populations. The use of synthetic data in drug development remains an emerging area of research.[116]

Pharmaceutical Data

Pharmaceutical data, alongside genomic and clinical data, plays a key role in biopharmaceutical innovation. While biopharmaceutical companies often prefer to keep their data private for competitive reasons, collaboration between companies and research institutions could significantly speed up drug development. Public-private partnerships (PPPs) that bring together academia, industry, and government offer a path forward, particularly in precompetitive research, where the risks are lower. One notable example is the Innovative Health Initiative (IHI), the largest biomedical PPP in the world, launched in 2008 by the European Union and the European Federation of Pharmaceutical Industries and Associations (EFPIA) to accelerate the development of next-generation therapies.

By encouraging the sharing of data among academia, industry, and government, particularly in precompetitive research, PPPs are producing valuable training data for AI-enabled drug development.

Other examples include the Alzheimer’s Disease Neuroimaging Initiative (ADNI), launched by the NIH’s National Institute on Aging (NIA) to advance Alzheimer’s research; Project Data Sphere, launched by the CEO Roundtable on Cancer to accelerate drug development by facilitating access to de-identified oncology clinical trial data; Open Targets, which uses biological data to identify and validate therapeutic targets; and the Structural Genomics Consortium, focused on advancing knowledge of human protein structures.[117] By encouraging the sharing of data among academia, industry, and government, particularly in precompetitive research, PPPs are producing valuable training data for AI-enabled drug development.

Beyond PPPs, alternative payment models can provide incentives for pharmaceutical companies to share data. Data monetization allows companies to profit from their data by providing access to anonymized datasets on a subscription basis. For example, Flatiron Health, acquired by Roche, operates under this model by aggregating and analyzing de-identified oncology RWD to offer insights that enhance cancer care and accelerate drug development. This model treats data as a product, creating a continuous revenue stream for companies.[118]

Role of Privacy-Enhancing Technologies

When sharing data, a key consideration is ensuring that it can be done in a secure, privacy-enhancing manner, given the confidential and sensitive nature of the data involved in drug development. Traditional methods of sharing data between different entities involve sending data to third parties, such as by creating copies of data for each entity or aggregating data in a single repository that all entities can access. Entities can maintain privacy of shared data through access controls, oversight, and legal mechanisms. However, newer decentralized methods for data sharing allow multiple entities to collaborate on AI model training without sharing raw internal data. Decentralized data sharing keeps data distributed across multiple locations, eliminating the need to transfer or aggregate it in a central repository. This method is particularly valuable in privacy-sensitive settings, as it enables collaboration while safeguarding sensitive information. In drug development, decentralized approaches enable different research institutions and companies to share data, supporting the development of safer, more effective therapies.[119]

PETs can support the training of AI algorithms on vast biological, chemical, and clinical datasets, accelerating AI-enabled drug development.

Key aspects of decentralized data sharing include a strong emphasis on data privacy, collaboration, and security. In this approach, each party retains control over its data, lowering the risk of leaks from third parties and minimizing the threat of a single point of failure that could compromise the entire dataset. Decentralized approaches are often supported by privacy-enhancing technologies (PETs), such as secure multiparty computation (SPMC), federated learning (FL), fully homomorphic encryption (FHE), and differential privacy (DP), which enable privacy-enhancing access to and analysis of diverse data sources.[120] PETs can support the training of AI algorithms on vast biological, chemical, and clinical datasets, accelerating AI-enabled drug development.[121]

Secure Multiparty Computation

SPMC is a cryptographic method that enables multiple participants to collaborate on computations using private data without revealing it to each other, allowing teams to work together with internal data while maintaining its confidentiality. SPMC ensures that only the final results of a computation are revealed to participants without disclosing any intermediate information from a joint analysis, thereby providing a higher level of security.[122]

In drug discovery, SPMC has several important applications. For instance, it can be used to predict interactions between therapeutic targets and drugs based on genomic and chemical data. This is a critical step in developing promising drugs, and large datasets are required to train AI models to generate accurate predictions. Public datasets, including ChEMBL—a database of bioactivity data for drug-like compounds—often rely on contributions from academic institutions, pharmaceutical companies, and collaborative projects and help train such models on vast data. But they are only one piece of the puzzle. The integration of other sensitive data, including EHRs, could further enhance algorithms. Methods such as SMPC can help, and feasible SMPC protocols have been deployed for GWAS and drug-target interaction prediction problems. However, efforts to develop comprehensive SMPC protocols for AI-enabled drug discovery are still a work in progress.[123]

Consider how SMPC can be useful in drug discovery. Pharmaceutical companies, even competitors, benefit from collaboration but must protect their proprietary data. For example, Company A, which specializes in high-throughput screening of small molecules, and Company B, which has comprehensive datasets of biological targets, can use SMPC to securely combine their datasets to discover compounds that interact with certain biological targets. In this manner, they can jointly identify potential drug candidates for further exploration, all while safeguarding each company’s proprietary data and enhancing their research.[124]

Federated Learning

FL emphasizes collaborative training of AI models while keeping the training data decentralized and local to each participant. In this approach, each participant trains the AI model on their own data and shares only the model parameters—rather than raw data—with a central server, which aggregates these parameters to enhance the global AI model. The refined model is then returned to the participants for further local training, enabling collaborative model development without exchanging sensitive data. Unlike SMPC, which allows multiple parties to jointly perform computations on private data, FL centers on the collaborative building of models. For instance, hospitals could use FL to collaboratively train a model that predicts patient outcomes based on their EHRs without exchanging any actual patient data.[125]

In drug discovery, FL is particularly dynamic and offers numerous applications. A notable example is IHI’s MELLODDY (MachinE Learning Ledger Orchestration for Drug DiscoverY) project, which embodies a blend of cooperation and competition. This initiative emerged from a joint call by 10 of the world’s largest pharmaceutical companies aimed at developing predictive AI models for drug discovery while safeguarding data privacy. The collective dataset for this project will encompass over 10 million small molecules and more than 1 billion activity labels measured in biological assays, making it one of the largest FL-based efforts in the field.[126]

Fully Homomorphic Encryption

FHE is another powerful technique that enables computations on encrypted data without requiring decryption, ensuring that data remains secure.[127] In 2017, experts from industry, government, and academia established the Homomorphic Encryption Standardization Consortium, which developed a standard in 2018 outlining security requirements for FHE applications.[128]

In drug discovery, FHE presents a promising solution to privacy concerns by allowing scientists to perform computations on encrypted data, thus protecting sensitive information such as the structures of new drug compounds and genomic data.[129] While algorithmic improvements have already enhanced the efficiency of FHE, widespread implementation still faces challenges, including high computational demands and integration with existing data workflows. Advances in hardware acceleration and optimized algorithms could, however, enhance its use in privacy-enhancing decentralized AI model building.[130]

Differential Privacy

DP, introduced by Cynthia Dwork in 2006, is a mathematical concept that enables data sharing while preserving individual privacy. The core principle of DP is that the outcome of a computation should remain nearly the same whether a single data point is included or excluded. This is achieved by adding calibrated noise to the results, effectively hiding individual contributions while maintaining the overall accuracy of the analysis. Although DP results are approximate and may vary with repeated analyses, sensibly calibrated noise enables AI models to balance privacy and utility. Still, DP requires careful tuning of noise levels to ensure that data utility is not compromised.[131]

DP has proven particularly useful in applications such as drug sensitivity prediction. For example, a 2022 study shows that combining DP with deep learning, a technique known as differentially private deep learning, can effectively predict breast cancer status, cancer type, and drug sensitivity using genomic data, all while preserving individual privacy.[132]

Beyond the importance of advancing the development of PETs—including SPMC, FL, FHE, and DP—to enable secure data sharing in AI-enabled drug development, it is crucial to make these tools accessible and user friendly for a wide range of scientists working in biopharmaceutical innovation. Organizations at the forefront of PET development include U.S.-based OpenDP, Duality Technologies, and Actuate, as well as the United Kingdom’s OpenMined.[133] For example, Duality Technologies has collaborated with leading research institutions such as the Dana-Farber Cancer Institute to enhance oncology outcomes.[134]

Algorithm Validation and Bias Mitigation