Picking the Right Policy Solutions for AI Concerns

Some concerns are legitimate, but others are not. Some require immediate regulatory responses, but many do not. And a few require regulations addressing AI specifically, but most do not.

Contents

Pursue Regulation That Is…

Pursue Nonregulatory Policies That Are…

Overview of Policy Needs for AI Concerns

Issue 1.1: AI May Expose Personal Information in a Data Breach

Issue 1.2: AI May Reveal Personal Information Included in Training Data

Issue 1.3: AI May Enable Government Surveillance

Issue 1.4: AI May Enable Workplace Surveillance

Issue 1.5: AI May Infer Sensitive Information

Issue 1.6: AI May Help Bad Actors Harass and Publicly Shame Individuals

Issue 2.1: AI May Cause Mass Unemployment

Issue 2.2: AI May Displace Blue Collar Workers

Issue 2.3: AI May Displace White-Collar Workers

Issue 3.1: AI May Have Political Biases

Issue 3.2: AI May Fuel Deepfakes in Elections

Issue 3.3: AI May Manipulate Voters

Issue 3.4: AI May Fuel Unhealthy Personal Attachments

Issue 3.5: AI May Perpetuate Discrimination

Issue 3.6: AI May Make Harmful Decisions

Issue 4.1: AI May Exacerbate Surveillance Capitalism

Issue 5.1: AI May Enable Firms With Key Inputs to Control The Market

Issue 5.2: AI May Reinforce Tech Monopolies

Issue 6.1: AI May Make It Easier To Build Bioweapons

Issue 6.2: AI May Create Novel Biothreats

Issue 6.3: AI May Become God-Like and “Superintelligent”

Issue 6.4: AI May Cause Energy Use to Spiral Out of Control

7. Intellectual Property Concerns

Issue 7.1: AI May Unlawfully Train on Copyrighted Content

Issue 7.2: AI May Create Infringing Content

Issue 7.3: AI May Infringe on Publicity Rights

Issue 8.1: AI May Enable Fraud and Identity Theft

Issue 8.2: AI May Enable Cyberattacks

Issue 8.3: AI May Create Safety Risks

Appendix: The Right Policy Solutions for AI Concerns

Introduction

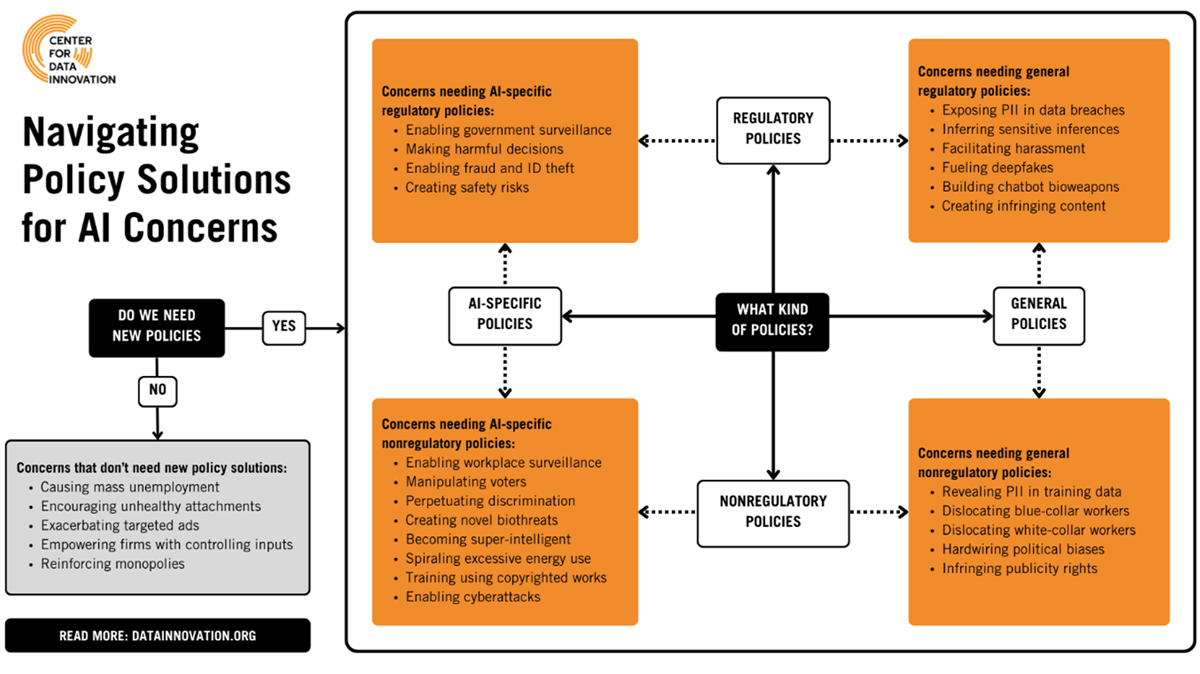

Policymakers find themselves amid a chorus of calls demanding that they act swiftly to address risks from artificial intelligence (AI). Concerns span a spectrum of social and economic issues, from AI displacing workers and fueling misinformation to threatening privacy, fundamental rights, and even human civilization. Some concerns are legitimate, but others are not. Some require immediate regulatory responses, but many do not. And a few require regulations addressing AI specifically, but most do not. Discerning which concerns merit responses and what types of policy action they warrant is necessary to craft targeted, impactful, and effective policies to address the real challenges AI poses while avoiding unnecessary regulatory burdens that will stifle innovation.

This report covers 28 of the prevailing concerns about AI and, for each one, describes the nature of the concern, whether and how the concern is unique to AI, and what kind of policy response, if any, is appropriate. To be sure, additional concerns could have been included, and others will be raised in the future, but a review of the literature on AI and the growing corpus of AI regulatory actions indicates that these are the major concerns policymakers have to contend with. The report groups the 28 concerns into 8 sections: privacy, workforce, society, consumers, markets, catastrophic scenarios, intellectual property, and safety and security. Each concern could warrant a report of its own, but the goal here is to distill the essence of each concern and offer a pragmatic, clear-eyed response.

For each issue, we categorize the appropriate policy response as follows:

Pursue Regulation That Is…

AI-specific: Some concerns about AI are best addressed by enacting or updating regulation that specifically targets AI systems. These regulations may prohibit certain types of AI systems, create or expand regulatory oversight of AI systems, or impose obligations on the developers and operators of AI systems, such as requiring audits, information disclosures, or impact assessments.

General: Some concerns about AI are best addressed by enacting or updating regulation that does not specifically target AI but instead creates broad legal frameworks that apply across various industries and sectors. Examples of these regulations include data privacy laws, political advertising laws, and revenge porn laws.

Pursue Nonregulatory Policies That Are…

AI-specific: Some concerns about AI are best addressed by implementing nonregulatory policies that target AI. Examples of these policies include funding AI research and development or supporting the development and use of AI-specific industry standards.

General: Some concerns about AI are best addressed by implementing nonregulatory policies that do not target AI but instead focus on the broader technological and societal context in which AI systems operate. Examples of these policies include job dislocation policies to mitigate the risks of a more turbulent labor market or policies to improve federal data quality.

No Policy Needed

Some concerns are best addressed by existing policies or by allowing society and markets to adapt over time. Policymakers do not need to implement new regulatory or nonregulatory policies at this time.

Overview of Policy Needs for AI Concerns

Concerns that warrant AI-specific regulations:

▪ 1.3. AI may enable government surveillance.

▪ 3.6. AI may make harmful decisions.

▪ 8.1. AI may enable fraud and identity theft.

▪ 8.3. AI may create safety risks.

Concerns that warrant general regulations:

▪ 1.1. AI may expose PII in a data breach.

▪ 1.5. AI may infer sensitive information.

▪ 1.6. AI may help bad actors harass and publicly shame individuals.

▪ 3.2. AI may fuel deepfakes in elections.

▪ 6.1. AI may make it easier to build bioweapons.

▪ 7.3. AI may infringe on publicity rights.

Concerns that warrant AI-specific nonregulatory policies:

▪ 1.4. AI may enable workplace surveillance.

▪ 3.3. AI may manipulate voters.

▪ 3.5. AI may perpetuate discrimination.

▪ 6.2. AI may create novel biothreats.

▪ 6.3. AI may become God-like and “superintelligent.”

▪ 6.4. AI may cause energy use to spiral out of control.

▪ 7.1. AI may unlawfully train on copyrighted content.

▪ 8.2. AI may enable cyberattacks.

Concerns that warrant general nonregulatory policies:

▪ 1.2. AI may reveal PII included in training data.

▪ 2.2. AI may dislocate blue collar workers.

▪ 2.3. AI may dislocate white collar workers.

▪ 3.1. AI may have political biases.

▪ 7.2. AI may create infringing content.

Concerns that do not warrant new policies:

▪ 2.1. AI may cause mass unemployment.

▪ 3.4. AI may fuel unhealthy personal attachments.

▪ 4.1. AI may exacerbate surveillance capitalism.

▪ 5.1. AI may enable firms with key inputs to control the market.

▪ 5.2. AI may reinforce tech monopolies.

Figure 1: Summary of analysis

1. Privacy

| # | Risk | Policy needs | Policy solution |
|---|---|---|---|
| 1.1 | AI may expose personally identifiable information in a data breach. | General regulations | Policymakers should require companies to publish security policies to promote transparency with consumers. Congress should pass federal data breach notification legislation. |
| 1.2 | AI may reveal sensitive information included in training data. | General nonregulatory policies | Policymakers should fund research for privacy- and security-enhancing technologies and support industry-led standards for responsible web scraping. |
| 1.3 | AI may enable government surveillance. | AI-specific regulations | Congress should direct the Department of Justice (DOJ) to establish guidelines for use by state and local law enforcement in investigations that outline specific use cases and capabilities, including when a warrant is necessary for use, as well as transparency guidelines for when to notify the public of law enforcement using AI. |
| 1.4 | AI may enable workplace surveillance. | AI-specific nonregulatory policies | Policymakers should help set the quality and performance standards of AI technologies used in the workplace. |
| 1.5 | AI may infer sensitive information. | General regulations | Policymakers should craft and enact comprehensive national privacy legislation that addresses the risks of data-driven inference in a tech-neutral way. |
| 1.6 | AI may help bad actors harass and publicly shame individuals. | General regulations | Congress should outlaw the nonconsensual distribution of all sexually explicit images, including deepfakes that duplicate individuals’ likenesses in sexually explicit images, and create a federal statute that prohibits revenge porn, including those with computer-generated images. |

Issue 1.1: AI May Expose Personal Information in a Data Breach

The issue: Data breaches occur when someone gains unauthorized access to data. For instance, an attacker might circumvent security measures to obtain sensitive data, or an insider might inappropriately access confidential information. Users may share personally identifiable information (PII) with AI systems, such as chatbots, offering legal, financial, or health services. In the event of a data breach, the transcripts of these conversations could be exposed and accessed improperly, revealing sensitive information. An example of a data breach is a much-reported incident that occurred with OpenAI’s ChatGPT chatbot in March 2023. Due to a bug in an open-source library the system uses, some users were able to see titles from other users’ chat histories.[1]

While it is true that AI systems could be subject to data breaches, just like any IT system, they have not created or exacerbated the underlying privacy and security risks. Data breaches have been an unfortunate, yet regular, occurrence for the past two decades. In 2022, there were nearly 1,800 data breaches in the United States impacting hundreds of millions of Americans.[2]

The solution: Policymakers should address the larger problem of data breaches rather than focus exclusively on data breaches involving AI systems. One thing Congress can do is require companies to publish security policies to promote transparency with consumers. Most companies publish privacy policies, which create a transparent and accountable mechanism for regulators to ensure companies are adhering to their stated policies. But no such practice exists for information security practices, which has resulted in vague standards, regulation by buzzword, and information asymmetry in markets. By publishing security policies, companies would be motivated to describe the types of security measures they have in place rather than just make claims of taking “reasonable security measures.” This is a concrete step that policymakers can take to improve security practices in the private sector.[3]

Moreover, Congress should pass data breach notification legislation that preempts conflicting state laws.[4] All 50 states, as well as the District of Columbia, Guam, Puerto Rico, and the Virgin Islands, have data breach laws; however, each jurisdiction has its own set of rules on how quickly to report a data breach or to whom a security incident should be reported. This patchwork quilt of differing requirements provides decidedly uneven protection for consumers and creates an unnecessarily complex situation for companies, which must spend more time navigating this murky legal terrain than actually protecting consumer data.[5]

Issue 1.2: AI May Reveal Personal Information Included in Training Data

The issue: Data leaks occur when AI systems reveal private information included in training data. For example, an AI model trained on confidential user data, such as private contracts or medical records, may unintentionally reveal this private information to users. A case in point was the incident wherein popular chatbot ChatGPT appeared to reveal some of the bits of data it had been trained on when researchers prompted it to repeat random words forever.[6] Similarly, AI systems may disclose private information when it is inadvertently included in training data, such as personal information scraped from public websites.[7]

While data leaks are a legitimate privacy concern, they are not unique to AI. Data leaks were an early concern about search engines too, as attackers could use search engines to discover a trove of sensitive data, such as credit card information, Social Security numbers, and passwords, that was scattered across the Internet, often without the affected individuals’ awareness.[8] Internet search engines also widely deploy web crawlers, which are automated programs that index the content of webpages, and nongovernment solutions have successfully addressed the risks posed by the scraping of publicly available data in the past.

The solution: Policymakers can help minimize or eliminate the need for AI-enabled services to process confidential data while still maintaining the benefits of those services by investing in research for privacy- and security-enhancing technologies. These are not specific to AI, but they will have important uses for AI. For instance, policymakers should support additional research on topics such as secure multiparty computation, homomorphic encryption, differential privacy, federated learning, zero-trust architecture, and synthetic data.[9] They should also fund research exploring the use of “data privacy vaults” to isolate and protect sensitive data in AI systems.[10] In this scenario, any PII would be replaced with deidentified data so that large language models (LLMs) would not have access to any sensitive data, thereby preventing data leaks during training and inference and ensuring only authorized users could access the PII. Regarding AI systems that scrape publicly available data, policymakers should support the already burgeoning set of industry-led standards for web scraping.[11] The private sector is already taking steps to give website operators more control over whether AI web crawlers scrape their sites.[12] Indeed, many websites can use the existing Robots Exclusion Protocol to restrict web crawlers from popular AI companies.
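As a minimal sketch of how the Robots Exclusion Protocol can express such a restriction, the Python example below parses an illustrative robots.txt file with the standard library's `urllib.robotparser` module and checks which crawlers may fetch a given page. The file contents, the `example.com` URLs, the `ExampleSearchBot` name, and the `may_crawl` helper are all assumptions made for illustration, and `GPTBot` appears only as one example of an AI crawler's user-agent token. Compliance with robots.txt is voluntary on the crawler's side, so the sketch shows how a well-behaved crawler would read the rules rather than any enforcement mechanism.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (an assumption, not taken from any real site):
# block one AI crawler entirely, and keep every other crawler out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_crawl(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # The AI crawler named in the file is blocked from the whole site.
    print(may_crawl("GPTBot", "https://example.com/articles/1"))            # False
    # A hypothetical search crawler falls under the wildcard rules.
    print(may_crawl("ExampleSearchBot", "https://example.com/articles/1"))  # True
    print(may_crawl("ExampleSearchBot", "https://example.com/private/x"))   # False
```

Because the rules are keyed to user-agent tokens, a website operator can restrict AI crawlers while leaving search indexing untouched, which is the kind of operator control the industry-led approach relies on.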

There may be instances when PII ends up on public websites that AI systems scrape and consumers don’t want this information there. Federal data privacy legislation would create a baseline set of consumer rights for how organizations collect and use personal data. This legislation should preempt state laws, ensure reliable enforcement, streamline regulation, and minimize the impact on innovation.[13]

Issue 1.3: AI May Enable Government Surveillance

The issue: AI makes it easier to analyze large volumes of data, including about individuals, which may lead to increased government surveillance. For instance, governments can track individuals in public spaces, such as through facial recognition technology, or infer sensitive information about individuals based on less-sensitive data.

There can be legitimate reasons for this concern. Governments in certain countries have disturbing histories of intruding into the private lives of their citizens and many fear that they may revert to this type of activity in the future. And some countries, such as China, significantly limit the personal freedoms of their citizens and use surveillance to threaten human rights. Indeed, critics point out that China uses AI-enabled tracking and emotion-recognition technology as part of its domestic surveillance activities, most notably against its Uyghur population, and argue that democratic nations should not use the same technology.[14] They fear a slippery slope wherein Western governments might exploit AI for nefarious purposes that trample on citizens’ basic rights.

The solution: Law enforcement agencies should take preemptive steps to recognize the potential impacts of AI on perceptions of acceptable government use of technology for law enforcement activities. Congress should direct DOJ to establish guidelines for use by state and local law enforcement in investigations that outline specific use cases and capabilities, including when a warrant is necessary for use, as well as transparency guidelines for when and how to notify the public of AI use by law enforcement officials. The Facial Recognition Technology Warrant Act introduced in 2019, which requires federal law enforcement to obtain a court order before using facial recognition technology to conduct targeted ongoing public surveillance of an individual, could serve as a useful model to establish limitations on use, legal requirements for appropriate use, transparency, and approval processes for other AI-enabled law enforcement technologies.[15] In addition, as new AI products for law enforcement become available, they should undergo a predeployment review to ensure they meet First and Fourth Amendment protection standards, just as any new technology should. Such assessments should be conducted by federal officials familiar with existing legal requirements and potential applications. DOJ should also conduct independent testing of police tech, as the National Institute of Standards and Technology (NIST) has done for facial recognition algorithms during its Face Recognition Vendor Test, to ensure the technology is accurate and unbiased.[16] The General Services Administration (GSA) should establish guidelines to assist agencies in complying with existing government-wide privacy requirements when implementing AI solutions. These guidelines should address different government use cases, including for training, service provision, and research.

Issue 1.4: AI May Enable Workplace Surveillance

The issue: One concern about the use of AI in the workplace is that employee monitoring may become unduly invasive, stemming in part from the fact that workers may not know how or when their employers are using the technology. For instance, the Trades Union Congress (TUC), a national trade union center representing 48 unions across the United Kingdom, published a report in 2020 that found that 50 percent of U.K. employees believe their companies may be using AI systems they are not aware of.[17] A more complex concern is that the data AI systems collect can reveal, or enable employers to infer, information of varying sensitivity, which, if misused, risks autonomy violations. Consider an AI system with eye-tracking technology that monitors the behavior of delivery drivers by tracking their gaze patterns. Many studies have found that people with autism react differently to stimuli when driving, so an employer may infer from eye-tracking AI software which drivers have autism, even though employees may want to keep this information private.[18]

However, as a general rule, employees in the United States have little expectation of privacy while on company grounds or using company equipment, including company computers or vehicles, according to judicial rulings by U.S. courts and existing federal laws.[19] Addressing AI surveillance concerns with AI-specific regulation would not align with the current legal framework for employee privacy and therefore any legal reforms should address employee privacy expectations more broadly.

The solution: Policymakers should support the responsible adoption of AI in the workplace, including by helping set the quality and performance standards of AI technologies used in the workplace.[20] For instance, they should fund independent testing of commercial systems that measure the behaviors and performance of employees, much like the U.S. Department of Commerce did when it launched a multistakeholder process for commercial use of facial recognition. In June 2016, a group of stakeholders in that process reached a consensus on a set of best practices that offered guidelines for protecting consumer privacy.[21] Independent testing would help fill knowledge gaps ranging from the accuracy and efficacy of different workplace tools to the potential uses of these technologies in specific workforce-related applications.

Additionally, the Equal Employment Opportunity Commission (EEOC) should investigate the potential autonomy violations from processing employee data as part of its AI and algorithmic fairness initiative. There is currently no comprehensive understanding of the adoption, design, and impact of AI tools that process employee data.[22] The EEOC’s agency-wide initiative currently focuses on potential harms from bias and discrimination, but its listening sessions with key stakeholders about algorithmic tools and their employment ramifications would be valuable for gaining insights into potential autonomy violations.

Issue 1.5: AI May Infer Sensitive Information

The issue: AI can infer information about people’s identities, habits, beliefs, preferences, and medical conditions, including information that individuals may not know themselves, based on other data about those individuals. AI systems can use computational techniques, such as machine learning, to make data-driven inferences. For instance, an AI system may be able to detect rare genetic conditions from an image of a child’s face, or AI-enabled online advertising may infer information about users, such as predicting their age or political leanings based on their online activity. Disclosure of such information without a user’s consent or knowledge can lead to significant reputational harm or embarrassment socially, politically, or professionally when the nature of the inferred information is particularly sensitive or highly personal. While data-driven inferences may present novel risks, these types of inferences can also be made without AI systems, using standard statistical methods.

The solution: Policymakers should craft and enact comprehensive national privacy legislation that addresses the risks of data-driven inference in a tech-neutral way. This would better position regulators and developers alike to ensure necessary safeguards are consistently implemented as these technologies continue to evolve.[23] Policymakers should enact privacy legislation that establishes clear guidelines for the collection, processing, and sharing of various types of data with consideration for varying levels of sensitivity; implements user data privacy rights and safeguards against risks of harm; and strengthens notice, transparency, and consent practices to ensure users can make informed decisions about the data they choose to share, including sensitive biometric and biometrically derived information.

Because biometric information is central to many emerging tech use cases and has inference-related risks, any privacy regulations should include clear definitions of biometric identifying and biometrically derived data and present transparency, consent, and choice requirements consistent with the purpose of its collection and risks of harm.

The relevant federal agencies and regulatory bodies that oversee existing privacy regulations should also provide explicit guidance on their application for any new questions arising from AI. For example, the Department of Health and Human Services could offer guidance on when predictions made by AI systems constitute “protected health information” under HIPAA (the Health Insurance Portability and Accountability Act).

Issue 1.6: AI May Help Bad Actors Harass and Publicly Shame Individuals

The issue: AI makes it easier to create fake images, audio, and videos of individuals, which can be used to harass them and harm their personal and professional reputations. Deepfakes, a portmanteau of “deep learning” and “fake,” have been around since the end of 2017, created mostly by people editing the faces of celebrities into pornography. As with all types of nonconsensual pornography, deepfake revenge porn that portrays an individual in a sexual situation that never actually happened can have devastating consequences for victims’ lives and livelihoods. More recently, deepfakes have raised risks for noncelebrities too. In one recent case, students at a New Jersey high school allegedly used AI image generators to produce fake nude images of their female classmates.[24] Deepfakes present a unique challenge, as they can fool both humans and computers, which makes it difficult to moderate this content.

While the private sector is taking this concern seriously, and companies such as Google, Adobe, and Meta have announced significant partnerships with academic researchers to explore technical solutions, current deepfake detection technologies, such as digital watermarks, embedded metadata, and uploading media to a public blockchain, have limited effectiveness.[25] This makes nontechnical solutions focused on limiting the spread of deepfakes essential.

The solution: Policymakers should implement policies that seek to stop the distribution of this content. There is currently no federal law criminalizing nonconsensual pornography, though such laws exist in 48 states and the District of Columbia and the Violence Against Women Act Reauthorization Act of 2022 allows victims of nonconsensual pornography to sue for damages in federal court.[26] Additionally, 16 states have laws addressing deepfakes.[27] Congress should outlaw the nonconsensual distribution of all sexually explicit images, including deepfakes that duplicate individuals’ likenesses in sexually explicit images, and also create a special unit in the Federal Bureau of Investigation (FBI) to provide immediate assistance to victims of actual and deepfake nonconsensual pornography. Moreover, most of the laws criminalizing revenge porn—intimate images and videos of individuals shared online without their permission—do not include computer-generated images and only about a dozen states have updated their laws to close this loophole. Here too Congress has an opportunity to act by creating a federal statute that prohibits such activity. The Preventing Deepfakes of Intimate Images Act introduced in May 2023 would update the Violence Against Women Act to extend civil and criminal liability to anyone who discloses or threatens to disclose digitally created or altered media containing intimate depictions of individuals with the intent to cause them harm or with reckless disregard to potential harm.[28]

2. Workforce

| # | Risk | Policy needs | Policy solution |
|---|---|---|---|
| 2.1 | AI may cause mass unemployment. | No policies needed | Policymakers do not need to focus on concerns about mass unemployment from AI adoption because the economic evidence does not support this materializing. |
| 2.2 | AI may dislocate blue collar workers. | General nonregulatory policies | Policymakers should support full employment, nationally and regionally, not just with macro-economic stabilization policies, but also with robust regional economic development policies; ensure as many workers as possible have needed education and skills before they are laid off; reduce the risk of income loss and other financial hardships when workers are laid off; and provide better transition assistance to help laid off workers find new employment. |
| 2.3 | AI may dislocate white collar workers. | General nonregulatory policies | Policymakers should ensure that job dislocation policies and programs support all workers whose jobs are impacted by automation so they can train for new jobs. They should also proactively support IT modernization in the public sector, including the adoption of generative AI. |

Issue 2.1: AI May Cause Mass Unemployment

The issue: In a 2023 discussion with British Prime Minister Rishi Sunak, tech entrepreneur Elon Musk predicted that AI would make all jobs obsolete, stating that “you can have a job if you want a job … but the AI will be able to do everything.”[29] Some economists, such as Anton Korinek, economics professor at the Darden School of Business at the University of Virginia, share Musk’s belief in a potentially jobless future. In 2023 testimony before the U.S. Senate, Korinek warned that AI systems, if able to match human cognitive abilities, could lead to the obsolescence of human workers.[30] Korinek further argued that there is about a 10 percent chance AI systems reach artificial general intelligence (AGI) in the near future, which could lead to widespread devaluation of human work in all areas.[31]

However, concerns about traditional AI leading to mass unemployment are typically based on the “lump of labor fallacy,” the idea that there is a fixed amount of work, and thus productivity growth will reduce the number of jobs.[32] The logic goes that if there is a fixed amount of work and workers can now produce twice as much as before, half of the previous workforce becomes jobless. But the data shows this is not the case. Labor productivity has grown steadily for the past century (even if that growth has been slower recently) and unemployment is near an all-time low.[33] AI will likely bring changes to the types of work people do and create disruptions, but the economy has mechanisms and institutions in place to adapt and maintain overall employment levels, as long as policymakers effectively manage these transitions. The challenge of AI is therefore not mass unemployment, but greater levels of worker transition.

The concerns about joblessness from AGI hinge on the existence of AGI, a speculative scenario that may take decades to materialize or may never fully materialize. There is no scientific consensus that it will or is likely to.

The solution: Policymakers do not need to focus on concerns about mass unemployment from traditional AI adoption because the economic evidence does not support this materializing.

Issue 2.2: AI May Displace Blue Collar Workers

The issue: Before the very recent advent of generative AI, concerns about job dislocation centered around AI-enabled automation and robotics. The main concern has been that these technologies will lead to the elimination of certain blue collar jobs because machines can perform repetitive and routine tasks more efficiently than humans, with jobs in industries such as manufacturing, data entry, and customer service being particularly vulnerable.

It is true that AI-enabled automation will eliminate some blue collar jobs, much as earlier general-purpose technologies such as the steam engine and electricity automated jobs in the past, but the first thing to note is that the current evidence of adoption shows that there is not a tsunami of destruction as some fear. Few companies that have blue collar jobs currently use AI in a significant way. In manufacturing, where the advent of AI can transform how firms design, fabricate, operate, and service products, as well as the operations and processes of manufacturing supply chains, 89 percent of manufacturers report that they are not using AI at all, according to a 2022 report from the National Science Foundation (NSF).[34] In key manufacturing industries such as machinery, electronic products, and transportation equipment, fewer than 7 percent of companies report using AI as a production technology in any capacity. The same is true in nonmanufacturing industries; fewer than 3 percent of companies in retail trade reported using AI.[35] While these numbers will grow, the rate of adoption, as with all other technologies in the past, is likely to be slow.

But more importantly, AI-enabled automation will be a net good if there are policies in place to ensure those who are dislocated transition easily into new jobs and new occupations. AI-enabled automation, and indeed all automation, allows workers to be more productive, and more productivity growth is a path to economic and income growth that benefits society. This is because better tools enable companies to produce better products and provide services more efficiently. When productivity rises, workers can earn more and companies can lower prices, both of which increase living standards.

The solution: Policymakers should ensure that workers are better positioned to navigate a potentially more turbulent, but ultimately beneficial, labor market.[36] Policymakers should support full employment, nationally and regionally, not just with macro-economic stabilization policies but also with robust regional economic development policies; ensure as many workers as possible have needed education and skills before they are laid off; reduce the risk of income loss and other financial hardships when workers are laid off; and provide better transition assistance to help laid off workers find new employment.

Issue 2.3: AI May Displace White-Collar Workers

The issue: Some people are concerned that generative AI will eliminate white collar jobs. A headline from The New York Times in August 2023 encapsulates this sentiment: “In Reversal Because of A.I., Office Jobs Are Now More at Risk.”[37]

But policymakers should not mistake technical feasibility for economic viability.[38] That a job is exposed to LLM automation does not determine whether the technology is likely to replace white collar workers or merely augment their skills.[39] Tools such as ChatGPT might be able to draft a legal document in half the time a human legal secretary can, but that doesn’t necessarily mean law firms can or should replace their staff with LLMs, as these tools are still at a stage where they can misrepresent key facts and cite evidence that doesn’t exist; they still need humans to verify and check their outputs. Instead, AI can revalorize the jobs still performed best by humans, such as nursing and teaching, making people’s skills more valuable and supplementing a diminishing workforce.[40] David Autor, an economics professor at Massachusetts Institute of Technology (MIT) who has spent his career exploring how technological change affects jobs, wages, and inequality, underscored this point when he wrote that “the unique opportunity that AI offers to the labor market is to extend the relevance, reach, and value of human expertise.”[41] Moreover, other MIT researchers published a recent paper examining the productivity effects of ChatGPT on mid-level professional writing tasks and found that using the chatbot increased not only productivity but also job satisfaction.[42]

The solution: Policymakers should ensure that job dislocation policies and programs support all workers whose jobs are impacted by automation so they can train for new jobs, including through regional economic development policies, skills retraining policies, and transition assistance policies.

Policymakers should also proactively support IT modernization in white collar roles in the public sector, including the adoption of generative AI, to ensure workers reap the productivity, efficiency, and societal gains. The federal government struggles with a variety of challenges, such as slow services and backlogs, significant administrative burden and bureaucratic processes, and impending budget constraints. Taking advantage of the new tools at its disposal, including generative AI, will boost mission delivery, help reduce the perceived risk of the technology, and increase domestic demand for AI.[43]

3. Society

| # | Risk | Policy needs | Policy solution |
| --- | --- | --- | --- |
| 3.1 | AI may have political biases. | General nonregulatory policy | Policymakers should treat chatbots like the news media, which is subject to market forces and public scrutiny, but is not directly regulated by the government when it comes to expressing political perspectives. |
| 3.2 | AI may fuel deepfakes in elections. | General regulation | Policymakers should update state election laws to make it unlawful for campaigns and other political organizations to knowingly distribute materially deceptive media. |
| 3.3 | AI may manipulate voters. | AI-specific nonregulatory policy | Policymakers should update digital literacy programs to include AI literacy, which teaches individuals to understand and use AI-enabled technologies. |
| 3.4 | AI may fuel unhealthy personal attachments. | No policy needed | Not enough evidence of impacts to society yet. |
| 3.5 | AI may perpetuate discrimination. | AI-specific nonregulatory policy | Policymakers should support the development of tools that help organizations provide structured disclosures about AI models and related data. |
| 3.6 | AI may make harmful decisions. | AI-specific regulation | Policymakers should consider prohibiting the government from using AI systems in certain high-risk, public sector contexts. They should upskill regulators with better AI expertise and develop tools to monitor and address sector-specific AI risks, as the United Kingdom has done. |

Issue 3.1: AI May Have Political Biases

The issue: Both sides of the aisle accuse AI companies of designing tools that reflect the partisan views of the companies’ leadership. The most pervasive concerns come from conservatives who argue generative AI systems display a liberal bias, and cite plenty of anecdotal evidence to back up their claims. One of the most oft-reported anecdotes in early 2023 was a claim made on microblogging site X that said ChatGPT wrote an ode to President Biden when prompted but declined to write a similar poem about former President Donald Trump.[44] More recently, Google decided to block its AI image generator Gemini from generating images of people after it was criticized for depicting specific white figures, such as the U.S. Founding Fathers or German soldiers, as people of color.[45]

However, there is limited academic research into whether generative AI systems display anti-conservative bias, and some of the research supporting concerns of anti-conservative bias has been heavily critiqued. For instance, a paper published in the social science journal Public Choice found that ChatGPT was more predisposed to answer in ways that aligned with liberal parties internationally, but when other researchers replicated its prompts in a different order, ChatGPT exhibited bias in the opposite direction, in favor of Republicans.[46] That is not to say chatbots do not exhibit political biases. They very well might lean toward certain ideologies or orientations in their answers either intentionally or inadvertently, but it would be impossible to build an “unbiased” chatbot because bias itself is relative: what one person considers neutral, another might not.[47] Some bias in generative AI systems may be the unintentional result of attempts to implement technical safeguards. Google’s AI image generator, for instance, was designed to maximize diversity in an effort to prevent the system from amplifying racial and gender stereotypes, but the design resulted in an overcorrection.[48]

The solution: First Amendment protections place limits on what policymakers can do to regulate AI chatbots’ answers on political speech. The best course of action is for policymakers to treat chatbots like the news media, which is subject to market forces and public scrutiny but is not directly regulated by the government when it comes to expressing political perspectives.[49] The availability of open source AI models means people of all political backgrounds can create their own custom AI models and evaluate potential biases in their responses. Independent third-party testers can also evaluate proprietary chatbots to see the extent to which they are biased, much like media watchdog organizations scrutinize the news media. For instance, in January 2023, a team of researchers at the Technical University of Munich and the University of Hamburg posted a preprint of an academic paper explaining how they had prompted ChatGPT with 630 political statements and claimed to uncover the chatbot’s “pro-environmental, left-libertarian ideology.”[50] Policymakers can foster oversight and accountability by funding more research, through NSF, into how to measure political bias in AI models.

Issue 3.2: AI May Fuel Deepfakes in Elections

The issue: Individuals or organizations seeking to influence elections, including foreign adversaries, may exploit advances in generative AI to create realistic media that appears to show people doing or saying things that never happened, a type of media commonly referred to as “deepfakes.” Deepfakes have the potential to influence elections. For example, voters may believe false information about candidates based on fake videos that depict them making offensive statements they never made, thus hurting their electoral prospects. Similarly, a candidate’s reputation could be harmed by deepfakes that use other people’s likeness, such as a fake video showing a controversial figure falsely supporting that candidate.[51] For example, in June 2023, Florida Governor Ron DeSantis’s campaign shared an attack ad showing fake AI-generated images of his primary opponent, former President Donald Trump, hugging former health official Dr. Anthony Fauci.[52] Finally, if deepfakes become commonplace in elections, voters may simply no longer believe their own eyes and ears, and they may distrust legitimate digital media showing a candidate’s true past statements or behaviors.

Policymakers are rightly concerned that bad actors will exploit advances in generative AI to influence elections. The public and private sectors have already launched multiple initiatives to create technical solutions to address deepfakes, including research to identify fake content and the development of standards to improve attribution for authentic content.[53] But focusing exclusively on technical interventions, as many proposed legislative bills seek to do, will not comprehensively address the risk, though some technical interventions are worthwhile.

The solution: State lawmakers should update state election laws to make it unlawful for campaigns and other political organizations to knowingly distribute materially deceptive media that uses a person’s likeness to injure a candidate’s reputation or manipulate voters into voting against that candidate without a clear and conspicuous disclosure that the content they are viewing is fake. Such a requirement would prevent, for example, an opposing campaign from running advertisements using deepfakes without full transparency to potential voters that the media is fake. This transparency requirement should apply to all deceptive media in elections, regardless of whether it is produced with AI. State election laws should focus on setting rules for political organizations that create and share deepfakes, not on the intermediaries, such as email providers, streaming video providers, or social media networks, used by political operatives to share this content.[54] Policymakers should pair these rules with effective enforcement mechanisms. Otherwise, a campaign could spread deepfakes about an opponent a few days before an election knowing that no oversight or consequences would follow until after people had voted.[55]

Issue 3.3: AI May Manipulate Voters

The issue: AI is changing how candidates for elected office conduct their campaigns. In 2023, there was a smattering of examples of generative AI being used in U.S. political ads, raising concerns that AI-driven political persuasion could lead to the dissemination of manipulative content. The Democratic Party tested the use of generative AI tools to write first drafts of some fundraising messages in March 2023.[55] Some worry that political operatives could use AI to craft personalized messages to manipulate voters at scale with targeted disinformation.[56] For example, campaigns could flood voters’ social media feeds with AI-created political propaganda designed around their interests. However, while AI may make this problem more acute, the core of the issue is electoral harms from deceptive political outreach and advertising, not specific technologies.

The solution: First Amendment protections place limits on what policymakers can do. The best course of action at this time is for policymakers to update digital literacy programs to include AI literacy.[57] AI literacy teaches individuals to understand and use AI-enabled technologies. Whereas existing digital literacy programs might teach individuals how to use a search engine effectively, how to evaluate different sources, and how to interpret statistics, AI literacy would help individuals understand how to spot deepfakes and how to verify whether the results of a ChatGPT prompt are factual.

Furthermore, there are existing federal laws against fraudulent misrepresentation in campaign communications and existing federal civil rights laws that prohibit the use of misinformation to deprive people of their right to vote.[58] DOJ and state attorneys general should commit to enforcing existing civil rights protections related to the electoral process when AI is involved, just as U.S. law-enforcement agencies committed in early 2023 to applying existing laws on civil rights, fair competition, consumer protection, and equal opportunity to AI systems.[59] Congress and state policymakers should support these efforts by allocating funding for law enforcement to explore how best to safeguard the electoral process in new technological contexts.

Issue 3.4: AI May Fuel Unhealthy Personal Attachments

The issue: AI companions, which are AI systems designed to interact with humans in a way that mimics companionship or friendship in the form of chatbots, virtual assistants, or even physical robots, are raising concerns about isolation and the formation of unrealistic societal expectations. Some experts are concerned that relying on AI companions may hinder individuals from forming genuine human relationships, leading to increased social isolation. This isolation could have negative effects on mental health and well-being.[60] Other experts, such as Dorothy Leidner, who teaches business ethics at the University of Virginia, worry that the idealized representations in physical appearance and emotional responses that AI companions present could lead to a distorted perception of what is considered normal or desirable in human interactions, impacting broader cultural expectations in relationships and behavior.[61]

Speculating about the role of AI in loneliness is not surprising, as a Washington Post series on technology and loneliness states that “one of our national pastimes is guessing who or what is responsible for loneliness, the ancient human condition. Is it social media? Remote work? The nuclear family? Not enough sidewalks?”[62] But the question of whether any technology, including AI, impacts loneliness is too broad and lacks the necessary nuance to understand its specific effects. It’s crucial to consider specific types of technology, who is using them, and their purposes. For instance, a 2023 study from Stanford University researchers finds that about 50 percent of older adults believe using virtual reality (VR) alongside their caregivers is “very or extremely” beneficial to their relationship.[63] Meanwhile, social media apps such as TikTok have become a resource for parents to discuss loneliness, online dating apps have become the most common way romantic couples meet, and friend-making apps are becoming a boon for young adults.

The solution: AI companions are not inherently detrimental to social well-being. Policymakers should recognize the diverse ways in which this technology could impact loneliness and social connections. There is little to no research on which segments of society are using AI companions and for what purposes, and therefore not yet enough evidence to get a sense of the impacts on society. Without sufficient data to understand the full scope of those impacts, policymakers should exercise caution in their approach, lest they inadvertently hinder unforeseen benefits.

Issue 3.5: AI May Perpetuate Discrimination

The issue: A concern about AI systems is that they may mirror and amplify existing biases and discrimination in society, leading to unfair and unjust outcomes. Biased algorithms may produce results or decisions that systemically treat certain individuals less favorably than similarly situated individuals due to a protected characteristic such as their race, sex, religion, disability, or age.[64] There have long been calls for policymakers to mitigate these risks by requiring algorithmic transparency, explainability, or both; or to create a master regulatory body to oversee algorithms.

While the concern about biased AI is legitimate, U.S. regulators have acknowledged that existing civil rights laws apply to AI systems and new authorities are not necessary to effectively oversee the use of this technology at this time.[65] Many new regulatory solutions proposed thus far would be inadequate. Some are impractical, such as those that would require audits for all high-risk AI systems, because the ecosystem for AI audits is still immature. Others would stifle innovation, such as by prohibiting the use of algorithms that cannot explain their decision-making, even when those algorithms are more accurate than ones that can.[66]

The solution: Policymakers should focus on supporting the development of tools that help organizations provide structured disclosures about AI models and related data to bolster much-needed information flows along the AI value chain that could identify and remedy harmful bias and generally foster AI accountability.[67] The National Telecommunications and Information Administration’s (NTIA’s) AI Accountability Report in 2024 rightly recommends that federal agencies improve standard information disclosures using artifacts such as datasheets, model cards, system cards, technical reports, and data nutritional labels.[68] Policymakers should also help mitigate issues of bias emanating from source data. Mandating specific data for AI training, as some countries have proposed, is problematic and typically at odds with the technical realities faced by AI developers. Instead, policymakers should proactively improve datasets by ensuring the fair and equitable representation and use of data for all Americans, including improving federal data quality by developing targeted outreach programs for underrepresented communities; enhancing data quality for non-government data; directing federal agencies to update or establish data strategies to ensure data collection is integrated into diverse communities; and amending the Federal Data Strategy to identify data divides and direct agency action.[69] Federal agencies should support the development of best practices for dataset labeling and annotation, and aid the development of high-quality, application-specific training and validation data in sensitive and high-value contexts, such as in healthcare and transportation.

Issue 3.6: AI May Make Harmful Decisions

The issue: As the public and private sectors increasingly rely on algorithms in high-impact sectors such as consumer finance and criminal justice, a flawed algorithm may potentially cause harm at higher rates. When these algorithms make mistakes, the sheer volume of their decisions could end up significantly amplifying the potential negative impact of these flaws. Consider a human decision-maker at a bank evaluating loan applications. That person evaluates only a handful of loan applications per week and routinely makes errors while doing so. However, a flawed algorithm misevaluating hundreds of loan applications per week across an entire bank branch would clearly cause harm on a much larger scale.

In many cases, flawed algorithms hurt the organization using them. Banks making loans would be motivated to ensure their algorithms are accurate because, by definition, errors such as granting a loan to someone who should not receive one or not granting a loan to someone who is qualified cost banks money. However, using an AI system to make decisions in certain contexts may introduce more potential for harm when multiple entities use the same system, even if an algorithmic tool is more accurate than human evaluators and less error prone than other tools on the market.[70] This is somewhat analogous to monoculture in agriculture, wherein a lack of diversity in crops can make the entire system vulnerable to widespread failures. For example, imagine multiple banks using the same algorithmic model to screen and assess loan applications. Even though it might be rational for each bank in isolation to adopt an algorithm, overall accuracy can fall below that of human evaluators when multiple entities use the same one. While this seems counterintuitive, the potential for this result derives from how probabilistic properties of rankings work. The key point is that, in some contexts, independence may be more important than accuracy for reducing errors. That said, algorithmic monoculture could be desirable in some settings. It may be the case that in other high-risk areas, multiple decision-makers using a single centralized algorithmic system may reduce errors. In education, for instance, economists have found outcomes have improved as algorithms for school assignment have become more centralized.[71] Perhaps in healthcare, the allocation of scarce resources by different hospitals would be best done if they all used the same algorithmic systems. Perhaps not. It isn’t known because it has not been studied yet.
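
The point about independence can be illustrated with a deliberately simplified sketch. It is not the ranking model from the research cited above. The function name, the error rates, and the number of banks are all hypothetical values chosen for illustration. It compares the chance that a qualified applicant is wrongly rejected by every bank when all banks share one model with the chance when each bank's evaluator errs independently.

```python
def prob_rejected_by_all(error_rate: float, n_banks: int, shared_model: bool) -> float:
    """Chance that a qualified applicant is wrongly rejected by every bank.

    Hypothetical simplification: each evaluation wrongly rejects a qualified
    applicant with probability `error_rate`.
    """
    if shared_model:
        # One shared model makes one decision, so its error repeats at every bank.
        return error_rate
    # Independent evaluators must each make the same mistake separately.
    return error_rate ** n_banks


# Assumed numbers: a shared algorithm with a 5% error rate versus
# less accurate human evaluators with a 10% error rate, across 3 banks.
shared = prob_rejected_by_all(0.05, 3, shared_model=True)
independent = prob_rejected_by_all(0.10, 3, shared_model=False)
print(f"shared model: {shared:.3f}, independent evaluators: {independent:.3f}")
```

Under these assumed numbers, the shared model shuts a qualified applicant out of all three banks about 5 percent of the time, while the independent evaluators do so about 0.1 percent of the time, even though each evaluator is individually less accurate. Real lending decisions involve correlated human errors and competition between banks, which this sketch ignores.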

The solution: Policymakers should investigate how different factors affect desired outcomes such as fairness in high-stakes public sector contexts, where market forces are muted and the cost of errors falls largely on the subject of the algorithmic decision. Where there is evidence that consumer welfare is significantly lowered, regulators should consider prohibiting the government from using AI systems for such decisions. Policymakers should also invest in upskilling regulators in AI expertise and developing tools to monitor and address sector-specific AI risks, as the United Kingdom has done. Doing so will better equip policymakers to establish and enforce sector-specific rules for AI where necessary, such as potential transparency or reporting requirements.[72]

4. Consumer Concerns

| # | Risk | Policy needs | Policy solution |
|---|---|---|---|
| 4.1 | AI may exacerbate surveillance capitalism. | No policy needed | Rather than pushing for restrictions on targeted advertising, policymakers and civil society should allow the private sector to do what it does best: innovate and develop novel technologies that improve welfare. |

Issue 4.1: AI May Exacerbate Surveillance Capitalism

The issue: A November 2023 op-ed in the Financial Times reads, “We must stop AI replicating the problems of surveillance capitalism.”[73] It warns that AI is making it easier for large tech companies to monetize and profit from the collection, analysis, and use of personal data and user behaviors—an issue dubbed “surveillance capitalism,” as detailed in Shoshana Zuboff’s book of the same name.[74] When it comes to AI, the concern is that companies will be able to better commodify user data and exploit consumers even more than they already do because algorithms will enable them to better analyze user data, better anticipate user preferences, and better personalize user experiences. One of the chief ways powerful companies are doing this, critics say, is by using algorithms and personal data for targeted advertising, trampling consumer privacy and rights.

But despite claims that targeted ads are a massive intrusion on consumer privacy, most ad platforms deliver these ads to Internet users without revealing consumers’ personal data to the advertisers. And critics of targeted advertising do not acknowledge the ample benefits of personalization to advertisers, publishers, and consumers alike, especially how these ads fund the Internet economy.[75] Indeed, targeted online ads form an essential part of the digital economy: Advertisers can link consumers to specific queries and interests and then show them relevant ads as they visit different websites. This has three positive effects: First, consumers see ads for items that are likelier to be relevant to them than the nontargeted ads they encounter in traditional media. Second, advertisers spend their marketing budgets on ads that are likelier to generate a response from the audience, which makes their ad spend more cost-effective and affordable than traditional forms of marketing. This is why personalized ads have been a godsend to small businesses: Millions of enterprises benefit from being able to show their wares to interested customers, rather than wasting money on ads shown to uninterested audiences. Third, websites and app publishers can sell inventory on their sites to advertisers, earning them valuable income and allowing them to offer content and services to users for free.

The solution: Policymakers should not introduce laws that ban targeted advertising, as doing so would hurt consumers, businesses, and publishers. Rather than pushing for restrictions on targeted advertising, policymakers and civil society should allow the private sector to do what it does best: innovate and develop novel technologies that improve welfare for everyone, including publishers (who can continue to earn billions in advertising income), consumers (who can obtain the benefits of free, ad-supported apps and websites, and who prefer to see ads tailored to their needs rather than being blanketed with irrelevant messages), and advertisers (who can continue to access affordable, effective ads, instead of relying on the kinds of pre-digital marketing that help only large brands).[76]

5. Market Concerns

| # | Risk | Policy needs | Policy solution |
|---|---|---|---|
| 5.1 | AI may enable firms with key inputs to control the market. | No policy needed | There is no evidence of significant entry barriers to the AI market. If this should change, antitrust policy is already capable of handling most clear threats to competition. |
| 5.2 | AI may reinforce tech monopolies. | No policy needed | Antitrust agencies already have the powers they need to stop problematic acquisitions and partnerships, but they should recognize that vertically integrated AI ecosystems are not inherently problematic and can have procompetitive effects that benefit consumers overall. |

Issue 5.1: AI May Enable Firms With Key Inputs to Control The Market

The issue: The top U.S. antitrust regulators, Federal Trade Commission (FTC) Chair Lina Khan and DOJ’s antitrust chief Jonathan Kanter, recently argued that government action may be warranted to prevent large technology companies from using anticompetitive tactics to protect their standing in the emerging AI market.[77] For example, Kanter warned that the AI industry has a “greater risk of having deep moats and barriers to entry.”[78] Similarly, FTC staff penned an article in June 2023 arguing that generative AI depends on a set of necessary inputs, such as access to data, computational resources, and talent, and that “incumbents that control key inputs or adjacent markets, including the cloud computing market, may be able to use unfair methods of competition to entrench their current power or use that power to gain control over a new generative AI market.”[79]

However, the generative AI market is still in its early stages, and as of now, there is no evidence of significant entry barriers. Concerns about data being an entry barrier in AI are speculative and unsubstantiated. Firms seeking to create generative AI models can use data from various sources, including publicly available data on the Internet, government and open-source datasets, datasets licensed from rightsholders, data from workers, and data shared by users. They also have the option to generate synthetic data to train their models.[80] Some firms, such as OpenAI, Anthropic, and Mistral AI, have succeeded in creating leading generative AI models despite not having access to the large corpus of user data held by social media companies such as Meta and X.com. Additionally, companies with internal data can leverage it to build specialized models tailored to specific tasks or fields, such as financial services or healthcare. Similarly, compute resources required for training generative AI models have not proven to be an entry barrier. There are numerous players in the cloud server market that provide the necessary infrastructure for training and running AI models. For example, Anthropic used Google Cloud to train its Claude AI models.[81] In terms of chips, Nvidia’s graphics processing units (GPUs) are popular but face meaningful potential competition from firms such as AMD and Intel.[82] Other firms are also investing in chip design and manufacturing, fostering competition in the market.[83] For example, Google has invested heavily in Tensor Processing Units (TPUs), which are specialized chips designed to train and run AI models.

The solution: Competition regulations should allow the AI industry to continue to develop new and innovative products without unwarranted restrictions so that both businesses and consumers can access the benefits of AI. If there are documented cases of AI companies engaging in anticompetitive behavior, resulting in harm to consumers, antitrust authorities already can—and should—act. Antitrust policy is already capable of handling most clear threats to competition, and as the FTC itself notes, it is no stranger to dealing with emerging technologies.[84]

Issue 5.2: AI May Reinforce Tech Monopolies

The issue: A brewing concern is that large, vertically integrated firms that control the entire AI stack, from cloud infrastructure to applications, may engage in anticompetitive practices, such as excluding downstream rivals. This could involve restricting access to essential cloud resources or copying and integrating features from competitors, effectively squeezing those competitors out of the market through the integrated firms’ scale and reach. Additionally, these firms might favor their own AI products and services within their ecosystem, further limiting market access for new entrants. Instead, several competition authorities would like to see “mix-and-match” competition at and between all layers of the vertical chain rather than vertical integration.

However, a mix-and-match environment may not drive the same level of competition between generative AI models as one with vertical ecosystems.[85] Imagine a cloud provider and an AI model developer partnering in a vertically integrated system. In this setup, if the integrated system loses customers downstream (those using its AI models), it not only loses those specific sales but also faces reduced scale and revenue potential for its other services higher up in the chain (e.g., cloud services). This means that a loss in one part of the system affects the entire chain more significantly than it would in a system wherein different parts operate independently. Vertical integration can result in a competitive AI market in which several ecosystems exert pressure on each other, and supporting the emergence of new vertical ecosystems at this early stage of the AI industry could help ensure the AI market does not tip to monopoly.[86] It is also important that there are developments in both closed-source (proprietary) and open-source (accessible to the public) ecosystems, which further stimulates competition.

The solution: Antitrust agencies already have the powers they need to stop problematic acquisitions and partnerships, but they should recognize that vertically integrated AI ecosystems are not inherently problematic and can have procompetitive effects that benefit consumers overall. They should base decisions on a detailed understanding of markets, including current and future sources of innovation, and focus on increasing social welfare. Agency guidelines explain that nonprice terms also matter when evaluating a merger or acquisition, including “reduced product quality, reduced product variety, reduced service, or diminished innovation.”[87] Vertical ecosystems in the AI industry often prioritize differentiation over price competition, distinguishing themselves in the market through unique features, innovative solutions, and high-quality services. Regulators should consider this focus on differentiation when evaluating the competitive landscape of AI ecosystems.

6. Catastrophic Scenarios

|

# |

Risk |

Policy needs |

Policy solution |

|

6.1 |

AI may make it easier to build bioweapons. |

General regulation |