Secrets From Cloud Computing’s First Stage: An Action Agenda for Government and Industry

Cloud computing drives innovation and productivity across the economy, just as the electric grid did a century ago. Yet it is more capable and dynamic, and it is still in its early stages. Cloud is important not just at the firm level, but also for economic growth and global competitiveness.

KEY TAKEAWAYS


Contents

Why Cloud Computing Matters to Economic Success

Cloud Economics, Industry Structure, And Market Dynamics

Cloud Adoption Is in Early Stages—Adoption Is More Broad Than Deep

There Are Multiple Market Segments With Different Customer Needs

Cloud Providers Have Large Economies of Scale and High Fixed Costs

Customers Benefit From Declining Prices and Improved Price Transparency

The Top Three Providers Have 60 Percent Global Market Share

The Cloud Is a Platform With a Partner Innovation Ecosystem

Products Are Differentiated “Up the Stack”

Integration Across the Industry Value Chain Is Low, But Increasing

Profitability Is Similar to Large Software Companies

The Cloud Is a Good Fit for Most, But Not All, Applications

Where Cloud Computing Excels

Cloud Drives a Better Business and Technology Model

Cloud Is a Rich and Capable Platform, Not Just a Collection of Services

Cloud Lowers Switching Costs Despite Lock-In Concerns, Multi-cloud Best Practices

Cloud Boosts Agility, Speed, and Innovation

Cloud Infrastructure-as-Code Enables Automation, Productivity, and Specialization

Challenges for Cloud Success

Achieving Stronger Security and Compliance: Automation and Management

Enhancing Governance, Managing IT and Data, and Reducing Complexity

Building New Skills and Inclusivity

Making Cloud Adoption Easier

Adapting to Hybrid Cloud, the Emerging Edge, and 5G

A Policy Agenda for Cloud Computing

Federal Cloud Modernization Moon Shot

Spurring Adoption by the Private Sector

2. Improving Security and Resilience: Government-Industry Collaboration Program

4. Strengthening Data Governance: Cross-Border Data Confidence-Building Measures

5. Promoting Skills and Inclusivity via a Public-Private Training Partnership

Introduction

Cloud computing is a powerful and disruptive technology that is now breaking out into the mainstream economy, more than a decade after launching in 2006. Millions of companies are using some form of cloud computing, often from Amazon Web Services (AWS), Microsoft Azure, Google Cloud, or other providers. Successfully adopting cloud computing will be a key determinant of which countries will prosper in the global economy. Cloud computing lowers costs, creates technical and business agility, and enables innovation and digital transformation. In 2020, the global market for cloud services was $270 billion and cloud companies listed on U.S. markets had more than $1 trillion in capitalization.[1] Hundreds of new cloud services are being introduced and thousands of new venture-backed cloud companies are being formed.[2]

Yet, cloud computing is still in its early days, given more than $3 trillion in annual global information technology (IT) spending and decades of accumulated investment in traditional IT infrastructure. In short, cloud adoption is more broad than deep. Most companies use the cloud for only a small share of their IT needs, and spending on cloud computing is just 7.2 percent of annual global IT spending.[3] It’s early in this disruptive shift, and it’s important to better understand cloud computing’s successes and challenges. Cloud computing is important not only for individual companies’ success, but also for economic growth and global competitiveness. It’s what economists call a “general-purpose technology” that is pervasively used across most sectors. The cloud is becoming a platform that drives innovation and productivity across the broader economy. It represents the digital equivalent of the electric grid, only one that is more capable and dynamic.


After a brief introduction to what cloud computing is, this report focuses on the economic impact of cloud. It addresses why cloud computing matters for a country’s economic success, a question that has received less attention than the cloud’s technical and business impact. It then analyzes the economics of the cloud sector, including industry structure and market dynamics. The report identifies five areas where cloud computing excels and five challenges that need to be met to fully capture the benefits of the cloud. It concludes with a policy agenda for cloud computing. Governments will inevitably address cloud-related policy issues as cloud’s reach and power expand. Recommendations for policymakers include accelerating adoption with a federal cloud-modernization moon shot; improving security and resilience; preserving competition by encouraging application portability; strengthening governance and enabling cross-border data flows; and building workforce skills and inclusivity.

What Is Cloud Computing?

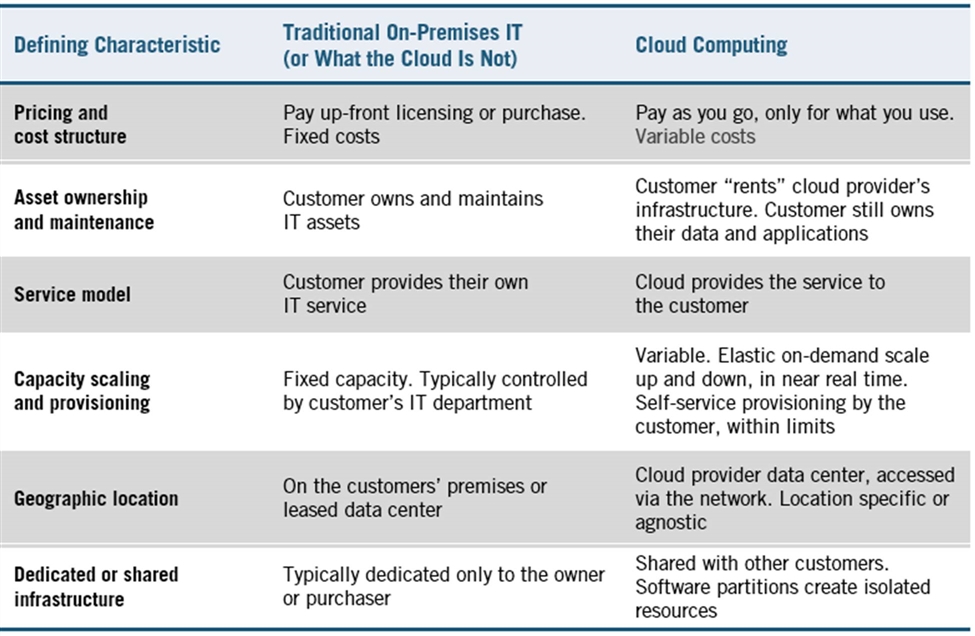

Cloud computing is a powerful technical architecture for IT, driven by technologies such as software virtualization. Just as important, cloud architecture enables new operating and business models. As a starting point, cloud computing provides IT resources (e.g., compute, storage) as a pay-only-for-what-you-use service delivered over the communications network by a third party. This is in contrast to the predominant model in which users directly “own and operate” on-premises physical IT equipment. The U.S. National Institute of Standards and Technology (NIST) states “cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources … that can be rapidly provisioned and released with minimal management effort or service provider interaction.”[4] An important capability of the cloud is that IT resources can be scaled up when demand increases, or scaled down and turned off, delivering agility gains and cost savings. We break down the cloud’s defining characteristics in figure 1.

Figure 1: What is cloud computing?[5]

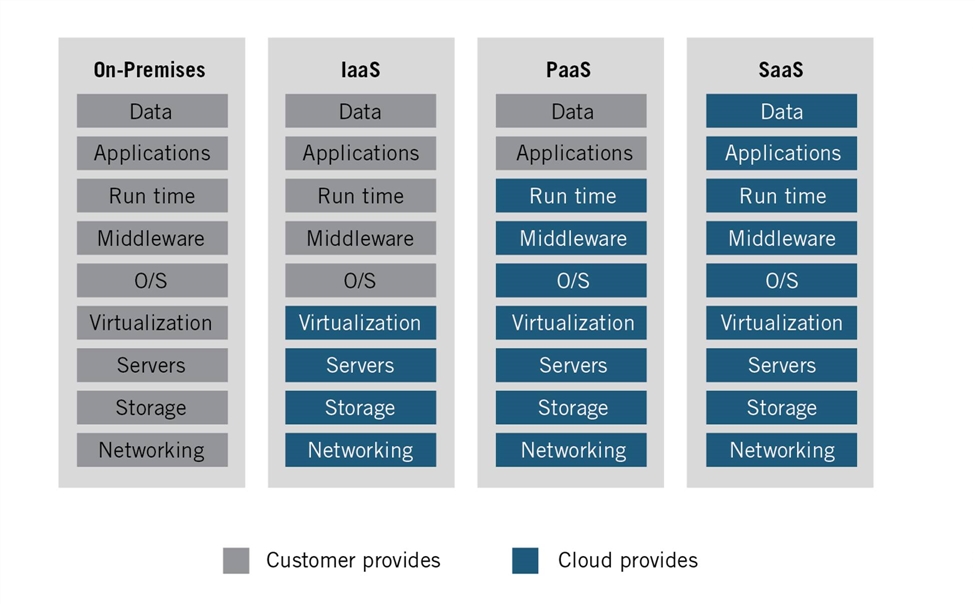
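The cost effect of scaling resources up and down with demand can be sketched with simple arithmetic. The Python example below is a minimal illustration, not a pricing model: the hourly demand profile and the `PRICE_PER_SERVER_HOUR` value are hypothetical, and it assumes the same unit price whether capacity is provisioned for the peak or used only when needed. In practice, owned hardware and cloud services are priced very differently.

```python
# Hypothetical demand: servers needed in each hour of one day.
# Quiet overnight (8 h), busy during the working day (10 h), moderate in the evening (6 h).
HOURLY_DEMAND = [2] * 8 + [10] * 10 + [4] * 6

# Hypothetical price per server-hour, assumed identical in both models.
PRICE_PER_SERVER_HOUR = 0.10


def peak_provisioned_cost(demand: list[int], price: float) -> float:
    """Capacity sized for the busiest hour and paid for in every hour."""
    return max(demand) * len(demand) * price


def pay_per_use_cost(demand: list[int], price: float) -> float:
    """Capacity that scales with demand, paid for only in the hours it is used."""
    return sum(demand) * price


if __name__ == "__main__":
    fixed = peak_provisioned_cost(HOURLY_DEMAND, PRICE_PER_SERVER_HOUR)
    elastic = pay_per_use_cost(HOURLY_DEMAND, PRICE_PER_SERVER_HOUR)
    print(f"provisioned for peak: ${fixed:.2f}")
    print(f"pay per use:          ${elastic:.2f}")
    print(f"savings: {1 - elastic / fixed:.0%}")
```

With this profile, peak provisioning pays for 240 server-hours while only 140 are used, so the pay-per-use model costs roughly 42 percent less under these assumptions.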

Three Layers of the Cloud: IaaS, PaaS, SaaS. Cloud computing is typically broken into three major parts, each covering a collection of services. Infrastructure as a service (IaaS) provides the underlying IT infrastructure such as compute, storage, and networking. Platform as a service (PaaS) provides middleware, databases, and developer and management tools to build and support applications. Software as a service (SaaS) provides a fully managed application that customers directly use such as email, financial applications, or supply chain management. While all three layers operate together, each represents a different scope of adoption of the cloud by the user (see figure 2). Each involves a different technical part (often called a “layer of the stack”) of the technology solution. As users move from IaaS to PaaS to SaaS, they shift more responsibility for IT to the cloud provider. This typically provides lower costs and more agility, but entails less control over individual IT resources. Users can and do adopt different services independently, as each user takes their own journey of cloud adoption.[6] This reflects different customer priorities and skill levels. There is no one right starting point for adopting the cloud.

For example, at the IaaS level, customers store data, run web servers, and provide back-up and recovery services in the cloud. At the PaaS level, customers often run databases in the cloud for high scalability and availability, while also shifting database maintenance to the cloud. At the SaaS level, customers run applications such as email and collaboration, human resources management, and accounting systems in the cloud. In practice, the lines between these approaches and services blur, and cloud providers’ offerings overlap across markets. There are also new so-called “XaaS” markets emerging such as Function as a Service (FaaS). These XaaS markets are important, but mostly smaller markets in earlier stages of adoption.
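The shift of responsibility from user to provider as one moves from IaaS to PaaS to SaaS can be expressed as a small data structure. This Python sketch uses a simplified version of the stack; the layer names and exact split points are illustrative assumptions, and real boundaries vary by provider and by service. It keeps the customer responsible for its own data in every model.

```python
# Simplified layers of the technology stack, from the bottom up (illustrative).
STACK = [
    "networking", "storage", "servers", "virtualization",
    "operating system", "middleware", "runtime", "application", "data",
]

# Position in STACK where customer responsibility begins; layers below it are
# managed by the cloud provider. The split points are simplified assumptions.
CUSTOMER_STARTS_AT = {
    "on-premises": 0,  # the user owns and operates everything
    "IaaS": 4,         # provider runs infrastructure up through virtualization
    "PaaS": 7,         # provider also runs the OS, middleware, and runtime
    "SaaS": 8,         # provider runs the application; the user still owns its data
}


def provider_managed(model: str) -> list[str]:
    """Layers the cloud provider operates under the given model."""
    return STACK[:CUSTOMER_STARTS_AT[model]]


def customer_managed(model: str) -> list[str]:
    """Layers the customer still operates under the given model."""
    return STACK[CUSTOMER_STARTS_AT[model]:]


if __name__ == "__main__":
    for model in CUSTOMER_STARTS_AT:
        print(f"{model:12} customer manages: {', '.join(customer_managed(model))}")
```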

Figure 2: Different approaches to the cloud have different scopes of adoption[7]

Another distinction is between public and private clouds, which use similar technology.[8] The major difference is that public clouds offer services to the general public, with the same cloud infrastructure shared by many different entities, whereas private clouds are typically limited to a single organization. Private clouds are usually smaller in scale and can trail the cost and performance of public clouds. “Hybrid cloud” refers to combining traditional on-premises IT with public clouds. We discuss the benefits and limits of hybrid cloud later in this report.

This paper focuses on public cloud IaaS and PaaS. The primary providers of IaaS and PaaS are the same. IaaS and PaaS also share similar characteristics: Both are underlying technical resources that support the application, and both are predominantly used by IT managers and software developers rather than end-users. The IaaS and PaaS markets are moving closer together as providers offer services that combine their functionality.[9] In contrast, SaaS is led by a different set of software providers (e.g., Salesforce, Workday, ServiceNow), and end users interact directly with the application. While SaaS takes advantage of and runs on top of IaaS and PaaS infrastructure, SaaS’s core value added is the application itself.[10]

This paper also focuses on enterprise cloud markets that serve businesses, governments, and organizations, rather than individual consumers. While companies such as Facebook and Twitter use similar cloud technologies and run, in part, on top of enterprise cloud providers, they are consumer-focused companies. For the most part, they do not offer their services “wholesale” for other businesses to build on, but rather effectively offer SaaS applications to consumers. The consumer cloud companies also have different business models, often driven by advertising spending. This is not to say only enterprise providers define the cloud; a wide array of services offered by many others make up the cloud. But because enterprise and consumer cloud markets raise different issues, this paper focuses on the enterprise market.

Why Cloud Computing Matters to Economic Success

IT is the foundation of a modern economy. IT underpins the operations and workflows across every facet of an organization. Companies in every industry use IT to develop products, orchestrate supply chains, drive the selling process, run operations, and manage every step of the business. Every government uses IT to provide citizen services, from health care to education, and to manage back-office operations. In fact, 28 percent of U.S. business investment is in IT, and the U.S. federal government spends over $100 billion annually on IT.[11]

Cloud computing is an emerging technology architecture that is driving growth, productivity, and innovation. New computing architectures come in waves.[12] The mainframe dominated in the 1960s and 1970s, the PC in the 1980s, client-server in the 1990s, the Internet in the 2000s, and cloud computing took off in the 2010s and is now gaining scale. Earlier technology waves drove productivity gains and economic growth.[13] Each new architecture builds on the prior one and delivers lower cost and more capability. Each wave deepens IT penetration of the economy, reaching more users and integrating IT with more economic activity. While cloud computing technology is new, it builds on a technical lineage that includes network computing, grids, and distributed computing. Further, cloud computing is integral to new IT-driven business developments that have broad economic impact. For example, technologies such as artificial intelligence and machine learning (AI/ML) and the Internet of Things (IoT) depend on robust computing and are being built directly into the cloud infrastructure and offered as cloud services. The cloud will become the platform for AI/ML.

Cloud computing is a better economic model for IT. It’s the digital equivalent of the electric grid, only more powerful. Cloud computing finally delivers on the promise of IT by shifting the hard work of operating IT infrastructure to the cloud provider, with lower costs and better performance. It represents the industrialization of IT, in which the cloud effectively standardizes the IT infrastructure and outsources it to a cloud service provider. Cloud computing applies modern statistical process controls to IT, much as modern manufacturing does. Customers no longer need to buy, deploy, operate, maintain, and own their IT infrastructure. The public cloud operates IT better, so customers don’t have to.

First, cloud computing has lower costs. An International Data Corporation (IDC) study sponsored by Amazon Web Services (AWS) shows cloud infrastructure has 31 percent lower operational costs than comparable on-premises infrastructure, with even greater savings when people and downtime costs are included.[14] Greater scale and technical innovation lead to rapid quality-adjusted price declines: according to economists Byrne, Corrado, and Sichel, prices fell about 7 percent per year for computing power, 12 percent per year for database, and 17 percent per year for storage.[15] The cloud is also more automated, enabling new capabilities and whole IT infrastructures to be deployed and managed with only a few keystrokes. IDC estimated, in an AWS-sponsored report, that IT staff efficiency is 62 percent greater in the cloud, while developer productivity is 25 percent greater.[16] Second, cloud has better capital efficiency. It drives greater capacity utilization by aggregating demand across many customers and sectors, each with its own usage profile. On-premises servers typically run at about 20 percent utilization, whereas cloud utilization typically well exceeds 50 percent. This reduces server over-provisioning and drives better asset efficiency. Third, the cloud has better energy efficiency and a lower carbon footprint. Google says its data centers use about one-sixth as much overhead energy for every unit of IT equipment as the average data center does.[17] These efficiency gains continue to grow.[18]
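The capital-efficiency point follows from simple arithmetic: the capacity needed to carry a given average load is that load divided by the utilization rate. The sketch below applies the 20 percent and 50 percent utilization figures cited above to a hypothetical workload; the 1,000-core average load and the 32-core server size are illustrative assumptions.

```python
import math


def servers_needed(avg_load_cores: float, cores_per_server: int, utilization: float) -> int:
    """Servers required to carry an average load at a given utilization rate.

    Total capacity = average load / utilization, rounded up to whole servers.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    capacity_cores = avg_load_cores / utilization
    return math.ceil(capacity_cores / cores_per_server)


if __name__ == "__main__":
    load, cores = 1_000, 32  # hypothetical workload and server size
    on_prem = servers_needed(load, cores, 0.20)
    cloud = servers_needed(load, cores, 0.50)
    print(f"at 20% utilization: {on_prem} servers")
    print(f"at 50% utilization: {cloud} servers")
```

Here the fleet shrinks from 157 to 63 servers, about 2.5 times fewer, for the same work.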

On an operating level, even large, sophisticated organizations struggle to implement and upgrade IT.[19] IT is not most companies’ or governments’ core competency, or even purpose. Each customer has to implement and operate its own IT systems. For large mission-critical systems such as finance, customer records, and supply chains, implementation can take years. For example, HP implemented an application upgrade in 34 systems around the world, but the 35th failed, causing the company to miss its quarterly financial goals and its stock price to collapse.[20] In another example, the U.S. Department of Veterans Affairs initially spent $1.1 billion over five years on an electronic health record system that it was not able to implement.[21] In large part, this is because each enterprise’s IT environment is different, complicated, and brittle. Over $1 trillion globally is spent on people-based IT services each year to make these complex systems work. Even when successfully deployed and operated, traditional on-premises IT rapidly becomes out of date, causing a build-up of “technical debt” that needs to be modernized and replaced. Users then have to implement IT all over again. And IT implementation and operations are the easy part, relative to the challenge of getting real business value or government-mission value from IT. As discussed in more detail below, cloud computing is simply a better way to deliver most IT.

Cloud computing drives specialization and the division of labor, enabling the business, operations, services, and everything that uses IT to become much better. The cloud is not only a cheaper and better way of operating IT; it also enables businesses and governments to focus on their customers, their core business, and the value they add by meeting needs. One of the most powerful ideas in economics is specialization and division of labor.[22] The cloud takes specialization and division of labor to a new level in IT, wherein the cloud provider specializes in the IT infrastructure so users don’t have to. JPMorgan Chase has stronger IT capabilities than most banks, but its IT organization requires an army of 40,000 people and a $9 billion annual budget.[23] As an apt historical analogy, in the early industrial revolution, manufacturing mills were built on rivers to provide water power.[24] Each manufacturer had to generate, distribute, maintain, and own its power, similar to how most enterprises today own and operate their IT. However, after electric-power technology became good enough, manufacturing mills began outsourcing electricity to the power grid, so they could focus on running their core manufacturing processes. They specialized in what they were good at, boosting economic productivity.[25]

Cloud computing drives innovation across the economy. Because cloud is a variable cost that can be turned on and off when needed, it lowers the cost of experimentation and failure. This reduces risk and enables faster time to market for new products. The entire economy benefits, but this is especially important for smaller companies that may not be able to afford larger up-front capital purchases. The cloud also drops barriers to company formation, a key source of innovation and job growth.[26] Indeed, start-ups were some of the first users of the cloud. Most of the new Internet-based companies are cloud-native and, because they are starting fresh, their entire businesses are built on top of cloud IT infrastructure.

The larger significance of cloud computing is that it is becoming the IT platform on which the economy is built. The distributed electric grid enabled the creation of hundreds of new products such as consumer appliances, and whole new industries such as radio and television broadcasting. As we will discuss, companies today (e.g., Twitter, Snap, Netflix) are building their whole business on the cloud, and large enterprises (e.g., Bosch, Providence Health, Procter & Gamble) are migrating existing IT to the cloud.[27] We’ll show how cloud lowers cost, boosts productivity, and shifts scarce talent to higher value-added applications and business processes. It enables enterprises to move faster and be more agile. Cloud computing is spreading across every industry, providing flexible enabling technologies from basic computing to ML. The cloud enables businesses to reinvent each step of the business, including marketing, product development, manufacturing, selling, and financial management. Cloud computing is more than just the latest IT innovation. It underpins the broader economy, directly enabling its “digital transformation.” The digital economy is powered by the cloud.

Cloud Economics, Industry Structure, And Market Dynamics

Cloud Adoption Is in Early Stages—Adoption Is More Broad Than Deep

The market for cloud services is developing quickly but is still in the early stages. IaaS officially started in 2006, and in the last 10 years, adoption has accelerated beyond early adopters and is now reaching mainstream users. Millions of organizations are using the cloud for specific applications and workloads. Measured by the number of users, cloud computing is crossing over into the mainstream. However, measured by share of total use, market penetration is low, especially for new or advanced services. AWS CEO Andy Jassy recently pegged overall cloud adoption at 4 percent of global IT spending, and Gartner data shows 7.2 percent.[28] The latest National Science Foundation (NSF) business survey data shows similarly low penetration.[29]

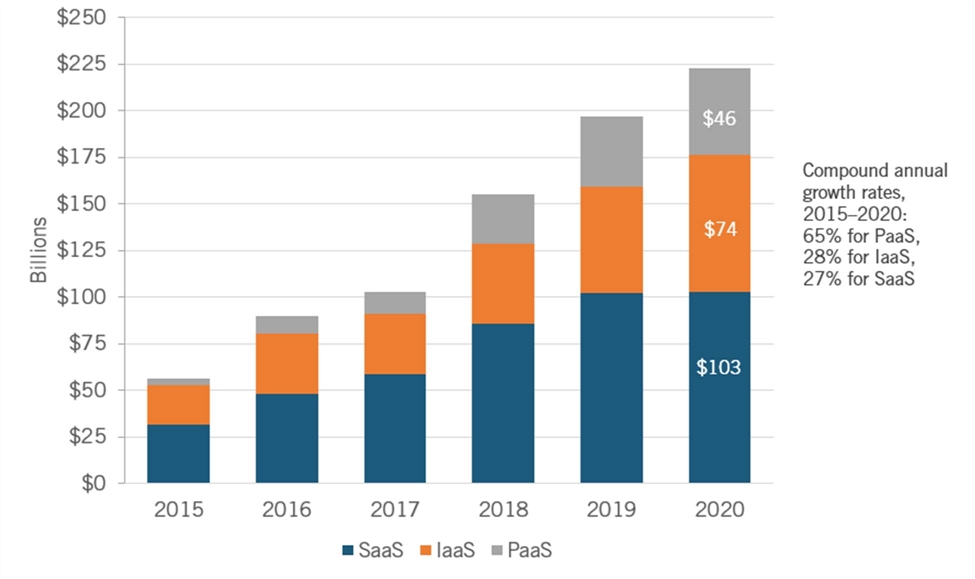

SaaS ($103 billion globally in 2020) and IaaS ($74 billion) are the two largest markets by revenue, with PaaS the smallest ($46 billion).[30] The SaaS market scaled first, building on the prior Application Service Provider model. SaaS adoption is much higher than PaaS or IaaS, exceeding 25 percent of the software application market, led by cloud-native companies such as Salesforce.com, Workday, and ServiceNow.[31] Most traditional software companies such as Microsoft, Oracle, SAP, and Adobe are moving from traditional licensed, on-premises software to the SaaS model.[32] All three markets are growing significantly faster (32 percent combined global growth rate) than is global IT spending (approximately 4 percent growth). As customers become more skilled at using the cloud, adoption grows, in turn increasing the spending, scale, and number of services used. Most customers use foundational services such as compute and storage. However, more advanced services such as ML, Internet of Things, and specialized databases still have low adoption.[33]

Figure 3: Spending on global cloud computing, 2015–2020[34]

There Are Multiple Market Segments With Different Customer Needs

Different customers are at different stages of adopting the cloud and have reached different levels of cloud maturity. The key buying factors and customer needs vary across customer maturity and market segments. Some of the earliest cloud adopters were start-ups that valued the speed of near-instant provisioning of IT, cash-conserving pay-as-you-go pricing, and access to world-class technologies. For some users, core capabilities such as lower cost, business continuity, and remote access matter most. For others, the ability to outsource IT at global scale and achieve granular management and security is key. For advanced users, cloud-native architectures and software development practices enable them to rapidly test products, innovate, and achieve superior business agility. Ultimately, cloud computing is integral to organizational change and culture, becoming a source of competitive advantage. This is most identifiable in start-ups that built cloud-native architectures from the start (Airbnb, SmugMug, Pinterest). Yet, established firms are also going all in on the cloud in sectors ranging from banking, where Capital One has closed eight data centers, to education content providers such as Blackboard, Ellucian, and Instructure.

Cloud Providers Have Large Economies of Scale and High Fixed Costs

Large economies of scale are a defining characteristic of the cloud computing business. IDC tracks $74 billion of investment in global cloud infrastructure in 2020, and the leading cloud providers each spend billions annually.[35] Microsoft and Amazon each have dozens of geographic regions and more than 150 data centers globally.[36] The providers don’t disclose cloud-only CapEx data, but it takes tens of billions of dollars to build cloud data centers globally, provision gigabit networks, and develop the software infrastructure. Opening a new region is typically an investment of more than $1 billion.[37] The competition for scarce technical talent is also fierce.

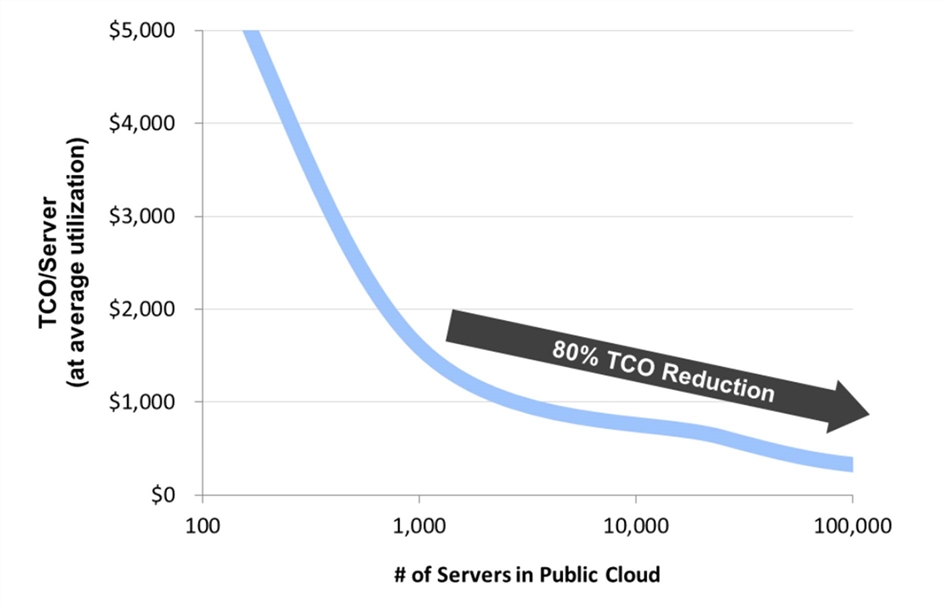

Once providers incur this big fixed cost, the average cost for each unit of compute and storage declines as volume and utilization grow (figure 4). Cloud infrastructure is heavily automated, and providers have developed proprietary knowledge about design and management. Providers scale to millions of computing cores and petabytes of data without proportional increases in staff, blowing past traditional industry benchmarks for the number of system administrators per server or database. This ultimately results in lower prices for users. Cloud providers also have greater economies of scale in purchasing, becoming the largest buyers of storage, memory, and compute. For example, cloud providers now account for one-third of the global server market, up from just single digits a few years ago.[38] In addition, there are large soft costs such as compliance certifications, which take years to achieve across the dozens of security and compliance regimes required. All of these create substantial entry barriers for IaaS platform providers. However, lower-cost, large-scale IaaS providers reduce entry barriers for PaaS and SaaS providers, which can more quickly and cheaply leverage the massive cloud IaaS infrastructure. PaaS and SaaS providers can run their products on top of IaaS, choosing to buy IaaS rather than build it.

Figure 4: Cloud computing economies of scale[39]
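The downward-sloping curve in figure 4 follows from spreading a large fixed cost over a growing volume: average cost per unit equals fixed cost divided by volume, plus the marginal cost of each unit. The figures below are hypothetical placeholders chosen only to show the shape of the curve; they are not estimates of any provider’s actual costs.

```python
def average_cost(volume: float, fixed_cost: float, marginal_cost: float) -> float:
    """Average cost per unit when a fixed cost is spread over `volume` units."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + marginal_cost


# Hypothetical inputs: a $1 billion up-front build-out and a $0.02 running cost
# per unit of compute (for example, per server-hour).
FIXED_COST = 1_000_000_000.0
MARGINAL_COST = 0.02

if __name__ == "__main__":
    for volume in (1e9, 1e10, 1e11):
        unit_cost = average_cost(volume, FIXED_COST, MARGINAL_COST)
        print(f"{volume:>15,.0f} units -> ${unit_cost:.3f} per unit")
```

Average cost falls toward the marginal cost as volume grows, which is why scale and utilization matter so much to providers.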

Customers Benefit From Declining Prices and Improved Price Transparency

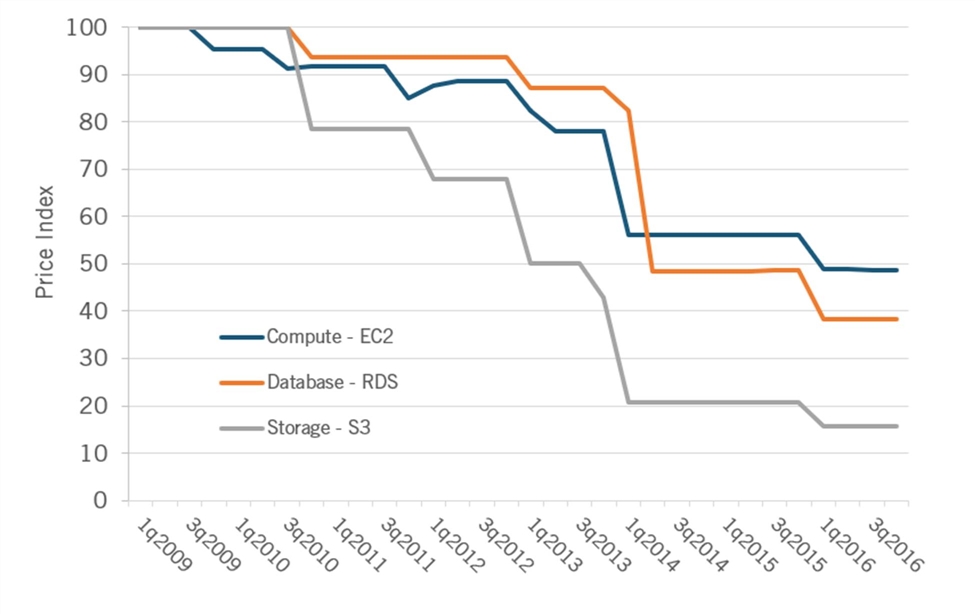

Compute, storage, and database are the largest segments of the market and show large price declines. AWS alone has cut prices over 70 times across services and regions since launching. Economists Byrne, Corrado, and Sichel analyzed published AWS prices from 2009 to 2016 and found that quality-adjusted prices fell rapidly (see figure 5).[40]

▪ Compute (EC2) prices fell on average by 6.9 percent a year from 2009 to 2016 and, more rapidly, by 10.5 percent a year on average from 2014 to 2016, once Microsoft and Google started publishing prices publicly in 2014.

▪ Storage (S3) prices fell on average by 17.3 percent a year from 2009 to 2016 and by 25.1 percent a year on average from 2014 to 2016.

▪ Database (RDS) prices fell on average by 11.6 percent a year from 2010 to 2016 and by 22.6 percent a year on average from 2014 to 2016.

Falling prices are driven by 1) cost declines from economies of scale and technical innovation, 2) competition to win over customers from traditional on-premises providers that capture more than 90 percent of IT spending, and 3) competitive rivalry among the major cloud providers. For example, in a six-month period in 2012–2013 when cloud adoption started to pick up, AWS, Microsoft, and Google made over 20 price cuts.[41]

Figure 5: Declining prices for cloud computing[42]
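Annual rates of decline compound over time. The sketch below converts the average annual declines listed earlier in this section into a cumulative price index, assuming (as a simplification) that each rate applies as a constant compound rate in every year of its period; the original study’s averaging method may differ. An index of 0.61 means prices ended at 61 percent of their starting level.

```python
def price_index_after(annual_decline: float, years: int) -> float:
    """Price level relative to the start after `years` of a constant compound decline."""
    return (1 - annual_decline) ** years


# Average annual declines from the Byrne, Corrado, and Sichel figures cited in the
# text, paired with the number of years in each period.
DECLINES = {
    "Compute (EC2), 2009-2016": (0.069, 7),
    "Storage (S3), 2009-2016": (0.173, 7),
    "Database (RDS), 2010-2016": (0.116, 6),
}

if __name__ == "__main__":
    for service, (rate, years) in DECLINES.items():
        index = price_index_after(rate, years)
        print(f"{service}: price index {index:.2f}, cumulative decline {1 - index:.0%}")
```

Under this assumption, quality-adjusted prices fell by roughly 39 percent for compute, 74 percent for storage, and 52 percent for database over their respective periods.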

Cloud service pricing has become more complicated as the number of services and pricing models have grown. But this comes with greater choice and visibility. Prices for specific services are publicly published on cloud provider websites. Pricing differs by type of service (e.g., compute) and within a service (e.g., size and mix of computing power, memory, and networking optimized for different workloads). Cloud providers also offer a growing number of pricing models in addition to the standard no-up-front-charges, pay-only-for-what-you-use model. Customers achieve even greater discounts for committing to larger volumes and longer time periods, and pre-paying. There is even a “spot market” for computing power and a secondary market with cloud brokers and resellers. As users get more confident and capable managing their cloud spending, they are able to shift their mix of usage to even lower cost “reserved” capacity, thereby reducing their overall costs even further than standard price discounts. These pricing options are more transparent than most traditional software licensing. However, with more pricing choices, customers must know both their usage patterns and how to use cost management tools to get the most benefit.
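The trade-off between pay-as-you-go and committed capacity comes down to how many hours the capacity is actually used. The sketch below assumes a simplified reserved option billed for every hour of the period whether used or not; the $0.10 and $0.06 hourly rates and the 730-hour month are hypothetical, and real commitment plans add terms such as contract length and up-front payment.

```python
HOURS_PER_MONTH = 730  # approximate average (8,760 hours / 12 months)


def on_demand_cost(hours_used: float, hourly_rate: float) -> float:
    """Pay only for the hours actually used."""
    return hours_used * hourly_rate


def reserved_cost(hourly_rate: float, hours_in_period: float = HOURS_PER_MONTH) -> float:
    """Committed capacity: billed for every hour in the period, used or not."""
    return hours_in_period * hourly_rate


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Share of hours in use above which the commitment becomes cheaper."""
    return reserved_rate / on_demand_rate


def cheaper_option(hours_used: float, on_demand_rate: float, reserved_rate: float) -> str:
    """Return the lower-cost option for a given monthly usage."""
    if on_demand_cost(hours_used, on_demand_rate) <= reserved_cost(reserved_rate):
        return "on-demand"
    return "reserved"


if __name__ == "__main__":
    od, rsv = 0.10, 0.06  # hypothetical hourly rates
    print(f"break-even utilization: {break_even_utilization(od, rsv):.0%}")
    for hours in (200, 700):
        print(f"{hours} h/month -> {cheaper_option(hours, od, rsv)}")
```

With these placeholder rates, the commitment pays off only when the capacity is in use more than about 60 percent of the time, which is why knowing one’s usage patterns matters.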

The Top Three Providers Have 60 Percent Global Market Share

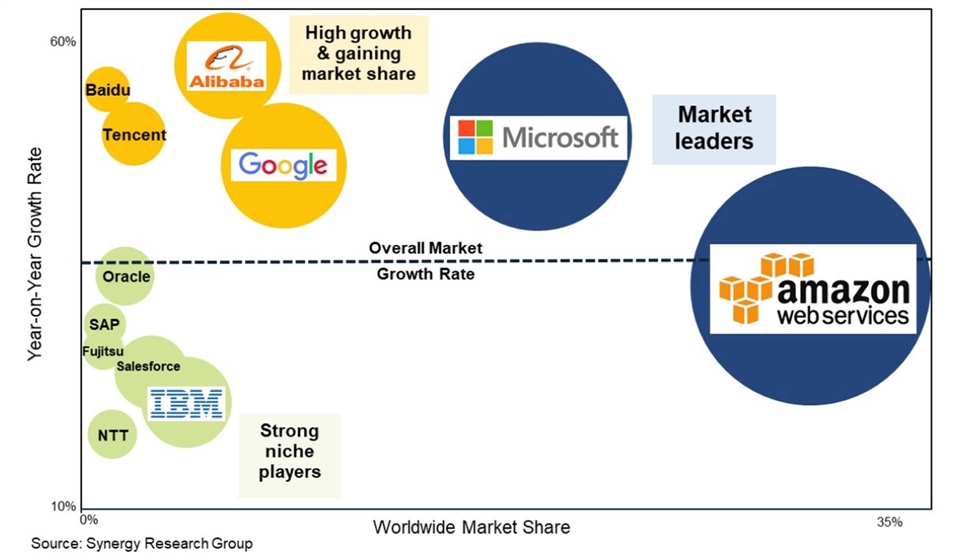

Synergy Research data measuring the combined IaaS, PaaS, and hosted private cloud revenues for Q4 2020 shows that Amazon has 32 percent share, Microsoft has 20 percent, Google has 9 percent, followed by Alibaba and IBM each at around 5 percent, and then Oracle.[43] This concentration reflects the large economies of scale previously noted. Our estimated Herfindahl-Hirschman Index (HHI), a measure of market concentration, is around 1,600, which is at the low end of “moderately concentrated” markets, according to Justice Department guidelines.[44] Note that HHI is a static snapshot and doesn’t capture market dynamics. The top three market share leaders have stayed in the same order. AWS invented the market for public cloud computing, is the leader measured by revenue, and has led the Gartner Magic Quadrant for 10 successive years.[45] Microsoft is number two, gaining 10 points of share, from 10 percent to 20 percent, from 2017 to 2020 as it pivoted to the cloud. Google and Alibaba are also making smaller share gains. Providers outside the top 10 are consistently losing share and are down to a combined 20 percent share. We examine switching costs later in this report. Each company has its own focus:

▪ AWS emphasizes technical performance and a customer-driven product roadmap, and offers unmatched breadth and depth of services. It has led the push into new cloud services from analytics to satellite data.

▪ Microsoft has built out its cloud service portfolio to narrow the gap to AWS. It leverages its existing relationships with customers, installed base of Office/Office 365 and Windows operating systems, and extensive go-to-market ecosystem of sellers and partners.

▪ Google Cloud has technical strengths, including its heritage in data and analytics. Under CEO Thomas Kurian, Google is improving its enterprise selling and support capabilities, and targeting vertical sectors.

▪ IBM emphasizes hybrid cloud computing, leveraging its large on-premises customer base. Especially with its Red Hat acquisition, IBM is focusing on multi-cloud and hybrid cloud management.

▪ Oracle is focused on large enterprises and mission-critical workloads. It leverages its proprietary and widely deployed database and enterprise application (ERP) software.

▪ VMware runs on top of AWS, Azure, Google, IBM, and other clouds but no longer offers IaaS data center hardware infrastructure. VMware leverages its leading position in on-premises virtualization and offers a common solution across the private cloud, the hybrid cloud, and the public cloud.

▪ Major telecoms (Verizon, AT&T) and legacy IT providers (HPE, Cisco, and Sun, now part of Oracle) have exited the market, unable to keep up with the large investments required in services and infrastructure. Telecoms still have a presence in “hosting” customer IT equipment.

▪ China’s state-led economy has systematically nurtured and protected Alibaba, Tencent, Huawei, and Baidu. Alibaba dominates in China and is a major player in Asia. Tencent is growing rapidly too. The Chinese government requires non-Chinese cloud providers to operate through a Chinese partner in China.

▪ Europe is trying to build its own European data infrastructure. The EU is driving the GAIA-X project and the European Cloud Initiative, in which it expects to invest €2 billion.[46]

Figure 6: Cloud provider market share and growth rate, Q1 2021 (IaaS, PaaS, and hosted private cloud)[47]

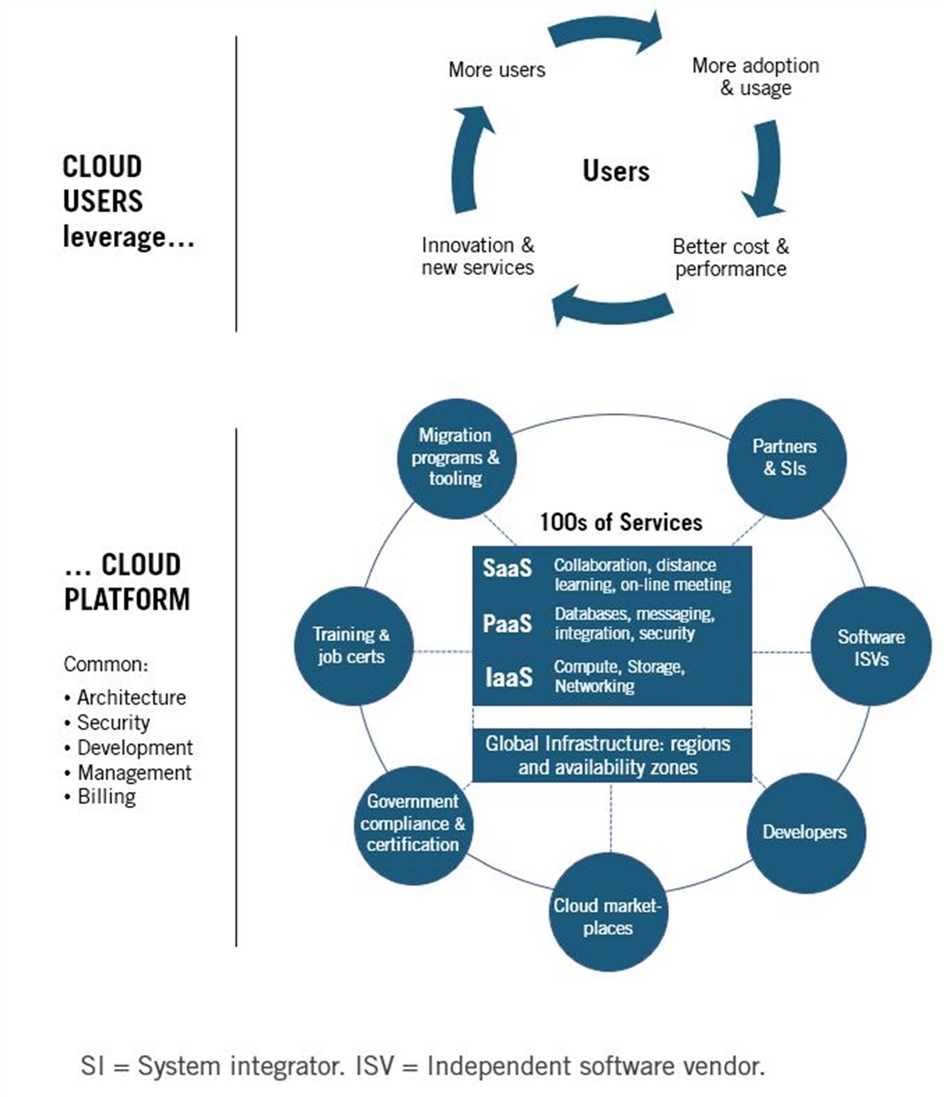
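The HHI cited in this section is the sum of the squared market shares (in percent) of all firms in a market. The sketch below applies it to the Q4 2020 shares listed at the start of the section. Oracle and the remaining providers are omitted because their individual shares are not given, so the result is a lower bound; small shares add little when squared, which is why the total stays close to the report’s estimate of around 1,600. The classification bands are assumed here to be those of the 2010 U.S. Horizontal Merger Guidelines (below 1,500 unconcentrated; 1,500 to 2,500 moderately concentrated; above 2,500 highly concentrated).

```python
def hhi(shares_percent: list[float]) -> float:
    """Herfindahl-Hirschman Index: sum of squared market shares in percent."""
    return sum(share ** 2 for share in shares_percent)


def classify(index: float) -> str:
    """Concentration bands from the 2010 U.S. Horizontal Merger Guidelines."""
    if index < 1500:
        return "unconcentrated"
    if index <= 2500:
        return "moderately concentrated"
    return "highly concentrated"


# Q4 2020 shares cited in the text (percent). Oracle and smaller providers are
# omitted because their individual shares are not listed.
SHARES = {"Amazon": 32, "Microsoft": 20, "Google": 9, "Alibaba": 5, "IBM": 5}

if __name__ == "__main__":
    index = hhi(list(SHARES.values()))
    print(f"HHI (listed providers only): {index:,}")
    print(classify(index))
```

The listed providers alone give an HHI of 1,555, just inside the moderately concentrated band.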

The Cloud Is a Platform With a Partner Innovation Ecosystem

AWS, Microsoft, Google, Alibaba, Tencent, and others enable thousands of competitors and tens of thousands of partners to leverage their underlying cloud platforms and provide more specialized services. This includes hundreds of independent software vendors (ISVs), which offer services where the cloud platforms have no offering, as well as competing offerings. For example, data analytics ISV Snowflake has a $60 billion market cap and both runs on top of and competes directly with AWS, Microsoft Azure, and Google Cloud. Similarly, VMware offers its widely used virtual machine software in partnership with AWS, Microsoft Azure, Google, and IBM, while also competing directly with them.

While cloud computing is disrupting on-premises IT environments, partners are building cloud practices to both stay relevant to their customers and participate in cloud growth. For example, Rackspace manages its customers’ accounts on AWS, Azure, and Google Cloud. All the major system integrators have built large businesses advising customers on how to move to the cloud and performing migrations. Accenture has created a dedicated business group called Accenture Cloud First with 70,000 employees, and it has $11 billion in cloud-related revenue.[48] Cloud providers are encouraging this migration by building formal partner ecosystems that offer joint market-adoption programs, technical assistance, and business incentives. They also provide resources to software developers to incentivize adoption of their tools and technologies. AWS, Microsoft, and Google have built online marketplaces wherein customers can find software, data, and services from pre-approved partners and pay for pre-tested and pre-integrated software. AWS has over 7,000 listed products and 300,000 active customers that have purchased 1.6 million subscriptions.[49] All of this gives government and enterprise customers access to innovative solutions from new and small companies they may not normally interact with, while giving small companies access to new customers.

As we’ll discuss in more detail, the major clouds are expansive platforms, each with a common architecture and management. They are developing network effects and attracting partners, and the ecosystems with the most scale and best services win. Cloud computing also reduces lock-in by providing lower switching costs and more portability than traditional IT environments. In a rapidly changing IT industry, partners have to innovate and move “higher up the stack” to products important to their customers’ businesses. History shows that products (e.g., the browser) commoditize over time and can be absorbed as features into the underlying platform. The biggest competitor to the cloud is the more than 90 percent of the enterprise IT market that remains on premises. In addition, given the early stage of cloud adoption, venture capitalists are pouring billions of dollars into new company formation, and there is an active market for cloud mergers and acquisitions. In 2020, leading VC firm Bessemer Venture Partners tracked $186 billion in private cloud investments and “record breaking cloud M&A activity.”[50]

Products Are Differentiated “Up the Stack”

Computing is often characterized as a commodity, despite the complexity of managing and securing IT. Core services such as compute and storage are relatively homogeneous and portable between IT environments. Cloud computing technologies such as virtual machines, containers (software that packages application code and its dependencies so that it runs across IT environments), and de facto standards help create interoperability and portability; the widely used open source Kubernetes container platform is one example.[51] Published cloud prices for similar compute offerings are typically very close between Azure and AWS, indicating homogeneity. Yet there are differences even in these core services. For example, AWS’s Nitro System moves virtualization and management functions onto separate dedicated hardware, and AWS also offers its custom Graviton processor; together these improve security and performance and lower costs by freeing up for customers resources that the system previously consumed. In addition, Nitro’s modularity enables faster innovation. Cloud providers are also trying to differentiate by offering 40 types and sizes of compute instances optimized for different workloads.

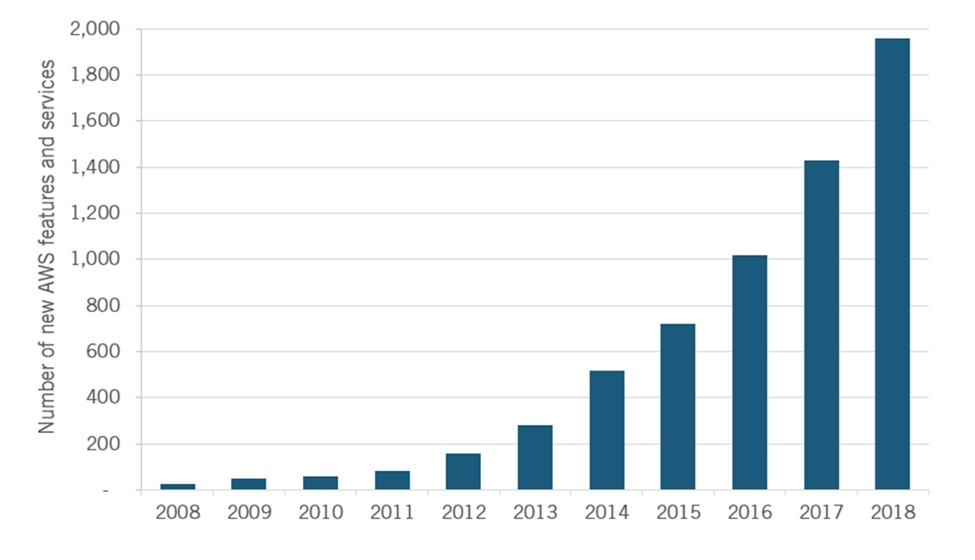

Another example is object storage, the native cloud technology for storing different kinds of data, which is often considered a cheap commodity. However, Azure, AWS, and Google are adding dozens of features and services, many of which are differentiated enough to be priced separately, including security, compliance, availability, retrieval performance, and content management. Add databases, analytics, ML, messaging, media services, security, developer tools, Internet of Things, AR/VR, and robotics, and cloud computing shows real differentiation. These higher-level differentiated services will in time become a growing share of the cloud. Though counting services is arbitrary, Azure and AWS offer more than 200 different named services. Providers are also adding many new features to these services, which itself points to differentiation (figure 7).

Figure 7: Cloud innovation

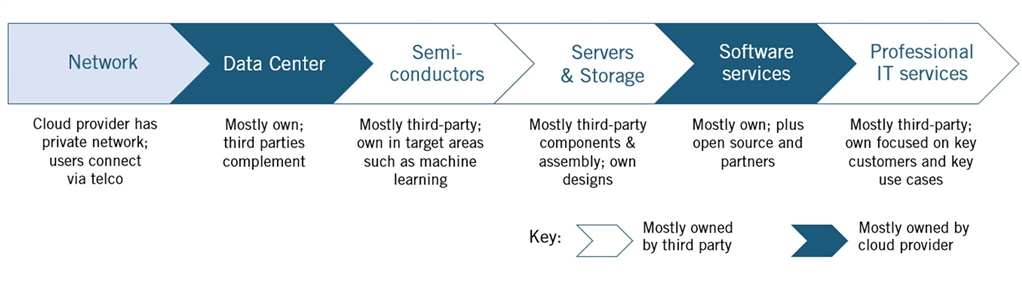

Integration Across the Industry Value Chain Is Low, But Increasing

Cloud providers purchase servers, semiconductors, memory, and storage, choosing to “buy rather than build” as their primary approach (figure 8). However, given their scale, the big three are expanding their role in the value chain with their own designs and components. A good example is semiconductors, where they design custom chips for ML to provide better performance, lower costs, and product differentiation (e.g., Google’s Tensor Processing Unit; AWS Inferentia and Trainium). They also heavily customize servers, storage, and switches for better energy and operational performance, though these are built largely from standard industry components by original equipment manufacturers. Microsoft and AWS have also designed their own branded computing hardware for edge applications, such as Azure Stack and AWS’s Outposts and Snowball, which are still in the early stages of adoption.

To improve the performance of their services, cloud providers largely own or lease, and operate, their own global private networks linking their data centers and regions. Their customers, however, purchase bandwidth from telecom carriers to reach cloud connection points. Beyond the networks themselves, the big three providers also primarily own and operate their own data centers, seeing them as a source of differentiation. Yet they also use third-party data centers to supplement capacity and enter international markets quickly. Cloud providers’ core value-add is their software. Open source also plays an important role: for some services (e.g., Kubernetes containers, Cassandra databases, Kafka event streams), cloud providers build a “managed service” on open source code. Cloud providers rely heavily on third parties for professional IT services such as consulting, workload migration, and application modernization. While providers have their own professional services staff, those teams are often reserved for key customers or technically challenging deployments.

Figure 8: Cloud value chain

Profitability Is Similar to Large Software Companies

We estimate cloud operating margins at scale are in the 30 percent range, similar to the largest software companies.[52] Operating profit margins for the largest cloud provider (AWS) were 29.8 percent in 2020. Converting the gross margin of Microsoft’s “Commercial Cloud” business to an operating margin (so it is comparable with AWS) yields 36.2 percent.[53] However, this may be overstated due to cross-subsidization between Microsoft’s Azure cloud and its traditional on-premises software. Microsoft can also decide how to categorize revenue within large, multi-product enterprise agreements that include both cloud services and traditional software. Google Cloud is not yet profitable, with a -32.4 percent operating margin in Q4 2020, though its margins have improved over the last three years. The losses reflect both the big investments Google is making to compete with AWS and Microsoft and the fact that it has not yet achieved the scale needed for profitability.[54] Note that cloud services have a different revenue recognition model than traditional licensed software, which may make them appear less profitable in margin percentage terms under GAAP (generally accepted accounting principles). Most cloud revenue is usage based, so it is recognized over time, whereas most traditional software has a large license revenue component that is recognized up front.
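To make the timing difference concrete, the following Python sketch compares two hypothetical three-year deals with the same total value ($3.6 million): a traditional contract with an up-front license plus annual maintenance, and a usage-based cloud contract billed monthly. The dollar amounts and function names are illustrative assumptions, not data from any company, and the sketch deliberately ignores the many nuances of actual GAAP revenue rules.

```python
"""Illustrative comparison of revenue-recognition timing (hypothetical figures)."""


def license_schedule(license_fee: int, annual_maintenance: int, years: int) -> list[int]:
    """Up-front license recognized in year 1; maintenance recognized each year."""
    schedule = [annual_maintenance] * years
    schedule[0] += license_fee
    return schedule


def usage_schedule(monthly_usage: list[int]) -> list[int]:
    """Usage-based revenue recognized as consumed, summed into 12-month years."""
    return [sum(monthly_usage[i:i + 12]) for i in range(0, len(monthly_usage), 12)]


if __name__ == "__main__":
    # Two hypothetical 3-year deals, each worth $3.6M in total.
    traditional = license_schedule(license_fee=3_000_000, annual_maintenance=200_000, years=3)
    cloud = usage_schedule([100_000] * 36)  # flat $100k of usage per month
    for year, (t, c) in enumerate(zip(traditional, cloud), start=1):
        print(f"Year {year}: traditional ${t:,}  cloud ${c:,}")
```

With identical totals, the traditional deal books most of its revenue in year one, while the cloud deal spreads it evenly, so early-period margins can look weaker for the cloud business even when the underlying economics are similar.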

Cloud economics are still evolving. Cloud services may have lower gross margins than traditional software because they carry substantial cost of goods sold (COGS) for energy, data centers, and network bandwidth, plus the aforementioned usage-based revenue recognition. However, the step down from gross to operating margins may be less severe. Traditional software companies have large sales and marketing costs, typically 20–25 percent of revenue. The cloud potentially has lower sales and marketing costs given cross-selling of higher value-added services from the same platform, greater scalability, and more self-service use. From an industry value chain perspective, we expect cloud to accelerate the shift in revenue and profits from hardware and components to the cloud, and from legacy software to the cloud. Over the long term, we also expect cloud to shift profits away from people-based IT services, since those services (e.g., IT outsourcing, maintaining servers) are increasingly provided by cloud automation. Over the next three to five years, however, we expect cloud computing to create offsetting demand for people-based IT services to help users migrate to the cloud, refactor applications, and manage and secure their cloud environments.

The Cloud Is a Good Fit for Most, But Not All, Applications

Cloud computing is a general-purpose technology, but there are always exceptions where the cloud is not the best fit. For example, some specialized high-performance computing (HPC) applications are not designed for the cloud’s scale-out, horizontal architecture and still run best on HPC-optimized infrastructure. And while most applications can run in the cloud, those not built on cloud-native architectures may not get its full benefits. Some workloads are very sensitive to latency and are best located close to large on-premises datasets or on an edge device such as a self-driving car; these are not yet ideal cloud workloads. Some legacy applications may simply lack a business case for moving to the cloud, and historical regulatory models may also keep some workloads on premises. However, the bulk of IT workloads are well suited to the cloud. Further, as with many newer technologies, as cloud performance increases, the workloads not suited to the cloud will become an increasingly small “island.” The cloud is not perfect for everything, but be skeptical of claims that a given workload won’t run well in the cloud.

Where Cloud Computing Excels

Cloud Drives a Better Business and Technology Model

Cloud computing uses new technologies, but just as importantly, it employs a new business model that provides compelling value for businesses and governments. The cloud is not right for all applications. However, with millions of customers, it has proven it can provide superior value in cost, productivity, and business agility. Government customers with the most stringent security needs, such as the CIA, are heavy users of cloud computing. Media companies with the biggest high-bandwidth video needs, such as Netflix and ViacomCBS, are “all in” on the cloud. Banks and brokerages with the greatest need for transactional certainty, such as Capital One, JPMorgan, and Goldman Sachs, are heavy users of the cloud. Gaming companies with the biggest need for real-time interactivity, such as Zynga, are built on the cloud. Early on, companies thought they could innovate faster and operate IT better than the cloud. Today, they’re realizing that even if they have the money and technical skills, they’re unlikely to be better at IT than the cloud, much as they once realized that the electric grid is better at providing power. Zynga’s story is especially compelling: it moved off the cloud to its own data centers, believing it could customize its infrastructure for technically demanding, game-specific requirements. It ended up moving back onto the public cloud when it found the cloud offered greater scalability, better economics, and faster innovation.[55] While cloud computing is still maturing, its direction is clear. Over the long term, we’re moving from a world in which most enterprise IT is on premises to one in which most enterprise IT is in the cloud. History shows that spending time and resources to maintain past models is unlikely to succeed. It’s better to embrace the “cloud future” than to fight it.

Cost savings are often the starting point for new technology adoption, and they enable executives to make the business case for moving to the cloud. In addition to the double-digit annual price declines shown previously, an IDC study sponsored by AWS shows 31 percent operational cost savings for cloud computing compared with similar on-premises infrastructure, and 51 percent savings when IT staff costs and unplanned downtime are included.[56] McKinsey has shown that the cloud saves costs across each step of the business system, including 5–10 percent in research and development (R&D), 10–20 percent in supply chain and manufacturing, and 15–20 percent in business support.[57] These cost savings are backed up by thousands of customer case studies and testimonials.[58] To fully achieve these savings, users must put proper governance and management in place. Nonetheless, the major drivers of these cost savings are clear:

1. Greater economies of scale and scope, boosted by technical innovation.

2. Better capacity utilization from aggregating millions of customers with different usage profiles. According to IDC, traditional servers average 20 percent utilization and, even with virtualization, typically reach only about 50 percent. Public cloud utilization is well beyond that, and it will increase as cloud spot markets grow.

3. Lower management costs from programmable infrastructure and automated tools that drive 62 percent better IT staff productivity.[59]

4. Variable costs that can scale up and down with business need rather than pay-up-front fixed costs. This avoids capacity shortages or expensive excess capacity, as well as “lumpy” upgrade cycles that are risky and often delayed.

5. Better availability, durability, and resilience through horizontal “scale-out” architectures that dynamically shift workloads to resources running in parallel. Workloads can be spread across different availability zones (up to 60 miles apart, with separate telecom, power, flood plains, and earthquake zones) and, if desired, across different countries.[60] Azure and AWS object storage is designed for at least 99.999999999 percent (11 nines) durability.
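Two of the drivers above lend themselves to quick arithmetic. The Python sketch below uses made-up demand profiles (hypothetical, not IDC data) to show why pooling customers with different peaks raises utilization: each standalone customer must provision for its own peak, while a shared pool provisions only for the peak of the combined load. It also converts 11 nines of annual durability into expected object losses, assuming losses are independent across objects.

```python
"""Back-of-the-envelope illustrations of pooled utilization and durability.

The demand profiles are hypothetical; they are not measurements of any real customer.
"""


def standalone_vs_pooled(profiles: dict[str, list[int]]) -> tuple[float, float]:
    """Utilization when each customer provisions alone vs. in one shared pool."""
    total_demand = sum(sum(p) for p in profiles.values())
    slots = len(next(iter(profiles.values())))
    # Standalone: every customer buys enough servers for its own peak.
    standalone_capacity = sum(max(p) for p in profiles.values())
    # Pooled: the provider buys enough servers for the peak of the combined load.
    combined = [sum(values) for values in zip(*profiles.values())]
    pooled_capacity = max(combined)
    return (total_demand / (slots * standalone_capacity),
            total_demand / (slots * pooled_capacity))


def expected_annual_losses(objects: int, nines: int = 11) -> float:
    """Expected objects lost per year, assuming independent per-object losses."""
    return objects * 10 ** -nines


if __name__ == "__main__":
    # Servers needed in six time slots of a day (hypothetical profiles).
    profiles = {
        "retailer": [2, 2, 4, 10, 4, 2],   # evening peak
        "bank":     [10, 8, 4, 2, 2, 6],   # morning batch peak
        "media":    [2, 4, 10, 4, 6, 2],   # midday peak
    }
    alone, pooled = standalone_vs_pooled(profiles)
    print(f"standalone utilization {alone:.0%}, pooled utilization {pooled:.0%}")

    losses = expected_annual_losses(10_000_000)
    print(f"10 million objects: {losses:.4f} expected losses/year, "
          f"about one every {1 / losses:,.0f} years")
```

In this toy example, pooling lifts utilization from roughly 47 percent to roughly 78 percent without any customer changing its workload; real gains depend on how uncorrelated customers’ peaks actually are.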

The bigger value from the cloud comes from greater agility, speed, and focus on the core business. At its simplest, the cloud outsources IT functionality and IT management that are not a core competency. Users choose which IT functions, and how many, to shift to the cloud, depending on their business needs and IT skills. They can outsource a full end-to-end application via a SaaS solution, or they can move just backup and recovery for select workloads. This enables users to move faster, innovate more quickly, and focus on their customers. We’ll discuss how cloud enables agility and speed in more detail below.

The cloud provides global reach. Even the smallest organizations can tap billions of dollars of investment in global infrastructure. Google Cloud says its service is “available in 200 countries and territories”; AWS “serves over a million active customers in more than 240 countries and territories”; and Microsoft Azure says it is “available in over 140 countries,” though its actual reach is likely well above that.[61] Organizations can provide services close to their target markets to reduce network latency, and store data in designated countries for regulatory purposes. Users can specify where their data is stored and where their computing operations run, and they can secure both with encryption and a broad range of security tools.

Cloud Is a Rich and Capable Platform, Not Just a Collection of Services

Like many newer technologies, cloud computing is rapidly improving as it matures. Further, the cloud is not just a “product.” It’s becoming a richer, more capable platform that provides all the major IT services, rapid access to new services such as Internet of Things and ML, and thousands of complementary services from a large partner ecosystem (figure 9).

Expansive services: IT and beyond. The cloud increasingly provides just about every IT-based service customers need today, and perhaps tomorrow. It goes far beyond basic services such as compute, storage, and networking. Initially, in services such as relational databases, cloud providers didn’t offer all the features and high-end performance needed by the most advanced users. Now, however, cloud providers can scale relational databases (Oracle, Microsoft SQL Server, MySQL, Postgres), often at lower cost and with comparable availability (e.g., multi-region, multi-master services). In addition to traditional relational databases, AWS offers newer database technologies optimized for different kinds of workloads, including NoSQL (unstructured data in web applications), graph (social media relationships), document stores, blockchain ledgers, and time-series databases. Beyond that, cloud providers offer solutions for new application domains, including Internet of Things, content delivery and media services, satellite ground stations, robotics, ML, and mixed reality (AR/VR).

Common architecture, security, and management across the platform. The major clouds are not just a collection of services. Within each cloud, services leverage common software development architectures, operational models, and management tools. They are built on common core technologies and provide common services, including integration, data management, security, and compliance. Cloud platforms are built with application programming interfaces (APIs), which are key to modern service-oriented architectures. APIs expose functionality in standard, reusable ways so other services in the cloud can use it. Cloud platforms also use common billing and cost-management tools. All this lowers costs, increases innovation, and expands capabilities. As a result, customers can add new services in just hours or days, without having to learn new skills or endure slow and expensive deployments.

Partner ecosystems build on the cloud platform. The major clouds are platforms so rich and deep that they can tackle IT challenges that were previously infeasible or cost-prohibitive.[62] In addition to hundreds of first-party cloud services, thousands of partners use APIs to “plug in” to the major clouds. Because APIs expose functionality in standard, reusable ways, partners build on top of and reuse the functionality of the underlying cloud platform. To extend the electric grid analogy, an API is like an electric socket: any device can plug in and receive electricity so long as its plug is built to the standard (size, dimensions, load). Cloud APIs enable a huge ecosystem of third parties to plug additional services into the platform. (Note that APIs also mitigate lock-in by making it easier to unplug.) These partner offerings increase the depth and richness of cloud offerings, making the cloud more useful.[63] The cloud platform and partner services are economic complements: each supports the other. The major clouds are ecosystems whose combined offering of platform and partners provides more value than any single company can offer. As a result, the major clouds are starting to exhibit demand-side economies of scale, called “network effects,” whereby customers and partners gravitate to the platforms that offer the most services and the biggest market.

Figure 9: Power and richness of the cloud platform

Cloud Lowers Switching Costs Despite Lock-In Concerns, Multi-cloud Best Practices

Customers have concerns about their ability to leave clouds or move workloads across providers. These concerns stem in part from historical IT industry practices. Yet cloud computing breaks down lock-in. The cloud lowers switching costs (to move to a new provider), increases portability (to move applications and data), and provides more interoperability (to exchange and use information). While the concerns are real, cloud technology, economics, and licensing reduce lock-in. Customers should use the five approaches below, combined with exit planning, to mitigate risks.

First, the cloud’s pay-as-you-go model, in which customers pay only for what they use and can ramp up and down, lowers switching costs and increases cost transparency. This stands in contrast to most traditional software agreements, which typically require license payments up front regardless of actual usage. Traditional multi-year enterprise software agreements also involve opaque cross-subsidization among multiple products. Cloud users receive granular billing that can show which resources were used by which users, in what region, and at what time. Customers should make sure they use the cloud’s built-in tools and cost alarms to manage and lower costs. As an incentive, cloud providers offer volume discounts at the users’ choice, such as Google’s straightforward “Sustained Use Discounts.” To be clear, there are still costs to moving data out of the cloud, but they are transparent, and there are tools to move the data and manage the costs.[64] Customers should also weigh the likelihood of actually needing to switch against a general concern about lock-in.
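Granular billing data is what makes per-user, per-region cost tracking and alarms possible. The Python sketch below aggregates a few hypothetical billing line items and flags users whose spend exceeds a budget. The `LineItem` fields, region names, and budget values are illustrative assumptions; real providers export billing data in their own, richer formats.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    """One hypothetical billing record; real billing exports have many more fields."""
    user: str
    region: str
    hour: int       # hour of day the usage occurred (0-23)
    service: str
    cost: float     # USD


def cost_by(items: list[LineItem], *keys: str) -> dict[tuple, float]:
    """Total cost grouped by any combination of fields, e.g. ("user", "region")."""
    totals = defaultdict(float)
    for item in items:
        totals[tuple(getattr(item, key) for key in keys)] += item.cost
    return dict(totals)


def budget_alarms(items: list[LineItem], budgets: dict[str, float]) -> list[str]:
    """Users whose total spend exceeds their budget (users without a budget are skipped)."""
    spend = cost_by(items, "user")
    return sorted(user for (user,), total in spend.items()
                  if user in budgets and total > budgets[user])


if __name__ == "__main__":
    items = [
        LineItem("alice", "us-east", 9, "compute", 120.0),
        LineItem("alice", "eu-west", 14, "storage", 30.0),
        LineItem("bob", "us-east", 2, "compute", 400.0),
        LineItem("bob", "us-east", 3, "database", 75.5),
    ]
    print(cost_by(items, "user", "region"))
    print(budget_alarms(items, {"alice": 200.0, "bob": 300.0}))  # ['bob']
```

The same grouping function answers “who,” “where,” and “when” questions by changing only the keys, which is the kind of transparency the paragraph contrasts with bundled license agreements.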

Second, cloud providers and partners offer server migration and data-transfer tools to move workloads to and from the cloud, diminishing lock-in. These tools automate and simplify the migration process. Cloud providers also offer tools to export users’ virtual machine images. These tools are widely used by customers and partners every week. For example, VMware’s leading virtual machine technology works on AWS, Microsoft Azure, Google Cloud, IBM, and others, with “push button” automation for moving VMware workloads between on-premises environments and the cloud. The cloud providers themselves also have nascent tools for more ambitious multi-cloud management, in part to win workloads from each other. For example, Google’s Anthos and Microsoft’s Azure Arc help provide an integrated view of users’ IT resources across clouds. IBM and Red Hat also offer multi-cloud tools, technology (e.g., open source frameworks such as OpenStack), and professional services.

Third, users can architect applications using containers, microservices, and APIs to make their workloads more cloud agnostic. These technologies, such as Kubernetes containers, run a workload in smaller, independent “packages” that do not depend on specific features of any one cloud, so they can be moved across different clouds and on-premises environments. By breaking large applications into smaller pieces (e.g., microservices), users can design applications so that proprietary services are confined to small, clearly defined pieces that can be “designed around” or, if needed, reimplemented. Architecting IT workloads as independent, “loosely coupled” services with well-defined interfaces (APIs) makes portability much easier. Further, key cloud services are based on publicly available open source technologies, such as Linux VMs, Kubernetes containers, and Postgres databases.
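The “design around” idea can be sketched in Python with a narrow interface: business logic depends only on a small `SentimentService` protocol, and the proprietary ML service sits behind an adapter that can be swapped for a portable re-implementation. All class names, the `analyze` client method, and the keyword scorer are hypothetical placeholders, not any provider’s SDK; the stub client exists only so the sketch runs offline.

```python
from typing import Protocol


class SentimentService(Protocol):
    """The narrow, well-defined interface the rest of the application depends on."""
    def score(self, text: str) -> float: ...


class ProviderMLAdapter:
    """Wraps one cloud's proprietary ML API behind the interface.

    `client.analyze(text)` is a hypothetical call, not a real SDK method.
    """
    def __init__(self, client) -> None:
        self._client = client

    def score(self, text: str) -> float:
        return float(self._client.analyze(text)["sentiment"])


class StubProviderClient:
    """Offline stand-in for a provider SDK client, so the sketch runs anywhere."""
    def analyze(self, text: str) -> dict:
        return {"sentiment": -0.9 if "refund" in text.lower() else 0.5}


class KeywordFallback:
    """Deliberately simple portable re-implementation with no cloud dependency."""
    POSITIVE = {"good", "great", "fast"}
    NEGATIVE = {"bad", "slow", "broken"}

    def score(self, text: str) -> float:
        words = text.lower().split()
        hits = sum(w in self.POSITIVE for w in words) - sum(w in self.NEGATIVE for w in words)
        return hits / max(len(words), 1)


def flag_unhappy(reviews: list[str], service: SentimentService) -> list[str]:
    """Business logic: knows the interface, not which cloud implements it."""
    return [r for r in reviews if service.score(r) < 0]


if __name__ == "__main__":
    reviews = ["great service", "slow and broken", "I want a refund"]
    print(flag_unhappy(reviews, ProviderMLAdapter(StubProviderClient())))
    print(flag_unhappy(reviews, KeywordFallback()))
```

Because only the adapter touches the provider-specific call, switching clouds (or dropping the proprietary service) means rewriting one small class rather than the application, at the cost of whatever quality difference separates the two implementations.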

Fourth, what one customer sees as a proprietary service with switching costs, another sees as value-added innovation. Cloud providers offer higher-level services such as ML that leverage proprietary capabilities, and they invest in new functionality specific to their cloud. While this creates new capabilities, customers who choose these proprietary services also take on some switching costs. However, some level of switching costs (which can be mitigated) is not the same thing as lock-in and should be weighed against the value of new innovation.[65] Customers can choose what they value most.

Fifth, portability across clouds has costs as well. Designing cloud-agnostic architectures typically adds complexity and cost for customers, and refactoring applications so they can be moved in portable containers also has costs that not every application may justify. Further, most organizations don’t have enough skilled staff to build and manage cloud computing, and adding multiple cloud providers compounds this skills challenge. While the major clouds offer similar services for core capabilities, they implement them differently. Multiple clouds create a more complex environment, which is one reason most customers are not using multi-cloud management tools.[66] Multiple clouds can also lead to “least common denominator” approaches in which customers don’t take full advantage of advanced services, which can carry big opportunity costs for the business. Customers should evaluate whether multi-cloud tools achieve their goal of provisioning, securing, and managing operations across clouds and on premises. It remains to be seen whether multi-cloud management offerings such as Google Anthos, Azure Arc, and IBM’s Red Hat will add enough value to offset the additional cost and complexity.

Primary cloud and multi-cloud best practices. Many customers concentrate usage on one primary cloud for IaaS and PaaS. They benefit from innovative features, volume discounts, and the ability to build skills and IT productivity in their primary cloud. They should design for portability and create exit plans to mitigate lock-in concerns. Exit plans should clarify data formats, database schemas, and system dependencies, as well as the actual business need for portability. Deploying cost and usage tools is key to managing costs; these tools are available from cloud providers and from partners such as CloudCheckr, Apptio Cloudability, and CloudChomp. For basic needs, users should avoid operating the same workload across multiple clouds, as the cost and complexity are likely to yield disappointing savings in practice. However, it likely makes sense to add services from other clouds that provide capabilities important to the business. Users with distinct business and mission needs are likely to use multiple IaaS-PaaS cloud providers. This is an opportunity to place workloads in the “best of breed” cloud for a given service or domain, for example, adding another cloud to use its ML service, or Microsoft Cloud for Healthcare for medical applications. In this case, it’s better to run each new workload entirely in one cloud and use different clouds for different workloads, since architecting and operating the same workload across multiple clouds is more difficult. If we include SaaS applications, most customers will be multi-cloud because they are likely to use many SaaS applications, for example, Microsoft Teams for collaboration, SAP Concur for expense management, or Salesforce.com for customer relationship management.

In summary, multi-cloud should be less about spreading basic compute tasks across multiple clouds in an effort to save money and reduce lock-in; those goals can be better achieved in other ways. Rather, multi-cloud is better used to capture best-of-breed services across multiple clouds to maximize the cloud’s value.

Cloud Boosts Agility, Speed, and Innovation

Cost savings are often a starting point for moving to the cloud, and cost is easier to quantify. However, customers say agility, speed, and innovation matter most. Cloud computing enables users to bring products to market faster by experimenting quickly at low cost. An on-demand general-purpose server (8 cores, Linux, 32 GiB memory) costs just $0.384 per hour, lowering the cost of trials and experimentation.[67] For example, biotech company Moderna completed the computing for its vaccine in 42 days, compared with the normal 20 months.[68] Supersonic-aircraft designer Boom tested hundreds of designs with thousands of flight simulations, using 53 million core hours. Because the cloud can scale up capacity “horizontally” (using 1,000 machines for 1 hour rather than 1 machine for 1,000 hours), it can reduce the time spent on product design. And Boom is a start-up: the cloud democratizes access to the latest capabilities and scale, putting small companies on a more equal footing with large corporations and governments.
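The 1,000-machines-for-1-hour comparison is simple arithmetic, sketched below in Python using the $0.384 hourly price quoted above. It assumes a perfectly parallel workload and ignores start-up overhead, data transfer, and billing granularity, so it is an idealized bound rather than a forecast.

```python
HOURLY_PRICE = 0.384  # USD per server-hour, the on-demand price quoted in the text


def run_plan(server_hours: float, servers: int, price: float = HOURLY_PRICE) -> tuple[float, float]:
    """Return (wall_clock_hours, cost_usd) for a perfectly parallel job.

    Assumes the work divides evenly across servers with no coordination overhead.
    """
    wall_clock_hours = server_hours / servers
    cost_usd = servers * wall_clock_hours * price
    return wall_clock_hours, cost_usd


if __name__ == "__main__":
    for servers in (1, 10, 1_000):
        hours, cost = run_plan(1_000, servers)
        print(f"{servers:>5} servers: {hours:>7.1f} hours wall clock, ${cost:,.2f}")
```

Under these assumptions the bill is the same either way; what changes is that results arrive in one hour instead of roughly six weeks.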

The cloud enables a business to be more agile by rapidly standing up and tearing down the IT capabilities that underpin its operations and workflows. With the cloud, it no longer takes weeks or months to order servers and have IT administrators rack, install, and provision new resources. Instead, compute resources can be provisioned through software tools or the cloud management console in hours or days. IT is no longer a fixed cost, as it becomes “temporary,” shut off when the task is done. Cloud software development and “DevOps” also bring speed and automation to building and deploying new code, thereby increasing developer productivity. They bring a new culture and organizational approach that enables more rapid software release cycles and product development. This extends into IT management and operations, which are automated via programmatic software controls. The contrast with traditional on-premises environments could not be greater. After completing a robust but expensive, year-long on-premises ERP installation, one customer said, “Thanks, you’ve just poured concrete over my business.” The cloud is the opposite: it enables speed, scalability, and rapid development that bring a more nimble culture and organizational approach.

Cloud Infrastructure-as-Code Enables Automation, Productivity, and Specialization

We’ve emphasized that in the cloud, IT resources such as servers, storage, and databases are not fixed but can be added or taken down at scale in near real time through software. In contrast, manually provisioned systems with hard-wired dependencies between components are increasingly legacy practices. This has significant implications for how users manage their infrastructure. It drives productivity and flexibility in IT operations, as well as improved security. Software templates digitally describe collections of IT resources (databases, storage, compute virtual machines), their parameters, and any dependencies among them. These templates become “configuration files” the cloud treats as executable software code. That means the templates can be launched, deleted, and managed as a single complete system or “stack.” Further, IT administrators can use these templates to change the IT infrastructure in repeatable, predictable, and auditable ways. In the cloud, we no longer just program an individual application. The whole IT infrastructure itself becomes programmable.

An entire infrastructure with thousands of user accounts can be provisioned across geographic regions at huge scale with only a few keystrokes. IT administrators can test, tune, and launch the IT infrastructure in hours from the management console. IT architectures can be version-controlled, consistently cloned, and reused. The infrastructure itself can also be programmed to respond automatically to events: run-time metrics can be set to trigger alarms or automated actions. For example, an action command might be: “If increased demand causes performance to fall by 20 percent, then automatically add 5 large servers and load-balance across them.” Performance and compliance can be monitored and optimized automatically. For advanced users, the software development process itself can be incorporated: software code pipelines can be built and deployed, and then integrated into the existing IT infrastructure. Notice the specialization and division of labor: the cloud automates the underlying IT so that businesses and governments can devote resources and focus to applications and business processes that help customers and improve outcomes. Once again, the cloud brings cost savings, IT productivity, and business agility. Cloud IT becomes a scalable service in a way that was simply not possible before.
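The example rule above can be sketched in a few lines of Python. Here “performance” is any metric where higher is better (for example, requests served per second), and load balancing is plain round-robin; both are simplifying assumptions, and the `ServerPool` class is hypothetical rather than any provider’s autoscaling API.

```python
from itertools import cycle


def scaling_action(baseline: float, current: float,
                   drop_threshold: float = 0.20, servers_to_add: int = 5) -> int:
    """Servers to add under the rule: if performance falls 20% or more, add 5 servers."""
    drop = (baseline - current) / baseline
    return servers_to_add if drop >= drop_threshold else 0


class ServerPool:
    """Hypothetical pool of identical servers behind a round-robin load balancer."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)

    def scale_out(self, count: int, size: str = "large") -> None:
        start = len(self.servers)
        self.servers += [f"{size}-{start + i}" for i in range(count)]

    def route(self, requests) -> list[tuple]:
        """Assign each request to the next server in turn."""
        targets = cycle(self.servers)
        return [(request, next(targets)) for request in requests]


if __name__ == "__main__":
    pool = ServerPool(["large-0", "large-1"])
    pool.scale_out(scaling_action(baseline=1_000, current=780))  # 22% drop, so add 5
    print(len(pool.servers))  # 7
    print(pool.route(range(3)))
```

Real autoscaling services add cooldown periods and scale-in rules so the pool does not oscillate; the sketch shows only the trigger-and-act core.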

Challenges for Cloud Success

As with most newer technologies, there are challenges that stand in the way of broader and deeper adoption of cloud computing. Some of these issues are not unique to the cloud; nevertheless, we call out how the cloud heightens their importance or changes how they are addressed. Recurring themes include making the cloud simpler and easier to use and providing best-practice guidance and better tooling and automation.[69] The industry is starting to recognize and address these issues. Over time, this will help accelerate adoption and ensure customers get full value from the cloud.

Achieving Stronger Security and Compliance: Automation and Management

Security and compliance can be better in the cloud than on premises, but automation and management are needed to achieve these benefits. The first question many customers ask when moving to the cloud is about security. Security in the cloud is now as good as, and in many cases better than, security on premises, as the CIA’s CIO recently concluded.[70] However, the cloud uses a different “shared responsibility” model for security, divided between the cloud provider and the customer. The cloud provider is responsible for securing the underlying infrastructure of the cloud, while the customer is responsible for what they put in the cloud (e.g., guest operating systems, applications, data) or connect to it. The most security-conscious users around the world, from intelligence agencies to defense ministries and banks, now rely on cloud computing. Security remains a challenge for all, but these large and sophisticated organizations are demonstrating their confidence in cloud security by moving their workloads to the cloud. They also rely on 90 compliance regimes around the world to verify that security is implemented in production, for example, FedRAMP for unclassified U.S. government workloads, SRG IL-6 for secret U.S. Defense Department data, C5 in Germany, G-Cloud in the United Kingdom, GDPR for data privacy in Europe, plus industry-specific regimes such as HIPAA in health care and PCI DSS for card payments.[71]

Cloud computing presents new patterns of security, whereas many organizations are comfortable with the security models they already know. The cloud provider takes care of physical security (data-center perimeter, rack access, backup power, etc.), and security controls are implemented in software (hypervisor, operating system, access controls). This differs from users owning or leasing a data center that they operate with their own personnel. The cloud model also offers advantages. Security is provided by a hardened cloud infrastructure in which “best in class” security is a core competency and crucial to the provider’s business. Cloud security is the provider’s top priority and receives billions of dollars in investment. Customers now need to secure only a smaller scope (“attack surface”) of IT.[72] They can focus on securing their data, applications, and intellectual property. Security is also built by design into the cloud platform and each service.

Granular and scalable security policies. Customers use identity and access management (IAM) to set policies that, for example, allow only named users in a specific geographic region to access specific IT resources, down to specific rows in specific databases, at certain times of day. Multifactor authentication validates users not just with a name and password but with an additional code, typically generated by a small hardware device. Users decide which geography to store their data in and where their workloads run. Data moves only when users choose to move it.
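To make the idea of granular, default-deny policies concrete, the following Python sketch evaluates an access request against conditions like those just described: named user, region, resource, database row, time of day, and a completed multifactor check. The `Policy` and `Request` types, the resource name `db/customers`, and the region labels are hypothetical and do not reflect any provider's actual IAM policy language; it is a minimal illustration, assuming access is denied unless some policy matches every condition.

```python
from dataclasses import dataclass
from datetime import time


# Hypothetical, simplified policy model for illustration only; real IAM
# policy languages differ in syntax and evaluation rules.
@dataclass(frozen=True)
class Policy:
    users: frozenset[str]    # named users covered by the policy
    regions: frozenset[str]  # regions from which access is permitted
    resource: str            # e.g. "db/customers" (hypothetical name)
    rows: frozenset[int]     # row IDs that may be read
    start: time              # start of the allowed time-of-day window
    end: time                # end of the allowed window (inclusive)


@dataclass(frozen=True)
class Request:
    user: str
    region: str
    resource: str
    row: int
    at: time                 # time of day of the request
    mfa_verified: bool       # did the user pass multifactor authentication?


def is_allowed(request: Request, policies: list[Policy]) -> bool:
    """Default deny: grant access only if MFA succeeded and some policy
    matches every condition (user, region, resource, row, time of day)."""
    if not request.mfa_verified:
        return False
    return any(
        request.user in p.users
        and request.region in p.regions
        and request.resource == p.resource
        and request.row in p.rows
        and p.start <= request.at <= p.end
        for p in policies
    )


policies = [
    Policy(
        users=frozenset({"alice"}),
        regions=frozenset({"eu-west"}),
        resource="db/customers",
        rows=frozenset({1, 2, 3}),
        start=time(9, 0),
        end=time(17, 0),
    )
]

print(is_allowed(Request("alice", "eu-west", "db/customers", 2, time(10, 30), True), policies))  # True
print(is_allowed(Request("alice", "us-east", "db/customers", 2, time(10, 30), True), policies))  # False
```

Because every condition must hold and the default is to deny, adding a new restriction (say, a device check) means adding one more clause rather than rewriting the policy set.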

Multi-layered security and zero trust. Cloud providers use many layers of security, starting with virtual private networks, subnetwork isolation, and web application firewalls. Cloud-scale infrastructure is well positioned to protect against distributed denial-of-service (DDoS) attacks. Perimeter defense alone is insufficient, so security extends beyond the network to applications and the data itself. For example, new data loss prevention services use ML to identify anomalous access patterns, such as data being accessed by a non-typical user or in an unusual way. Cloud services continuously verify whether a user, machine, or application is “known” and what access and privileges it has. Data is encrypted both at rest (when stored) and in transit. Further, customers can generate and hold their own encryption keys, which the cloud provider cannot access. These approaches are especially important in a cloud world, because the physical location of a resource is no longer a primary determinant of its security.
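A minimal sketch of the kind of anomaly detection described above follows. As a simplifying assumption, it substitutes a plain statistical baseline (the mean plus a multiple of the standard deviation of each user's past daily accesses) for the ML models that real data loss prevention services use; the function name, the threshold of three standard deviations, and the sample data are all hypothetical.

```python
from statistics import mean, pstdev


def find_anomalies(history, today, threshold=3.0):
    """Flag users whose access to a dataset today departs from their baseline.

    history:   user -> list of past daily access counts for the dataset
    today:     user -> today's access count
    threshold: standard deviations above the mean treated as unusual
    """
    flagged = []
    for user, count in today.items():
        past = history.get(user)
        if not past:
            # No history at all: a non-typical user for this dataset.
            flagged.append((user, "non-typical user"))
            continue
        mu, sigma = mean(past), pstdev(past)
        # Floor sigma at 1 so a perfectly steady baseline does not flag tiny changes.
        limit = mu + threshold * max(sigma, 1.0)
        if count > limit:
            flagged.append((user, f"volume {count} vs. baseline {mu:.1f}"))
    return flagged


history = {"alice": [10, 12, 11, 9, 13], "bob": [5, 6, 5, 4, 5]}
today = {"alice": 12, "bob": 250, "mallory": 3}
print(find_anomalies(history, today))
# [('bob', 'volume 250 vs. baseline 5.0'), ('mallory', 'non-typical user')]
```

Alice's usage stays within her normal range, while Bob's sudden bulk access and Mallory's first-ever access are surfaced for review, mirroring the "non-typical user" and "unusual way" cases in the text.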

Platform security, continuous monitoring, and transparency. The cloud is well positioned to improve security because it is a platform with common security models, IAM policies, API models, encryption, logging, and monitoring. Cloud configuration tools can ensure predictable and consistent infrastructure builds with pretested security. Continuous monitoring software enforces policies about who has access to which IT resources, which prevents unauthorized configuration changes. It also tracks events down to individual API calls, showing who requested what service and when, which is key to security incident response. Monitoring software also alerts on common security misconfigurations, such as leaving certain network ports open, allowing public access to storage buckets, or not using strong multifactor authentication on root accounts. Just as infrastructure becomes code, security becomes code.
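The misconfiguration alerts described above amount to rules evaluated over a resource inventory, which is one concrete sense in which security becomes code. The sketch below checks a hypothetical inventory format for the three examples named in the text; the field names, the choice of SSH and RDP ports as risky, and the `check_config` function are illustrative assumptions, not any provider's monitoring API.

```python
# Hypothetical inventory format; real clouds expose configuration through
# their own APIs and monitoring services.
RISKY_PORTS = {22, 3389}  # SSH and RDP, often flagged when open to the internet
ANYWHERE = "0.0.0.0/0"    # CIDR block matching every IPv4 address


def check_config(resources):
    """Return (resource name, finding) pairs for common misconfigurations."""
    findings = []
    for r in resources:
        kind, name = r["type"], r["name"]
        if kind == "firewall_rule":
            if r.get("source") == ANYWHERE and r.get("port") in RISKY_PORTS:
                findings.append((name, f"port {r['port']} open to the internet"))
        elif kind == "storage_bucket":
            if r.get("public_access", False):
                findings.append((name, "bucket allows public access"))
        elif kind == "account":
            if r.get("is_root", False) and not r.get("mfa_enabled", False):
                findings.append((name, "root account without MFA"))
    return findings


inventory = [
    {"type": "firewall_rule", "name": "allow-ssh", "source": "0.0.0.0/0", "port": 22},
    {"type": "firewall_rule", "name": "allow-https", "source": "0.0.0.0/0", "port": 443},
    {"type": "storage_bucket", "name": "reports", "public_access": True},
    {"type": "account", "name": "root", "is_root": True, "mfa_enabled": False},
]

for name, finding in check_config(inventory):
    print(f"{name}: {finding}")
# allow-ssh: port 22 open to the internet
# reports: bucket allows public access
# root: root account without MFA
```

Because the rules are ordinary code, they can be version-controlled, reviewed, and run on every configuration change, the same way infrastructure definitions are.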

Improving security integration, automation, and management. The richness of the platform can create a stronger security posture. However, it can also be complicated and, as with on-premises IT, requires dedicated skills. Security was identified as the top cloud challenge by 81 percent of users in the Flexera 2021 State of the Cloud Report.[73] Many common vulnerabilities stem from misconfigured software, failure to keep up with a changing IT environment, or customers simply not using available security features. Higher-level services that integrate prescriptive guidance, best practices, and security artifacts into easier-to-use packages would help customers take full advantage of cloud security. Prior efforts can be rationalized into a simpler, coherent approach. Simple, specific guidance is at a premium: who is responsible for what in a shared-responsibility model, and how best to implement it with cloud tools and processes. Cloud providers can work with NIST and others to curate and publish these best practices and, where appropriate, develop them into common industry frameworks.

The cloud is also well positioned to provide better tools that automatically detect and correct vulnerabilities. As the industry has created new defenses, adversaries have responded with new counter-techniques. This “security leapfrog” has produced dozens of tools, each with its own data formats, interfaces, and management tooling. Integrating across these tools would give customers a more complete view of their security posture. Automation built into the cloud should also take over more of the continuous monitoring and management, making security more consistently deployed, up to date, and easier to use. There are also more opportunities to apply ML (already embedded in the cloud) to sort through the thousands of security events captured in system logs. The cloud itself can proactively correct straightforward vulnerabilities and surface complex situations for experts to resolve. Just as it already does for storage and servers, the cloud should manage more of the lower-level security and free security experts to focus on the most serious challenges.

Enhancing Governance, Managing IT and Data, and Reducing Complexity

Successful cloud adopters have found that strong governance and management are still required after moving to the cloud. Customer business leaders and IT executives need to jointly govern IT and data, paying special attention to the new methods of the cloud. Surveys show 75 percent of cloud users call out “governance” as a cloud challenge.[74] Customer IT leaders need to set access and security policies and ensure compliance, sometimes across thousands of users. Who gets access to which IT resources, and on what terms, is especially critical in a cloud environment, because thousands of servers can be provisioned in minutes and users have quick console access to powerful services such as facial recognition. IT leaders need to establish baseline infrastructure configurations and set guardrails. Operational performance against service levels should also be managed and improved. This takes on new importance in the cloud, where IT is a service, not a physical asset. IT costs, including any cost recovery, can be tracked, enforced with automated controls, and allocated across different business owners. Best practices need to be set so IT resources are not unintentionally left running. Again, the cloud provides enhanced, granular visibility into spending, but the speed with which resources can be provisioned through the management console also creates new risks. Software development and operational best practices (DevOps), traditionally the preserve of the IT organization, also should be recast to take advantage of cloud-native approaches such as continuous integration and continuous delivery (CI/CD). Training and certifications in new techniques will be required to use the cloud well. The cloud is well positioned to bring the many separate tools and services together into easier-to-use packages that are more automated, have built-in diagnostics and alerts, and come with best-practice instructions.
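Cost allocation across business owners and catching resources left running can be automated along the lines of the following sketch. It assumes a hypothetical resource record carrying an `owner` tag, an hourly cost, hours run, state, and average CPU use, and it treats a running resource below 5 percent average CPU as possibly idle; the record format, the dollar figures, and the threshold are illustrative choices, not provider defaults.

```python
from collections import defaultdict


def allocate_costs(resources):
    """Sum each resource's cost (hourly rate x hours run) by its 'owner' tag."""
    totals = defaultdict(float)
    for r in resources:
        owner = r.get("tags", {}).get("owner", "untagged")
        totals[owner] += r["hourly_cost"] * r["hours_running"]
    return dict(totals)


def find_idle(resources, max_cpu_percent=5.0):
    """List running resources whose low average CPU suggests they were left on."""
    return [
        r["id"]
        for r in resources
        if r["state"] == "running" and r["avg_cpu_percent"] < max_cpu_percent
    ]


fleet = [
    {"id": "vm-1", "tags": {"owner": "marketing"}, "hourly_cost": 0.50,
     "hours_running": 100, "state": "running", "avg_cpu_percent": 40.0},
    {"id": "vm-2", "tags": {"owner": "finance"}, "hourly_cost": 0.25,
     "hours_running": 720, "state": "running", "avg_cpu_percent": 2.0},
    {"id": "vm-3", "hourly_cost": 1.00,
     "hours_running": 10, "state": "stopped", "avg_cpu_percent": 0.0},
]

print(allocate_costs(fleet))  # {'marketing': 50.0, 'finance': 180.0, 'untagged': 10.0}
print(find_idle(fleet))       # ['vm-2']
```

Spending on resources without an owner tag lands in its own "untagged" bucket, which makes gaps in tagging visible rather than silently absorbing them.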

Data governance is critical to capturing data’s value and deserves special focus. Data is increasingly recognized as a valuable asset. Customers need to set data-classification tiers, access policies, backup and recovery targets (recovery time objective and recovery point objective, or RTO/RPO), and retention policies.[75] Data governance is needed to manage disparate data formats, indexing, and tooling requirements. Technical and organizational guidelines need to ensure data is not bottled up in silos. Because the cloud scales so easily, data volumes can grow quickly. Reference architectures that show customers how to stage data differently in the cloud and increase shared access can help. Cloud providers are also maturing new services called “data lakes” that address these needs. Techniques that move compute to the data, rather than the increasingly difficult approach of moving data to compute, are promising.
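Classification tiers, RTO/RPO targets, and retention policies can be checked mechanically once they are written down. In the sketch below, the tier names and target values are hypothetical examples, not recommendations. The check relies on the observation that worst-case data loss is roughly one backup interval, so the interval must not exceed the recovery point objective.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    rpo_hours: float     # recovery point objective: max tolerable data loss, in hours
    rto_hours: float     # recovery time objective: max tolerable time to restore
    retention_days: int  # minimum time backups must be kept


# Hypothetical classification tiers and targets, for illustration only.
TIERS = {
    "restricted": Tier(rpo_hours=1, rto_hours=4, retention_days=2555),
    "internal": Tier(rpo_hours=24, rto_hours=24, retention_days=365),
    "public": Tier(rpo_hours=168, rto_hours=72, retention_days=30),
}


def check_dataset(name, tier, backup_interval_hours, tested_restore_hours, retention_days):
    """Compare a dataset's backup settings with the targets for its tier."""
    target = TIERS[tier]
    gaps = []
    # Worst-case data loss is roughly one backup interval, so it must not exceed the RPO.
    if backup_interval_hours > target.rpo_hours:
        gaps.append(f"{name}: backup interval {backup_interval_hours}h exceeds RPO {target.rpo_hours}h")
    if tested_restore_hours > target.rto_hours:
        gaps.append(f"{name}: tested restore {tested_restore_hours}h exceeds RTO {target.rto_hours}h")
    if retention_days < target.retention_days:
        gaps.append(f"{name}: retention {retention_days}d below required {target.retention_days}d")
    return gaps


print(check_dataset("payments", "restricted", 6, 3, 2555))
# ['payments: backup interval 6h exceeds RPO 1h']
print(check_dataset("wiki", "internal", 24, 30, 90))
# ['wiki: tested restore 30h exceeds RTO 24h', 'wiki: retention 90d below required 365d']
```

Using a measured restore time rather than a nominal one reflects the point that recovery targets only mean something if restores are actually tested.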

Reducing complexity. As cloud capabilities grow, complexity grows with them. Complexity is at the root of many challenges. The 451 Research Cloud Price Index, for example, tracks 2 million product offerings (SKUs) across AWS, Google, Microsoft, Alibaba, and IBM.[76] Even basic functions like billing can be complicated. Integrating sprawling “point” features and reframing them into higher-level services or suites that solve real business needs would help many customers. Cloud services can also be made easier for customers to use, with a priority on better automation. Best-practice content exists in many forms and can be curated and packaged so it is easier to use. Promising areas include service-level measurement, service-development frameworks, and migration templates. Reducing complexity will also help harden the cloud as it becomes integral to the economy.

More broadly, across all of these needs, the cloud is a new operating model with a distinct culture. To fully capture cloud benefits and mitigate risks, successful adopters have found that strong IT governance is key to building a cloud culture and organization. This involves change, which can challenge existing organizations and depreciate hard-won skills. These concerns need to be addressed so that the change brought on by the cloud can be implemented. Culture and organizational change require special attention and are key enablers in the shift to a cloud world.

Building New Skills and Inclusivity

Cloud computing requires different skills, ranging from cloud-native application development to data architecture to operations. With unemployment high and industry clamoring for skilled employees, this is a win-win opportunity: demand for cloud skills far exceeds supply. However, these jobs require new certifications and new experience. The IT industry and cloud providers offer free training in many formats, including online courses, interactive labs, virtual day-long training sessions, and job-based learning paths, in multiple languages. Yet training doesn’t scale well and requires substantial investment of time and resources. The cloud requires not only new technical skills but also new domain skills and modern workflows throughout the organization. In 2020, Microsoft announced a Global Skills Initiative to reach 25 million people by year-end, and AWS announced a goal of training 29 million people globally by 2025, supported by free training.[77] From a business perspective, the providers recognize that the shift to the cloud can be accelerated by training customers and partners to adopt and use the cloud more effectively.

From an economic perspective, technology’s impact on the level and mix of employment is not new.[78] As with prior skill-based change, the cloud will cause labor market disruptions as demand falls for jobs in traditional areas (such as server and storage administration) where cloud automation of tasks such as patching and updates is more productive. At least since early 19th-century England, when “Luddites” smashed textile machinery, technological change has challenged existing skills and organizations. Yet over time, technology also creates new jobs, and organizations adapt. Governments and universities have studied technology-driven job losses and skill shortages and launched workforce training programs to help. Prior Information Technology and Innovation Foundation (ITIF) research on workforce adjustment also recommends specific actions.[79] At the same time, companies and governments need to invest more in training and reskilling. In particular, training can focus on gaps in priority areas such as IT security, data and analytics, and ML. The benefits of the cloud need to be equitably shared, and all communities need to be able to participate. Industry can be part of the solution. Skill building and inclusivity can be improved, even if these challenges cannot be solved quickly. We return to this in the government policy section.

Making Cloud Adoption Easier

To accelerate cloud adoption, moving workloads to the cloud needs to be simpler and faster. Cloud automation can make technical deployment easier (for example, provisioning new servers and user accounts), but migrating workloads can still be a complicated, year-long initiative. The Flexera State of the Cloud Report identifies understanding application dependencies (51 percent of users), assessing technical feasibility (48 percent), and assessing costs (44 percent) as the top challenges.[80]