False Alarmism: Technological Disruption and the U.S. Labor Market, 1850–2015

Contrary to popular perceptions, the labor market is not experiencing unprecedented technological disruption. In fact, occupational churn in the United States is at a historic low. It is time to stop worrying and start accelerating productivity with more technological innovation.

Contents

The Myth of Tech-Driven Labor-Market Disruption

Technology’s Impact on the Labor Market

Job Losses From Occupational Churn

But Will the Future Be Different?

Introduction

It has recently become an article of faith that workers in advanced industrial nations face almost unprecedented levels of labor-market disruption and insecurity. From taxi drivers being displaced by Uber, to lawyers losing their jobs to artificial intelligence-enabled legal-document review, to robotic automation putting blue-collar manufacturing workers on unemployment, popular opinion is that technology is driving a relentless pace of Schumpeterian “creative destruction,” and we are consequently witnessing an unprecedented level of labor market “churn.”[1] One Silicon Valley gadfly now even predicts that technology will eliminate 80 to 90 percent of U.S. jobs in the next 10 to 15 years.[2]

As the Information Technology and Innovation Foundation (ITIF) has documented, such grim assessments are the products of faulty logic and erroneous empirical analysis, making them simply irrelevant to the current policy debate.[3] For example, pessimists often assume that robots can do most jobs, when in fact they can’t, or that once a job is lost there are no second-order job-creating effects from increased productivity and spending. But the pessimists’ grim assessments also suffer from being ahistorical. When we actually examine the last 165 years of American history, statistics show that the U.S. labor market is not experiencing particularly high levels of job churn (defined as the sum of the absolute values of jobs added in growing occupations and jobs lost in declining occupations). In fact, it’s the exact opposite: Levels of occupational churn in the United States are now at historic lows. The levels of churn in the last 20 years—a period of the dot-com crash, the financial crisis of 2007 to 2008, the subsequent Great Recession, and the emergence of new technologies that are purported to be more powerfully disruptive than anything in the past—have been just 38 percent of the levels from 1950 to 2000, and 42 percent of the levels from 1850 to 2000.

Other than being of historical interest, why does this matter? Because if opinion leaders continue to argue that we are in uncharted economic territory and warn that just about anyone’s occupation can be thrown on the scrap heap of history, then the public is likely to sour on technological progress, and society will become overly risk averse, seeking tranquility over churn, the status quo over further innovation. Such concerns are not theoretical: Some jurisdictions ban ride-sharing apps such as Uber because they fear losing taxi jobs, and someone as prominent and respected as Bill Gates has proposed taxing robots like human workers—without the notion being roundly rejected as a terrible idea, akin to taxing tractors in the 1920s.

In fact, the single biggest economic challenge facing advanced economies today is not too much labor market churn, but too little, and thus too little productivity growth. Increasing productivity is the only way to improve living standards—yet productivity in the last decade has advanced at the slowest rate in 75 years.[4]

This report reviews U.S. occupational trends from 1850 to 2015, drawing on Census data compiled by the University of Minnesota’s demographic research program, the Minnesota Population Center, to compare the mix of occupations in the economy from decade to decade. We also assign a code to each occupation to judge whether increases or decreases in employment in a given decade were likely due to technological progress or other factors. Overall, three main findings emerge from this analysis.

First, contrary to popular perception, rather than increasing over time, the rate of occupational churn in recent decades is at the lowest level in American history—at least as far back as 1850. Occupational churn peaked at over 50 percent in the two decades from 1850 to 1870 (meaning the absolute-value sum of jobs gained in growing occupations and jobs lost in declining occupations was greater than half of total employment at the beginning of the decade), and it fell to its lowest levels in the last 15 years, to around just 10 percent. When looking only at absolute job losses in occupations, the last 15 years have again been comparatively tranquil, with just 70 percent as many losses as in the first half of the 20th century, and a bit more than half as many as in the 1960s, 1970s, and 1990s.

Second, many believe that if innovation only accelerates even more, then new jobs in new industries and occupations will make up for any technology-created losses. But the truth is that growth in already existing occupations is what more than makes up the difference. In no decade has technology directly created more jobs than it has eliminated. Yet, throughout most of the period from 1850 to the present, the U.S. economy as a whole has created jobs at a robust rate, and unemployment has been low. This is because most job creation that is not explained by population growth has stemmed from productivity-driven increases in purchasing power for consumers and businesses. Such innovation allows workers and firms to produce more, so wages go up and prices go down, which increases spending, which in turn creates more jobs in new occupations and, even more so, in existing ones (from cashiers to nurses and doctors). There is no reason to believe that this dynamic will change in the future, because consumer wants are far from satisfied.

Third, contrary to the popular view that technology today is destroying more jobs than ever, our findings suggest otherwise. The period from 2010 to 2015 saw approximately 6 technology-related jobs created for every 10 lost, which was the highest ratio (meaning the lowest share of jobs lost to technology) of any period since 1950 to 1960.

Many believers in the inaccurately named “fourth industrial revolution” will argue that this relative tranquility is just the calm before a coming storm of robot- and artificial-intelligence-driven job destruction. But as we discuss below, projections based on this view—including from such venerable sources as the World Economic Forum and Oxford University—are either immaterial or inaccurate.

Policymakers should take away three key points from this analysis:

1. Take a deep breath, and calm down. Labor market disruption is not abnormally high; it’s at an all-time low, and predictions that human labor is just one tech “unicorn” away from redundancy are likely vastly overstated, as they always have been.

2. If there is any risk for the future, it is that technological change and resulting productivity growth will be too slow, not too fast. Therefore, rather than try to slow down change, policymakers should do everything possible to speed up the rate of creative destruction. Otherwise, it will be impossible to raise living standards faster than the current snail’s pace of progress. Among other things, this means not giving in to incumbent interests (of companies or workers) who want to resist disruption.

3. Policymakers should do more to improve labor-market transitions for workers who lose their jobs. That is true regardless of the rate of churn or whether policy seeks to retard or accelerate it. Likewise, it doesn’t matter whether the losses stem from short-term business-cycle downturns or from trends that lead to natural labor-market churn. While this report lays out a few broad proposals, a forthcoming ITIF report will lay out a detailed and actionable policy agenda to help workers better adjust to labor-market churn.

The Myth of Tech-Driven Labor-Market Disruption

Today a growing number of futurists and pundits argue that the current technology system is unique, particularly in its pace of change. Go to any technology conference, and you are likely to hear from an enthusiastic futurist breathlessly claiming that the pace of innovation is not just accelerating, but that it is accelerating “exponentially.” Indeed, it’s become de rigueur for authors claiming to be futurists to paint dystopian pictures of technology running roughshod over jobs and whole occupations. All this is happening because, according to them, the pace of change is accelerating.

Futurist Daniel Burrus tells us “that we’re in a world of exponential transformational change.”[5] Joseph Jaffe talks about “explosive and exponential” advances.[6] John Kotter writes that the rate of change is “not just going up. It’s increasingly going up not just in a linear slant, but almost exponentially.”[7] Peter Diamandis and Steven Kotler write that we are entering an era in which the pace of innovation is growing exponentially.[8]

For some of these techno-pundits, this so-called exponential change (i.e., change is twice as fast next year as this, four times faster in two years, and eight times faster in three) is an unalloyed good. But a larger share of “exponentialists” are at best torn in their view, liking the progress but fearing it at the same time. MIT professors Erik Brynjolfsson and Andrew McAfee capture this equivocation in their book The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. Yes, they, too, tout that “technical progress is improving exponentially.” But they also argue that the “second machine age” (the first one was during the Industrial Revolution) is “doing for mental power … what the steam engine and its descendants did for muscle power. They’re allowing us to blow past previous limitations and taking us into new territory.”[9] The tone suggests that people who do not work with their “hands,” in jobs long thought safe from automation, may soon be at risk, with no clear path to new employment.

Perhaps no one captures and spreads this at once optimistic and anxious zeitgeist better than Klaus Schwab, the chairman of the World Economic Forum. In writing about the so-called fourth industrial revolution, Schwab argues:

The speed of current breakthroughs has no historical precedent. When compared with previous industrial revolutions, the Fourth is evolving at an exponential rather than a linear pace. Moreover, it is disrupting almost every industry in every country. And the breadth and depth of these changes herald the transformation of entire systems of production, management, and governance.[10]

To be sure, evidence of technological change is all around us—smartphones, self-driving cars, amazing drug discoveries, and even drone warfare. But despite these techno-utopian claims, the pace of technical change has not accelerated over the last 200 years, and little evidence exists that this will change going forward. Certainly there have been cycles of change, with some periods seeing the development of new “general purpose technologies” (GPTs) and other periods seeing the “installation” and improvement of these technologies.[11] But past periods of GPT development have been just as robust as the current one, if not more so. Indeed, if we could go back in time and ask someone in 1900 about the pace of technological change, they would likely tell a similar story about its acceleration, citing the proliferation of amazing innovations (e.g., cars, electric lighting, the telephone, the record player). But notwithstanding iconic innovations such as electricity, the internal combustion engine, the computer, and the Internet, change is almost always more gradual than many think. As historian Robert Friedel notes, “even the technological order seems more characterized by stability and stasis than is often recognized.”[12] And as discussed below, that is likely to be the case regarding technology-induced labor-market change.

Technology’s Impact on the Labor Market

To better understand technology’s impact on labor markets and occupations, it’s important to understand that labor-market and occupational shifts occur through several different channels. One channel by which technology changes occupational structures is through transforming products and industries. This is the proverbial buggy-whip manufacturer case. As autos became widespread, the buggy business declined, and the economy needed fewer workers making buggy whips (and buggies) but more making cars and their components.[13] We can also see this dynamic by looking at changes in the technology of recorded-music production. In 1939, recorded music meant phonograph records playing at 78 rpm, and the industry needed a set of occupations to produce them. These included assembling adjuster, backer-up, matrix-bath attendant, matrix-groove roller, matrix-number stamper, needle lacquerer, pick-up assembler, pick-up coil winder, record finisher, record press adjuster, record-press man, sapphire-stylus grinder, and sieve gyrator.[14] A sieve gyrator is someone who “breaks up and sifts material (basically shellac) for making phonograph records; places material in a breaker which crushes it; dumps crushed materials in sieve machine which automatically sifts it; returns pieces to breaker that do not pass through screen.”[15] Needless to say, there are likely very few, if any, sieve-gyrator jobs left today, as most people consume their music on CD players and various kinds of MP3 players. In a few years, it’s likely that even the occupations involved in making music CDs will go by the technological wayside, as most music will involve downloading bits from a server to a digital music player.[16]

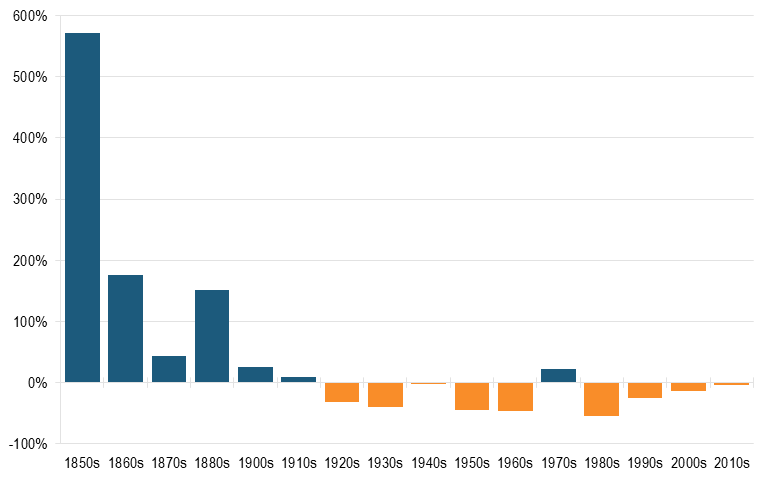

Technology clearly creates jobs when it enables the creation of whole new industries and occupations. A case in point is the rise of the railroad industry in the 1850s. Between 1850 and 1860, the number of locomotive engineers, conductors, and brakemen increased by almost 600 percent (albeit from a small base) and continued to expand at a robust rate for the rest of the century. (See figure 1.) But that growth turned negative by the 1920s, in part as railroads got more productive (needing fewer operators), but also as cars and trucks began to take market share away from railroads. Since 1920, these rail occupations have shrunk every decade except the 1970s.

Figure 1: Locomotive Engineers, Railroad Conductors, and Railroad Brakemen[17]

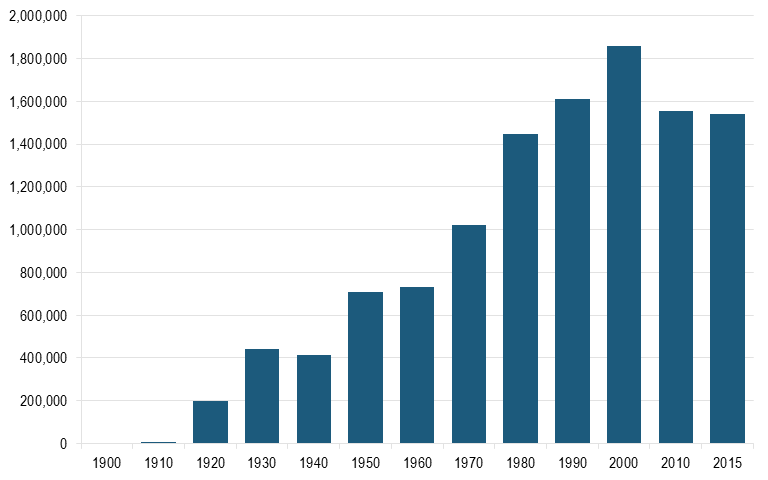

But as the number of rail workers was declining, the number of workers related to the automobile industry grew. For example, the number of automobile mechanics grew from almost none in 1910 to over 1.8 million in 2000, as the number of automobiles grew dramatically while their real price fell and their quality increased. (See figure 2.) However, since 2000, the number has fallen, perhaps in part because the mechanical quality of autos has improved significantly, and autos require fewer repairs. For example, cars used to need frequent tune-ups to adjust mechanical settings (e.g., adjusting and replacing the points in a distributor). But with electronic systems, such mechanical tune-ups are needed much less often.

Figure 2: Mechanics and Repairmen, Automobile[18]

Technology can also eliminate jobs by allowing some occupations to be more productive. In these cases, technology reduces the number of jobs relative to the overall economy. For example, automation has enabled many manufacturing industries to produce the same or more with fewer workers. In fact, by enabling self-service or complete automation, technology can eliminate certain occupations completely, freeing up labor for work that machines can’t do. Seventy years ago, tens of thousands of young men and boys worked in bowling alleys as pinsetters, setting up the pins after the bowlers had knocked them down. But the development of the automated pin-setting machine by AMF in the 1940s eliminated the need for pin-setting jobs while simultaneously ushering in a golden era for American bowling, as prices fell and convenience increased.[19] (See figure 3.)

Figure 3: Technology Can Eliminate Occupations: The Case of Bowling Pinsetters

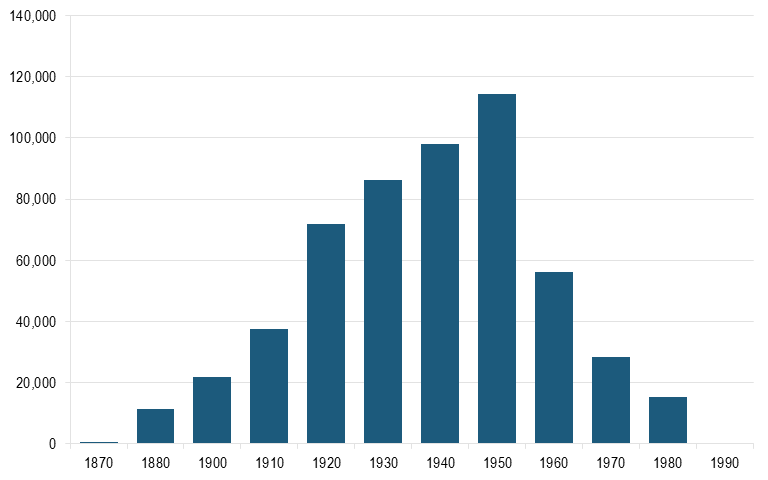

Likewise, the development of the self-service elevator by Otis Elevator in the 1920s did away with the need for virtually all elevator operators.[20] We can see this trend in figure 4. In 1860, there were no elevator operators in the United States because there were no elevators. In the absence of high-strength steel, it was impossible to construct tall buildings and difficult to build passenger elevators themselves. But once such steel was developed and its price declined in the 1880s, both took off. As a result, while there were just 497 elevator operators in 1870, the number steadily grew to 114,473 by 1950.[21] But as self-service elevators were developed and adopted, this occupation shrank to essentially zero by 1990. Indeed, one of the symbolic actions the House Republicans took in 1994 when they gained a majority in the U.S. House of Representatives was to eliminate the staffed elevators for members. Now members would have to push the buttons on their own. To be sure, a few “high-end” buildings still have an elevator operator, but they are few and far between. This evolution can provide some guidance about what we might expect in the future: despite the development of self-service elevators in the 1920s, it was another 70 years before the occupation was, for all intents and purposes, eliminated.

Figure 4: Number of Elevator Operators, 1870–1990[22]

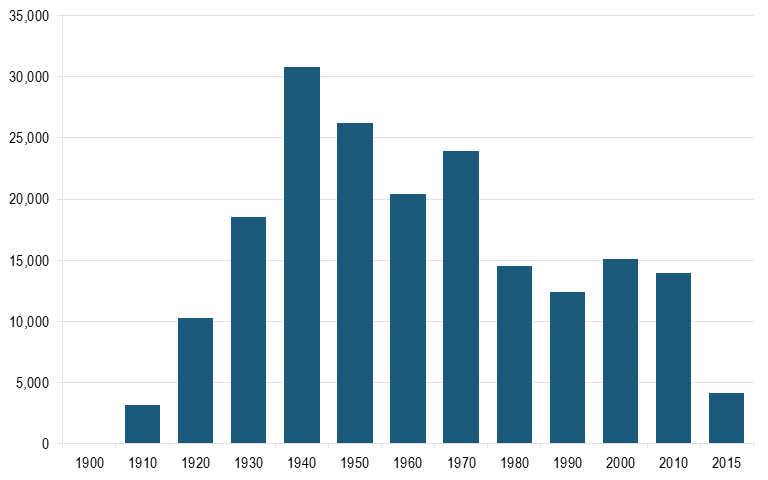

We see a somewhat similar but not quite so stark pattern for motion-picture projectionists. (See figure 5.) Clearly no projectionists existed before motion pictures spread in the first decade of the 20th century. As movies gained popularity, employment quickly took off, peaking in 1940 at 31,000. But with the invention of television in 1927 and its rapid sales growth in the 1950s and 1960s, the number declined as more Americans chose to stay home and watch TV instead of going out to the “talkies.” As more movie theaters became multiplexes in the 1970s and 1980s, the number of projectionists fell again, since one projectionist could now manage more than one projector.

Figure 5: Motion Picture Projectionists Jobs in the United States, 1900–2015[23]

There are more recent technology-induced declines. For example, while 180,000 Americans were employed as travel agents at the turn of the millennium, just over 90,000 were employed in 2015, following the emergence of Internet-based travel booking. Likewise, there are 57 percent fewer telephone operators, 41 percent fewer data-entry clerks, and 3 percent fewer postal-mail carriers than there were in 2000, even though the volume of information transactions has grown, all because of digital automation and substitution. However, technology is not the only driver of employment in these occupations. Perhaps because of growing demand for travel, there were more travel agents in 2015 than in 2010.[24]

But the converse is also true. Specific occupations usually grow when it is hard to improve worker productivity. Forty years ago, economist William Baumol described what became known as “Baumol’s disease,” in which industries that could not raise productivity (or at least did not raise it as quickly as economy-wide productivity grew) would become a larger share of the economy, at least in terms of their share of the workforce. A case in point is the education industry and teachers. It still takes one teacher to teach 30 students in elementary school, just as it did 40 years ago. As a result, the total number of elementary- and secondary-school teachers increased by 1.5 million, or 39 percent, from 1980 to 2015, while their share of all employment hovered around 3 percent over the past three decades.[25]

But technology doesn’t just eliminate jobs; it also creates them, although as noted above, normally not as many as it eliminates. If we look at some of the occupations of today that largely didn’t exist 30 years ago (e.g., distance-learning coordinators, green marketers, informatics nurse specialists, nanosystems engineers, and cytotechnologists), we can see this dynamic. These occupations emerged because technological innovation made them possible. There was no need, for example, for informatics nurse specialists when virtually all medical information was on paper. Likewise, why have a distance-learning coordinator when broadband communications were largely nonexistent, or a green marketer when clean tech was a niche product at best? In 2012, there were 466,000 U.S. jobs related to mobile apps, up from zero in 2007. Indeed, if you examine the fastest-growing U.S. industries over the last 15 years, certainly some are due to technological innovation. For example, support activities for oil and gas operations grew by 537 percent, in part to support natural-gas “fracking,” which was in turn enabled by innovations, many of them with U.S. Department of Energy origins.[26] Not surprisingly, many fast-growing industries are in IT, such as Internet publishing, Internet service providers, software, and cellular communications systems. Others, such as biological products and surgical and medical instrument manufacturing, are also spurred by innovation, which enables new products to come to market (but also by globalization, which gives access to larger markets for industries in which the United States still has a competitive advantage).

As expected, we also see this in the growth of computer occupations. The Bureau of Labor Statistics (BLS) did not record any until the 1970s, when mainframe computers and then minicomputers started to take off. With the growth of personal computers (PCs) in the 1980s, the web in the 1990s, and a whole suite of information technologies since then, computer occupations grew twice as fast as overall jobs from the 1970s to the 2000s. With the maturing of the industry, coupled with some offshoring of more routine software jobs, growth slowed in the 2000s but rebounded in the last five years. (See figure 6.)

Figure 6: Growth of Computer Occupations Relative to Overall Employment Growth[27]

The third source of occupational churn is changes in the types of goods and services demanded by consumers (whether these are businesses, governments, or individuals). Various factors can alter the composition of demand, including demographics, culture, and government. For example, as advanced economies age, a greater share of jobs will be in health-care occupations. The fact that the number of child-care workers increased from 1 million in 1990 to just under 2 million in 2010 is in part a reflection of a change in cultural norms and personal attitudes about having both parents of young children in the labor force. Likewise, gross output in military armored vehicle, tank, and tank component manufacturing grew 700 percent from 1998 to 2011 as we produced vehicles for American armed forces in Iraq and Afghanistan.

Methodology

To assess the history of occupational change, the best source of data is the U.S. Census Bureau. While the Bureau of Labor Statistics (BLS) is the chief agency that defines and estimates occupations, its publicly available data only goes back to 1988. The Census Bureau, through its decennial census and, more recently, its annual American Community Survey, has long collected data on U.S. occupations: farmers, architects, bakers, sailors, and sales workers, to name just a few. Because occupations are not static, and some new ones emerge while others are eliminated, the BLS has occasionally changed its occupational categories (the most recent overhaul of the categories happened in 2010, and the previous significant overhaul came in 1980).[28]

Easily accessible data in machine-readable form is not available on either the Census or BLS websites. To remedy this, the Minnesota Population Center at the University of Minnesota created two such datasets: one using 1950 occupational classifications to examine change from 1850 (the earliest year of data collection) to 2015, and another using 2010 occupational classifications to examine change from 1950 to 2015. This report analyzes both series.

Researchers at the Minnesota Population Center constructed these datasets by estimating national employment by occupation from weighted individual census responses. Because occupation categories change from decade to decade, they harmonize historical occupation categories to either 1950 or 2010 occupation codes. This is done by, for example, mapping employment in an occupational category in 1850 to what its equivalent category would have been in 1950 (in cases where an 1850 occupation did not have a 1950 equivalent, that employment would have been subsumed into a broader, more aggregated category). While harmonizing 150 years of occupation categories to occupations that existed specifically in 1950 fails to capture some detailed occupational shifts (especially in the decades at the beginning and end of this dataset), it nonetheless allows this analysis to draw conclusions about general occupation trends over such a long period.
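The harmonization step can be pictured as re-aggregating employment counts through a crosswalk. The Python sketch below is only an illustration under stated assumptions: the occupation labels, the `CROSSWALK_1950` mapping, and the `harmonize` helper are hypothetical, not the Minnesota Population Center's actual codes or procedure, and the counts are made up.

```python
from collections import defaultdict

# Hypothetical crosswalk from historical occupation labels to harmonized
# 1950-style categories. Labels are illustrative, not actual census codes.
CROSSWALK_1950 = {
    "sieve gyrator": "operatives, n.e.c.",
    "record finisher": "operatives, n.e.c.",
    "locomotive engineer": "locomotive engineers",
}


def harmonize(employment, crosswalk, fallback="other, n.e.c."):
    """Re-aggregate employment counts onto a common category scheme.

    Occupations without an entry in the crosswalk are folded into a broader
    fallback category, so total employment is preserved.
    """
    harmonized = defaultdict(int)
    for occupation, count in employment.items():
        harmonized[crosswalk.get(occupation, fallback)] += count
    return dict(harmonized)


# Illustrative counts only, not data from the report.
counts = {"sieve gyrator": 120, "record finisher": 300,
          "locomotive engineer": 50, "buggy-whip maker": 40}
print(harmonize(counts, CROSSWALK_1950))
# {'operatives, n.e.c.': 420, 'locomotive engineers': 50, 'other, n.e.c.': 40}
```

Because every count lands in exactly one category, total employment is the same before and after harmonization; the cost, as the text notes, is that detail for folded-in occupations such as sieve gyrators is no longer visible.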

As the analysis below shows using this dataset, job churn is now at its lowest level in more than 150 years. But to address possible concerns that our analysis fails to capture “newer” technology-related job churn since 2000, this analysis is complemented by the dataset that measures employment from 1950 to 2015 using the 2010 occupation classification. This dataset shows different levels of churn but the same general trend.

Notwithstanding the usefulness of these datasets, a number of sources of potential error exist. The first is a primary data-collection error, where someone may be incorrectly classified. A second is an error in transcribing to the original or secondary database. A third possible error is that some occupations record employment in one decade but none in the prior or subsequent decade. In some cases, if it was clear that this was a new occupation (or one on its last legs), then these numbers were accepted. For example, it is logical that there were no aeronautical engineers in 1910, but 401 in 1920. However, in other cases, the gaps appeared to reflect data-coding or reclassification changes, or the data was simply missing. For example, the datasets show that there were 3,765 college presidents and deans in 1930 and 5,728 in 1950, but none in 1940. In these cases, we filled the missing value with the midpoint of the values for the two adjacent decades.
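The midpoint rule is simple arithmetic: for the college-president example, (3,765 + 5,728) / 2 = 4,746.5 for 1940. A minimal Python sketch follows; the function name and the use of `None` to flag a decade the analyst has judged to be a coding gap (rather than a genuine zero) are assumptions for illustration.

```python
def fill_missing_decades(series):
    """Replace an interior gap with the midpoint of the neighboring decades.

    `series` maps census year -> employment. A value of None marks a decade
    the analyst has judged to be a coding gap rather than a genuine zero;
    that judgment is made by hand and is not automated here.
    """
    years = sorted(series)
    filled = dict(series)
    for prev_year, year, next_year in zip(years, years[1:], years[2:]):
        before, after = series[prev_year], series[next_year]
        if series[year] is None and before is not None and after is not None:
            filled[year] = (before + after) / 2
    return filled


# College presidents and deans, using the figures cited in the text.
print(fill_missing_decades({1930: 3765, 1940: None, 1950: 5728}))
# {1930: 3765, 1940: 4746.5, 1950: 5728}
```

Genuine zeros, such as aeronautical engineers in 1910, are left as 0 rather than None, so the sketch does not touch them.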

In some cases, an occupation goes to zero in a particular decade. If the zero appears to stem from a recoding of the occupation rather than from an actual decline, both years of that decade pair (the zero year and the prior decade) are assigned a zero, so the recoding does not register as churn. For example, decorators and window dressers were growing for at least 70 years up to 1960, and in 1970 suddenly went to zero. In this case, they were recorded as zero for both years of the 1960 to 1970 decade.

Another limitation is the use of decade-long comparisons. It is possible that in any particular decade an occupation could see a decline in jobs in the mid-decade and then rebound by the end. The experience for the individual workers could be disruptive, but this measure would not record that. However, since the purpose of this study is to measure historical change using decade-long periods, it should still provide reasonably accurate trend information. Using decade data weighted by the overall size of the workforce also significantly reduces the influence of short-term fluctuations due to recessions. Our method controls for business-cycle impacts on employment change by measuring individual occupational change as a percentage of overall employment and by using time frames that span multiple business cycles. To the extent recessions create occupational churn, this method would not measure it.

We report two churn indicators. The first measures change in each occupation relative to overall employment change. With this method, even if an occupation doesn’t lose jobs, if it didn’t grow as fast as the overall labor market, the delta between its growth and overall labor-force growth is counted as churn. In other words, if a particular occupation grew 4 percent in a decade but the overall number of jobs grew 10 percent, the rate of change would be negative 6 percentage points. Likewise, if employment in an occupation grew 15 percent in a decade but the overall number of jobs grew 10 percent, the rate of change would be 5 percentage points. We took the absolute value of each occupation’s resulting change in jobs, summed these values across all occupations, and divided the sum by the number of jobs at the beginning of the decade to measure the rate of churn.
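Method one can be written as churn = Σ |E_end − E_start × (1 + g)| / T_start, where E is an occupation's employment, g is the overall employment growth rate for the decade, and T_start is total employment at the start. The Python sketch below is a minimal reading of that description, assuming employment is supplied as occupation-to-count dictionaries; the function name and the three-occupation decade are hypothetical.

```python
def churn_method_one(start, end):
    """Method-one churn for one decade: sum of |actual - expected| / start total.

    `start` and `end` map occupation -> employment at the beginning and end of
    the decade. "Expected" employment assumes the occupation grew at the same
    rate as total employment; occupations missing from one side count as zero.
    """
    total_start = sum(start.values())
    overall_growth = (sum(end.values()) - total_start) / total_start
    deviation = 0.0
    for occupation in start.keys() | end.keys():
        expected = start.get(occupation, 0) * (1 + overall_growth)
        deviation += abs(end.get(occupation, 0) - expected)
    return deviation / total_start


# Hypothetical decade reproducing the examples in the text: total employment
# grows 10 percent; occupation A grows 4 percent, B 15 percent, C 11 percent.
start = {"A": 100, "B": 100, "C": 100}
end = {"A": 104, "B": 115, "C": 111}
print(round(churn_method_one(start, end), 4))  # 0.04
```

Because the expected values always sum to actual end-of-decade employment, above-average gains and below-average shortfalls offset exactly (here 5 + 1 against 6), so each side contributes half of the method-one figure.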

The second indicator uses a related measure. For declining occupations, it includes only occupations that experienced an absolute loss in jobs. For example, the occupation in the above example (negative 6 percentage points after controlling for total employment change) was not included, since its employment still grew. On the growth side, only occupations that grew faster than the national average were included. By definition, churn rates using this method were lower than under the first method (72 percent of the first method’s rates using the 2010 definitions). However, the correlation between the two measures of churn was quite robust (0.92 for 1950 occupation codes and 0.90 for 2010 occupation codes), suggesting that both measures capture broadly the same historical changes.
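The text does not spell out exactly which amount is summed on each side for method two, so the Python sketch below adopts one plausible reading, labeled as an assumption: declines count the absolute jobs lost in occupations whose employment fell, and growth counts only the amount by which an occupation beat the overall growth rate. The function name and data are hypothetical.

```python
def churn_method_two(start, end):
    """Method-two churn under one reading of the text (an assumption).

    Declines: absolute job losses, counted only for occupations whose
    employment actually fell. Growth: the amount by which an occupation beat
    the overall growth rate, counted only for above-average growers.
    Occupations that grew, but more slowly than average, are excluded.
    """
    total_start = sum(start.values())
    overall_growth = (sum(end.values()) - total_start) / total_start
    losses = gains = 0.0
    for occupation in start.keys() | end.keys():
        jobs_start = start.get(occupation, 0)
        jobs_end = end.get(occupation, 0)
        if jobs_end < jobs_start:
            losses += jobs_start - jobs_end
        else:
            excess = jobs_end - jobs_start * (1 + overall_growth)
            if excess > 0:
                gains += excess
    return (losses + gains) / total_start


# Hypothetical decade with 10 percent total growth: A grows 4 percent (slower
# than average, excluded), B grows 15 percent, C grows 31 percent, D loses 10 jobs.
start = {"A": 100, "B": 100, "C": 100, "D": 100}
end = {"A": 104, "B": 115, "C": 131, "D": 90}
print(round(churn_method_two(start, end), 4))  # 0.09
```

For the same hypothetical decade, method one gives (6 + 5 + 21 + 20) / 400 = 0.13. Under this reading, when total employment grows, each counted loss is no larger than its method-one deviation and slower-than-average growers drop out, so method two comes in at or below method one, consistent with the text.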

ITIF also attempted to measure how much churn was due to technological change, either from new jobs and occupations created because of technology or from jobs eliminated by it. To be sure, assessing whether technology played a role in an occupation’s growth or decline is clearly a judgment call and subject to error. We used the following rules of thumb to guide the choice. When it appeared that an innovation had led to the creation of new jobs in an occupation or the decline of others, that change was coded as technological. For example, in the 1910s, 7,000 automobile-repair jobs were created. This looked like a clear case of technological innovation creating the need for new jobs in an occupation. In contrast, when automobile-repair jobs grew by the middle of the century, this was not attributed to technological change. There was nothing all that specific about technological innovation that led to more repair jobs then. The likely cause was increased standards of living, coupled with lifestyle changes (e.g., suburbanization), both of which led more Americans to buy cars, and in turn to consume more auto-repair services.

There were also cases where it appeared likely that technology led to job reduction. One reason was that technology was used to improve productivity. This was the case with agriculture jobs through much of the 20th century, as discussed below. Another was the development of technological substitutes. For example, with the development of low-cost household appliances (e.g., vacuum cleaners, washing machines), middle- and upper-middle-class Americans turned to self-service (cleaning their houses themselves), and the number of household workers declined dramatically, at least until the 1960s, when incomes increased significantly and more women joined the workforce.

Often, what might appear to be technology-driven change is not. For example, the number of avionics technicians declined in the 1980s, but this did not appear to result from any change in technology (for example, no new technologies came along that reduced aviation, and there was no evidence that any technology significantly increased avionics technicians’ productivity).

Finally, some occupations lose or gain jobs because of trade, and it can be difficult to separate jobs lost or gained from trade from those lost or gained from technology. As ITIF has written elsewhere, most net losses of U.S. manufacturing jobs up through the 1990s appear to have been due to manufacturing’s superior productivity growth relative to nonmanufacturing.[29] But this changed somewhat in the 2000s with, among other things, the China trade shock, when the United States ran very large trade deficits in manufactured goods. As a result, half of manufacturing-occupation losses in the 2000 to 2010 period were assumed to be due to trade, not technology. This method does not change overall job-churn numbers for the decade, but it is one reason why tech-based churn for the decade is slightly lower than many might expect. Similarly, tech-based churn for the 1980s was adjusted, given the significant growth of the manufacturing trade deficit in that decade, coupled with moderate job loss in manufacturing. In this case, one-quarter of manufacturing job losses were assumed to be due to trade. However, these adjustments produced only modest increases in the ratios of jobs created by technology to jobs eliminated by it, as discussed below.
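The trade adjustment amounts to reassigning a fixed fraction of manufacturing-occupation losses from technology to trade before computing the ratio of tech-created to tech-eliminated jobs. The sketch below illustrates that arithmetic under the assumption that the adjustment touches only the manufacturing term; the function name `tech_ratio` and all job counts are hypothetical, and only the one-half (2000s) and one-quarter (1980s) trade shares come from the text.

```python
def tech_ratio(tech_created: float,
               tech_lost_nonmfg: float,
               tech_lost_mfg: float,
               trade_share_of_mfg_losses: float = 0.0) -> float:
    """Ratio of jobs created by technology to jobs eliminated by technology.

    Assumption: a fraction `trade_share_of_mfg_losses` of manufacturing-occupation
    losses is reassigned to trade and no longer counts as technology-driven loss.
    Total churn is unchanged; only its attribution shifts.
    """
    if not 0.0 <= trade_share_of_mfg_losses <= 1.0:
        raise ValueError("trade share must be between 0 and 1")
    tech_lost = tech_lost_nonmfg + tech_lost_mfg * (1.0 - trade_share_of_mfg_losses)
    return tech_created / tech_lost


# Hypothetical counts (thousands of jobs), not the report's data.
print(f"{tech_ratio(300, 600, 400):.1%}")       # → 30.0%
print(f"{tech_ratio(300, 600, 400, 0.5):.1%}")  # → 37.5%
```

Because only the manufacturing term shrinks, the adjustment moves the ratio little when manufacturing is a small part of tech-attributed losses, which is one way such an adjustment can end up raising the ratio only modestly.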

Findings

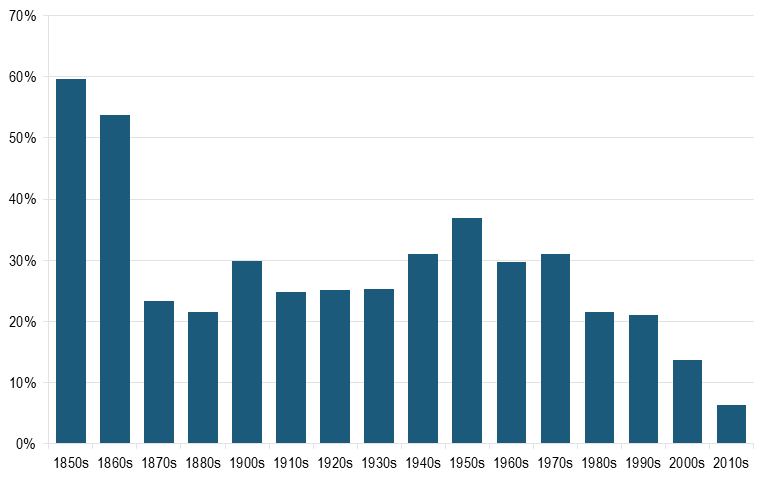

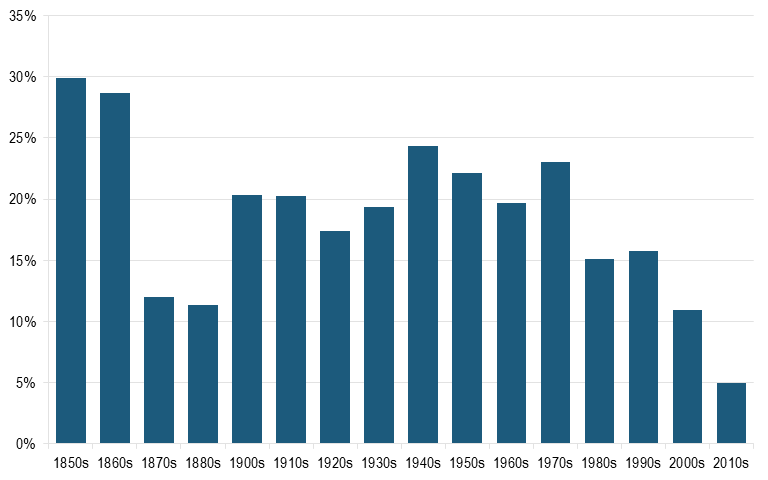

The findings are clear: Rather than increasing, the rate of occupational churn in the last few decades is the lowest in American history, at least since 1850. Under method one, using the 1950 occupational categories, occupational churn peaked at over 50 percent in the decades between 1850 and 1870. (See figure 7.) But it was still above 25 percent for the decades from 1920 to 1980. In contrast, it fell to around 20 percent in the 1980s and 1990s, to just 14 percent in the 2000s, and to 6 percent in the first half of the 2010s.

Figure 7: Rate of Occupational Change by Decade (1950 Categories, Method One)[30]

We see similar patterns using the 1950 occupational categories with method two (looking only at occupations that experienced absolute losses or gains in excess of the overall percentage change in the workforce). (See figure 8.) As noted above, the correlation between the two methods is 0.92. The main divergence occurs in the 1800s, where method two produces churn rates half those of method one. (Churn in the 1850 to 1860 pair falls from 60 percent to 30 percent, and churn in the 1880 to 1900 pair falls from 22 percent to 11 percent.) The rates are lower under method two because fewer occupational categories count toward churn.

Figure 8: Rate of Occupational Change by Decade (1950 Categories, Method Two)[31]

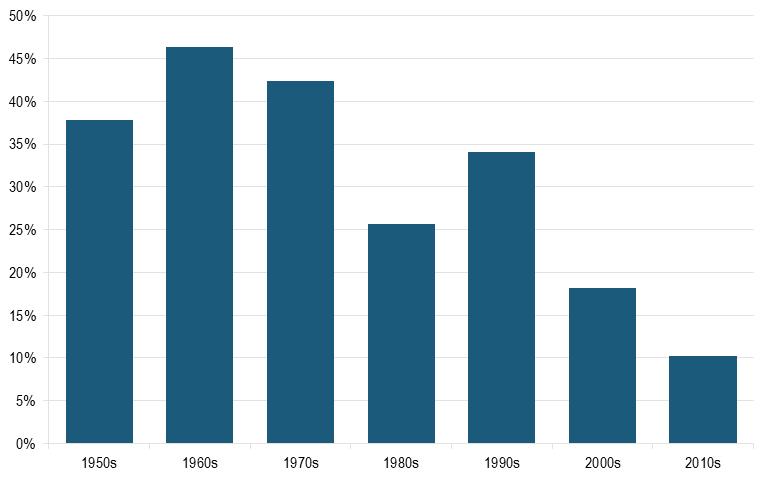

We see similar patterns using the 2010 occupational categories with both methods. Using method one, occupational churn peaked in the 1960s at 46 percent, fell to just 18 percent in the 2000s, and fell to 10 percent in the first half of the current decade. (See figure 9.) Given the turmoil of the 2000s, including the dot-com crash early in the decade, the financial crisis and Great Recession late in the decade, and the unremitting challenge from trade with China throughout, it is perhaps surprising that churn levels were so low.

Figure 9: Rate of Occupational Change by Decade (2010 Categories, Method One)[32]

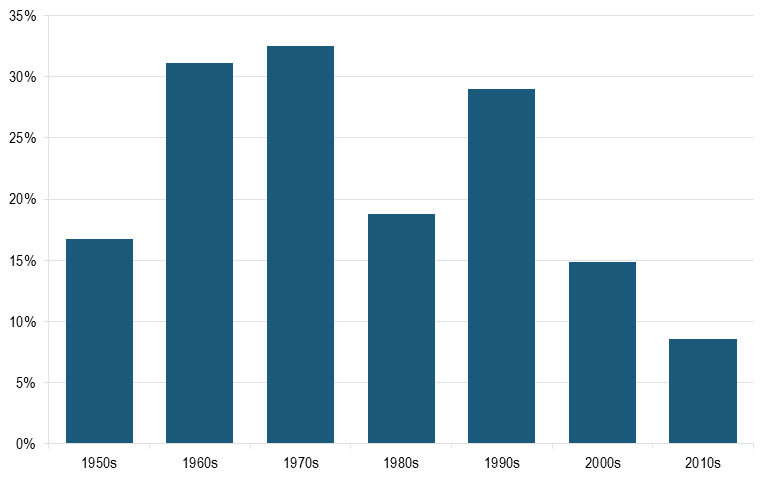

Using method two resulted in similar trends, but in a greater difference from method one in churn rates from 1950 to 2000. (See figure 10.) Over these 50 years, the average churn rates under the two methods differed by 11 percentage points. But after 2000, there was not much difference in calculated churn. (Method one estimates churn of 18 percent for 2000 to 2010, while method two estimates 15 percent; for 2010 to 2015, the rates are 10 percent and 9 percent, respectively.)

Figure 10: Rate of Occupational Change by Decade (2010 Categories, Method Two)[33]

Many of the high levels of churn in the earlier decades reflect the transformation of American agriculture. From the 1850s to the 1870s, millions of new workers started farming as the population expanded and America opened up the West. Between 1850 and 1860, controlling for the growth of the labor force, new farmers accounted for 25 percent of the jobs added. But that growth also masked an important shift in farming, some of it coming from the emancipation of slaves after 1865, some from new technology, and some from the opening up of the West. Controlling for total growth in jobs, farm owners and tenants declined 44 percent, while farm wage laborers grew by 159 percent. In the next decade, owners and tenants declined by 36 percent, while farm laborers grew by 186 percent. But by the 1880s, a long decline in farm labor had begun. Between 1880 and 1900, total farm employment grew by 14 percent, but because the overall labor force grew faster, farming’s share of total employment declined by 13 percent.[34]
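How farm employment can grow in absolute terms while its share falls follows from simple arithmetic: an occupation’s share changes by the ratio of its growth factor to the labor force’s growth factor. The sketch below (the function names are hypothetical) shows that relation and backs out the total employment growth implied by the 1880 to 1900 figures. The roughly 31 percent it returns is derived from the two reported numbers, not a figure stated in the text.

```python
def share_change(occupation_growth: float, total_growth: float) -> float:
    """Change in an occupation's share of total employment.

    All rates are decimals, e.g. 0.14 for 14 percent.
    """
    return (1 + occupation_growth) / (1 + total_growth) - 1


def implied_total_growth(occupation_growth: float, share_delta: float) -> float:
    """Invert share_change: total employment growth implied by the other two rates."""
    return (1 + occupation_growth) / (1 + share_delta) - 1


# 1880 to 1900: farm employment +14 percent, farm share of employment -13 percent.
total = implied_total_growth(0.14, -0.13)
print(f"implied total employment growth: {total:.1%}")  # → 31.0%
print(f"check: {share_change(0.14, total):.1%}")        # → -13.0%
```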


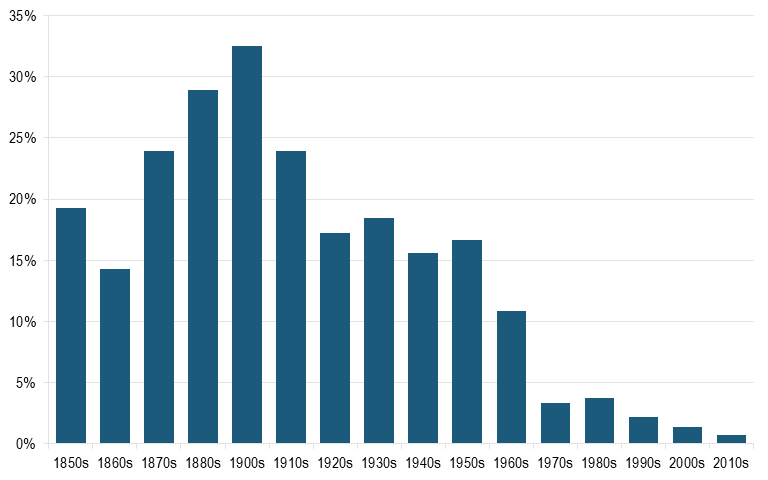

In the first decade of the 1900s, total agricultural employment started to fall in absolute terms as well, declining 1 percent (with agriculture’s share of total employment continuing to fall, by 10 percent). The corresponding figures were -3 percent and -5 percent for the 1910s, -1 percent and -4 percent for the 1920s, -3 percent and -5 percent for the 1930s, and -3 percent and -4 percent for the 1940s. Using the 1950 occupational classifications, absolute changes in farm employment (gains and losses), controlled for labor-force growth, peaked in the first decade of the 1900s, when change in farming occupations accounted for one-third of total churn. The move from small, owner-operated farms either to larger farms or to other occupations was the defining story of the U.S. labor market from the 1850s to the 1950s. After the postwar movement of southern farm labor to the North, as the planting and harvesting of cotton and other crops were mechanized, farming accounted for a declining share of overall job churn, falling to less than 3 percent since the 1970s. This is one important reason why overall U.S. churn rates have fallen.[35] (See figure 11.)

Figure 11: Farm Employment Churn as a Share of Total Occupational Churn, by Decade[36]

But there are other reasons why past rates were so high. In the 1950s and 1960s, many occupations grew extremely fast, even after controlling for growth in total employment. For example, in the 1960s, 885,000 janitors were added as offices expanded, 700,000 nursing aides as health-care consumption increased, and 600,000 secondary-school teachers as today’s baby boomers started to enter high school. At the same time, many occupations either declined outright or grew much more slowly than the overall labor force. Office-machine operators (except computers) fell by over 400,000; office clerks fell by 1.8 million; material-moving workers fell by 1.5 million; and other production workers fell by 1.9 million, as manufacturers increased automation.


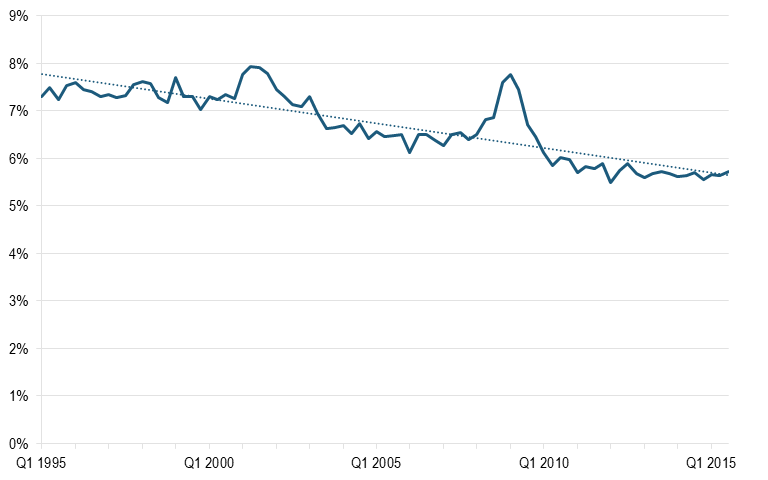

It is also interesting to note that these declines in occupational churn are matched by declines in the risk of U.S. workers losing their jobs, at least in the last two decades for which data are available.[37] It is true that more U.S. workers are concerned about losing their jobs than before: only 47 percent of workers said they felt their jobs were secure in 2014, compared with 59 percent in 1987.[38] But in reality, job security is on the rise. Job losses due to plant closures, contractions, and mass layoffs all declined steadily from 1995 to 2015. Total quarterly job losses as a share of total employment were around 7.5 percent for much of the late 1990s, but gradually declined to 5.7 percent in 2015.[39] (See figure 12.)
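Endnote 39 describes how the job-loss ratio behind figure 12 is built: quarterly job losses divided by total employment averaged over the three months of the same quarter. A minimal sketch of that calculation follows; the function name `quarterly_loss_rate` and the input numbers are hypothetical, not actual BLS series values.

```python
from __future__ import annotations

from statistics import mean


def quarterly_loss_rate(job_losses: float, monthly_employment: list[float]) -> float:
    """Quarterly job losses as a share of average employment in that quarter.

    `monthly_employment` holds the quarter's three monthly employment levels,
    in the same units as `job_losses` (e.g., thousands of jobs).
    """
    if len(monthly_employment) != 3:
        raise ValueError("expected three monthly employment figures")
    return job_losses / mean(monthly_employment)


# Hypothetical quarter (thousands of jobs), not actual BLS figures.
rate = quarterly_loss_rate(7_000, [119_000, 120_000, 121_000])
print(f"{rate:.2%}")  # → 5.83%
```

Averaging the three months, rather than using a single month, keeps the denominator aligned with the full quarter over which the losses are counted.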

Figure 12: Quarterly Job Losses as a Share of Total Employed[40]

This decline occurred gradually across the two-decade period, though job losses rose temporarily during the recessions of 2001 and 2008. Meanwhile, quarterly job gains mirrored job losses, showing that low job churn does not necessarily imply a healthier economy or stronger employment. The decline in churn is pervasive across industries, not just a function of declining employment in high-churn industries such as construction and leisure and hospitality. Churn among manufacturing firms also decreased, from 4.8 percent in 1995 to 3.2 percent in 2015.[41]

Job Losses From Occupational Churn

Job loss is a natural part of occupational churn. Often, technology destroys jobs within an industry while creating higher-paying jobs in the same industry. Automation and technology serve as tools that allow a worker to accomplish more, or substitute for some of the more repetitive tasks. However, in some cases, an occupation will see absolute decline.

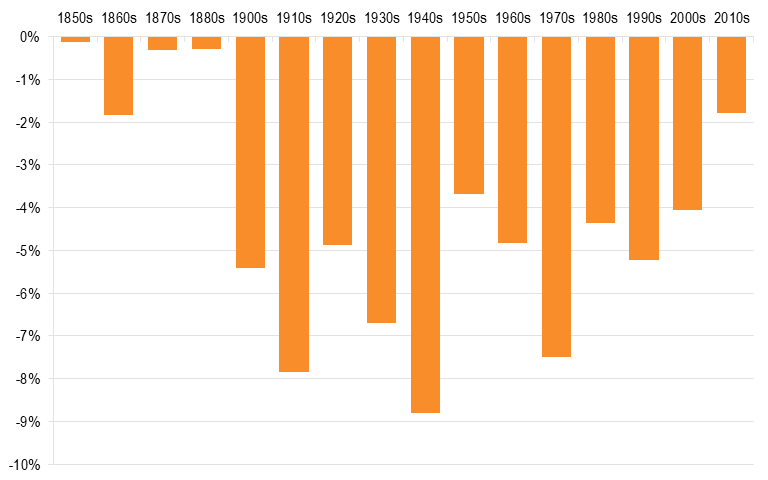

From 1850 to 1880, these losses were very low, chiefly because of the rapid growth of the U.S. population during this period, which increased from 23 million in 1850 to 76 million by 1900. As a result, few occupations lost jobs from one decade to the next, even those that saw high levels of mechanization or productivity gains.[42] In the 1800s, the labor force grew so fast (by more than 40 percent every decade) that even in occupations of declining importance, overall labor-force growth offset the decline. Expanding overall demand made up for productivity increases. (See figure 13.)

When looking at the jobs in occupations that experienced absolute losses over a decade, the decade with the highest rate of loss was the 1940s, when almost 9 percent of jobs were in occupations that experienced absolute losses. For example, in the 1940s, telegraph operators lost 18,376 jobs, while private household workers declined by 452,000 (in part as the war effort required workers to operate factories), and farm laborers declined by 557,071 as agricultural mechanization increased.


In the 20th century, these losses averaged 5.9 percent of jobs per decade. Since 2000, losses have been lower. At 4.1 percent, 2000 to 2010 is the second-slowest decade for job loss, and in the five years from 2010 to 2015, occupational decline represents just 1.8 percent of jobs. Both rates are quite low, even though overall job growth was less than a third of its rate in the 1950s and 1960s, as fewer people were entering the labor force.

Figure 13: Absolute Losses in Occupations, 1850–2015 (1950 Categories)[43]

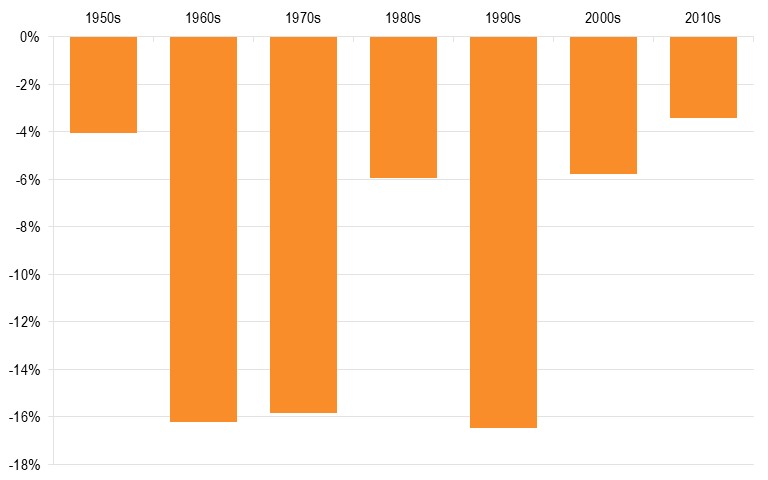

Looking at 2010 occupational definitions tells the same story. These categories are more detailed, so they capture churn within industry subsectors more precisely and register a greater degree of churn. Job losses were highest in the 1960s, 1970s, and 1990s, but have greatly diminished since 2000. (See figure 14.)

Figure 14: Absolute Losses in Occupations, 1950–2015 (2010 Categories)[44]

Impact of Technology

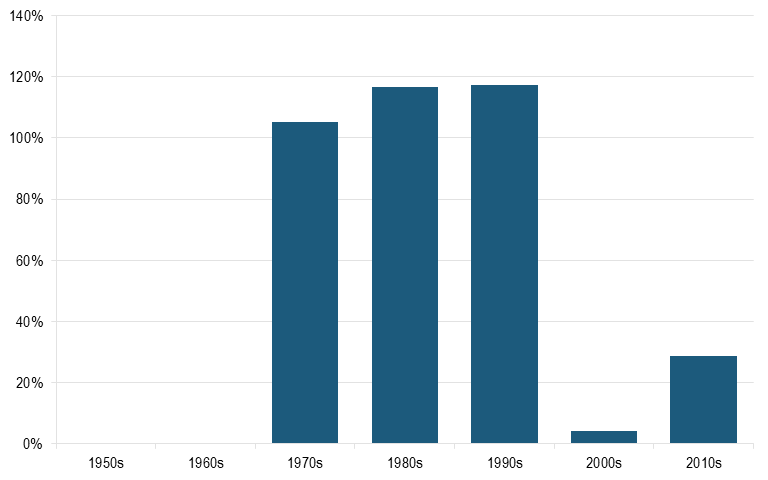

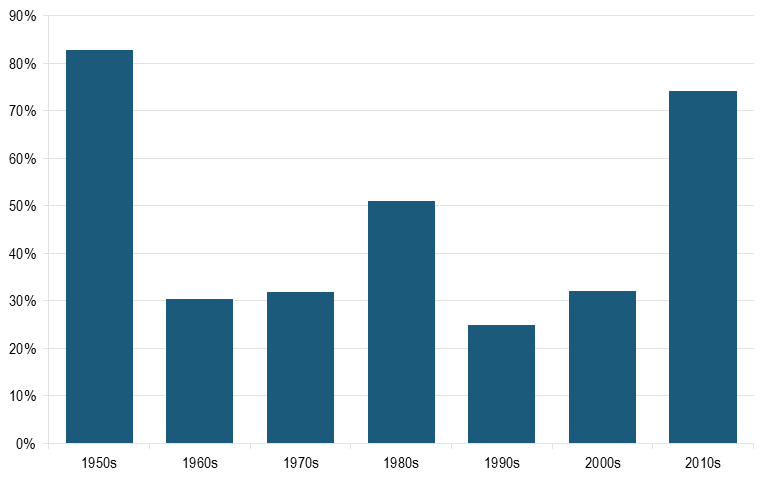

One of the prevailing narratives regarding the current U.S. labor market is that technology is destroying more jobs than it is creating. But that has been true for the last 160 years. Using the 1950 occupational classifications, there was no decade from 1850 to 2010 in which technology created more jobs than it eliminated. The same is true using the 2010 occupational categories, where the 1950s had the highest ratio of jobs created by technology to jobs eliminated by it (83 percent). The first half of the 2010s had the second-highest ratio. (See figure 15.)

As noted above, the classification of technology eliminating jobs was modified for the 1980s and 2000s, two decades with growing manufacturing trade deficits and sizable declines in manufacturing jobs. In both decades, some manufacturing jobs were lost not to technology (e.g., automation) but to trade and competitiveness. Making this adjustment raises the ratio for the 1980s from 47 percent to 51 percent, and for the 2000s from 32 percent to 33 percent.

Figure 15: Ratio of Technology Creating New Jobs to Technology Eliminating Jobs[45]

While these relatively low ratios may surprise many, particularly those who argue that new industries will create the jobs to make up for those eliminated by technology, the logic is quite clear. No rational organization spends money to increase productivity unless the savings are greater than the costs. If making a machine required as many jobs as the machine eliminated in the companies using it, then costs could not have fallen.
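The cost logic can be written out under a deliberately simple assumption that is not in the original: the machine’s entire annualized cost C consists of wages w paid to M workers who build and maintain it, at the same wage as the N workers it displaces. A firm adopts the machine only if it saves money, which forces M below N:

```latex
\begin{aligned}
\text{adopt} &\iff C < N w, \\
C = M w &\implies M w < N w \implies M < N, \\
S &= N w - C = (N - M)\, w > 0 .
\end{aligned}
```

The positive savings S are the money that, in the report’s argument, is recycled into the economy through lower prices or higher wages rather than into machine-building jobs.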

So most new jobs will not be created in the firms that make the new machinery. Rather, they will be created across the economy by the new demand that higher productivity enables. To see how, we need to look at second-order effects, something techno-pessimists overlook. If jobs in one firm or industry are reduced or eliminated through higher productivity, then, by definition, production costs decline. These savings are not put under the proverbial mattress; they are recycled into the economy, in most cases through lower prices or higher wages. This money is then spent, creating jobs in whatever industries supply the goods and services that people spend their savings or increased earnings on. For example, in the 1960s, a decade in which productivity and median wages increased more than 30 percent, the fastest-growing occupations were janitors and building cleaners (as commercial real estate expanded significantly); laborers and freight, stock, and material movers (as more workers were needed to transport the rapidly growing volume of goods American consumers were buying); maids and house cleaners (as more Americans could afford household help and more women entered the labor force); and secretaries and administrative assistants (as the office economy grew). None of these occupations was intrinsically created by new technology. But technological innovation was central to the acceleration of the economy and the creation of these jobs. Together, these four occupations accounted for 56 percent of all net job creation in the 1960s.[46]

One much-discussed variable is the extent to which computers may have affected job churn. Computers are pervasive across industries, and because they were adopted relatively uniformly across sectors in a short time, they may have produced profound changes that the macro data do not reveal. Examining this question, Boston University economist James Bessen finds that occupations that use computers grew 1.7 percent faster per year than occupations that did not. He also finds that computer use can lead some occupations to substitute for others within an industry, but that this substitution is a relatively small effect, at less than half a percent annually.[47] In short, computers have not been a major driver of employment loss, despite being a transformative technology. This may help explain why churn rates in the current economy are so modest.

But Will the Future Be Different?

Even if the most ardent fourth industrial revolutionaries were to acknowledge that the labor-market conditions of the last two decades have been the opposite of turbulent (at least by historical U.S. standards), most would argue that this is just the prelude: the calm before the coming technology storm.

A quick side note: It’s not the fourth industrial revolution; it’s the sixth. Advocates of the notion of the fourth industrial revolution account for history as follows. The first revolution was in the late 1700s and early 1800s. Next came the industrialization period of the 1890s to 1920s. Then a few years ago came the third revolution: the information technology revolution. And now the fourth supposedly has arrived.

But among those who study technology cycles, this periodization makes little sense. Proponents of so-called Schumpeterian technology long-wave theory postulate five waves to date: 1) the first industrial revolution of the steam engine in the 1780s and 1790s, 2) the second revolution of iron in the 1840s and 1850s, 3) the third revolution of the 1890s and 1900s based on steel and electricity, 4) the fourth revolution in the 1950s and 1960s based on electromechanical and chemical technologies, and 5) the current fifth revolution based on information and communications technology. According to this long-wave periodization, a sixth wave will emerge, but not before an intervening period of relative stagnation of perhaps as long as 20 to 25 years, a period we appear to be in now. So, yes, a new technology wave is coming, but likely not for a while. Case in point: Manufacturing labor productivity has been stagnant since the end of the Great Recession, growing just 1.5 percent over the four years from 2012 to 2016.[48] One reason is that robots and AI are still relatively rudimentary.[49]

Instead of fretting about technology eliminating jobs, we should be worrying about how we are going to raise productivity growth, which has been at anemic levels over the last decade.

But none of this deters the life-revolution enthusiasts. Emblematic of the new received wisdom, Larry Elliott, economics editor of The Guardian, writes:

Smart machines will soon be able to replace all sorts of workers, from accountants to delivery drivers and from estate agents to people handling routine motor insurance claims. On one estimate, 47% of US jobs are at risk from automation. This is Joseph Schumpeter’s “gales of creative destruction” with a vengeance.[50]

Likewise, a Bank of England blog warns that:

Economists looking at previous industrial revolutions observe that none of these risks have transpired. However, this possibly under-estimates the very different nature of the technological advances currently in progress, in terms of their much broader industrial and occupational applications and their speed of diffusion. It would be a mistake, therefore, to dismiss the risks associated with these new technologies too lightly.[51]

But as ITIF has pointed out, the risks are much more likely to run in the other direction.[52] Rather than too much innovation or productivity, the bigger risk is that economies will not be able to raise productivity fast enough to adequately raise per-capita incomes, especially in an era when nations face growing elderly populations.

Moreover, most of these “alarming” warnings about technology and jobs are not at all terrifying when examined closely. Take Klaus Schwab’s widely repeated warning that robotics and artificial intelligence will destroy 5 million jobs by 2020.[53] This sounds like a lot of destruction and not much creation. In fact, in percentage terms, 5 million automated jobs adds up to the elimination of just 0.25 percent of jobs annually for the next five years: barely a rounding error.

Even more ominous-sounding is the study by Oxford researchers Osborne and Frey, which warns that technology will destroy 47 percent of U.S. jobs in the next 20 years. But the Oxford study is just plain wrong. The authors, who didn’t submit their work for peer review, neglected to examine all 702 U.S. occupational categories to manually assess how likely it is that technology will substitute for a human worker in each one. Instead, they took a shortcut: They relied on task measures from the Department of Labor, which assessed occupations based on factors such as how much manual dexterity and social perceptiveness an occupation requires. And if an occupation’s automation risk score was above 0.7, ipso facto, the job was destined for the trash heap of techno-history. The only problem is that their methodology produces results that make little sense, as when they predict that technologies such as robots will eliminate the jobs of fashion models, manicurists, carpet installers, and barbers. Is Versace really going to dress up cute robots in its latest dresses and parade them down the runway? Are we going to live in a Jetsons world where you sit down in a magic robot chair and get your hair cut automatically? Is any school district actually going to let a group of sixth graders ride an autonomous school bus with no adult supervision? When ITIF analyzed these 702 occupations manually, using a very generous assumption about how technology could eliminate jobs, we estimated that at most about 10 percent of jobs were at risk of automation.
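The all-or-nothing cutoff criticized above can be sketched in a few lines. This illustrates only the final thresholding step as the text describes it, not the full Frey and Osborne model, which estimated the probabilities themselves with a statistical classifier. The function name `share_high_risk`, the occupation labels, the probabilities, and the employment counts are all hypothetical.

```python
from __future__ import annotations

HIGH_RISK_CUTOFF = 0.7  # threshold described in the text


def share_high_risk(occupations: dict[str, tuple[float, int]],
                    cutoff: float = HIGH_RISK_CUTOFF) -> float:
    """Share of employment in occupations whose automation score exceeds `cutoff`.

    `occupations` maps a name to (automation_score, employment). Every worker
    in an occupation above the cutoff counts as "at risk", and every worker
    below it counts as safe: the all-or-nothing step the text objects to.
    """
    total = sum(emp for _, emp in occupations.values())
    at_risk = sum(emp for score, emp in occupations.values() if score > cutoff)
    return at_risk / total


# Hypothetical inputs for illustration only.
example = {
    "occupation A": (0.92, 400),
    "occupation B": (0.71, 300),
    "occupation C": (0.69, 300),
}
print(share_high_risk(example))  # → 0.7
```

Occupations B and C have nearly identical scores, yet the cutoff treats every job in B as doomed and every job in C as safe, which is why headline “percent at risk” figures from this kind of rule are sensitive to where the line is drawn.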

Similarly, a recent PricewaterhouseCoopers report predicts that 38 percent of U.S. jobs could potentially be eliminated by 2030.[54] But this relies in part on the flawed Oxford methodology, and its prediction assumes both that the price of “robots” will fall significantly and that their functionality will improve dramatically. Both are uncertain bets.


Policy Implications

These findings have several implications. The first and most important is that everyone should take a deep breath and calm down: Labor-market disruption is not abnormally high; it is occurring at its lowest rate since the Civil War. Claims that we are all one fast-growing tech “unicorn” away from redundancy only raise fears and lead policymakers, at minimum, to ignore policies that would spur automation and technological innovation, and at worst to support policies that would limit them.

This is important because, as Nobel Prize-winning economist Edmund Phelps writes, steps such as limiting regulatory barriers to innovation and ensuring a reasonable business tax system are important to spur innovation, “but without a supportive culture, these steps will not be sufficient: they will not even be taken. The genius of [America’s] high dynamism was a restless spirit of conceiving, experimenting and exploring throughout the economy from the bottom up—leading with insight and luck, to innovation.”[55] Constant fearmongering about the grim reaper of technology coming for our jobs is corrosive to “high dynamism.”


To be sure, this does not mean that we should be smug and just sit back and hope for innovation to occur. Indeed, as ITIF has argued previously, policymakers should do everything possible to speed up the rate of creative destruction. Otherwise, it will be impossible to raise living standards faster than the current snail’s pace. Among other things, this means not giving in to incumbent interests (of companies or workers) who want to resist disruption and who paint their efforts as progressive and on the side of working men and women. It is telling that computer scientist Alan Kay, who famously said that “the best way to predict the future is to invent it,” now says, “the best way to predict the future is to prevent it.”[56]

But regardless of the rate of churn or whether policy seeks to retard or speed it up, policymakers can and should take action to ease labor-market transitions for workers who do lose their jobs, whether due to natural labor-market churn or business-cycle downturns. A forthcoming ITIF report will lay out a detailed and actionable policy agenda to help workers better adjust to labor-market churn—something that is sorely needed in the United States, in particular.

But one idea that should not be on the agenda is universal basic income. Under this widely touted scheme, the state would somehow take money from somewhere and proceed to write monthly checks to all adults, whether they are working or not, poor or rich. This, allegedly, would establish a stable floor upon which everyone would build their own brighter future. Never mind that this would simply lead to the very thing its advocates warn us technology will bring: large-scale unemployment, as the government incentivizes workers to sit out of the workforce rather than help pave pathways for those displaced by technology or trade to find success in new industries.

To be sure, the alternative should not be a call for a return to the Hobbesian world of the 1800s, when, if you lost your job, you were completely on your own (hopefully with the help of your extended family). We can and should do a better job of providing temporary income support for workers who lose their jobs through no fault of their own. We should also make it easier for workers to transition into new occupations. To that end, policymakers should consider establishing stronger requirements for U.S. state governments to let workers collecting unemployment insurance enroll in certified training without losing their benefits. Policymakers also should create a system of lifelong-learning accounts akin to 401(k) accounts for retirement. And they should reform the country’s higher-education system to separate responsibility for education from the franchise of credentialing.[57]


This is important because if we are going to have any hope of regaining America’s historical willingness to embrace change and innovation, then government needs to reduce risk, at least somewhat. As New York Times columnist David Brooks writes on the issue of health insurance:

The core of the new era is this: If you want to preserve the market, you have to have a strong state that enables people to thrive in it. If you are pro-market, you have to be pro-state. You can come up with innovative ways to deliver state services, like affordable health care, but you can’t just leave people on their own. The social fabric, the safety net and the human capital sources just aren’t strong enough.[58]

To paraphrase Brooks, if we want to preserve Americans’ willingness to embrace, or at least accept, creative destruction, then we need a state that effectively enables people to thrive. Moreover, if we are going to realize the American dream of continuing progress and rising living standards, then the last thing we want to do is constantly stoke people’s unfounded fears that their jobs are on the techno-chopping block. Certainly, the past 165 years of American history suggest these fears are misplaced. But that does not mean the fears themselves are not real, or that individuals should not be helped to make occupational transitions.

Acknowledgments

The authors wish to thank the following individuals for providing input to this report: Randolph Court, Adams Nager, and Kaya Singleton. Any errors or omissions are the authors’ alone.

About the Authors

Robert D. Atkinson is the founder and president of ITIF. Atkinson’s books include Innovation Economics: The Race for Global Advantage (Yale, 2012), Supply-Side Follies: Why Conservative Economics Fails, Liberal Economics Falters, and Innovation Economics Is the Answer (Rowman & Littlefield, 2006), and The Past and Future of America’s Economy: Long Waves of Innovation That Power Cycles of Growth (Edward Elgar, 2005). Atkinson holds a Ph.D. in city and regional planning from the University of North Carolina, Chapel Hill, and a master’s degree in urban and regional planning from the University of Oregon.

John Wu is an economic research assistant at ITIF. His research interests include green technologies, labor economics, and time use. He graduated from the College of Wooster with a bachelor of arts in economics and sociology, with a minor in environmental studies.

About ITIF

The Information Technology and Innovation Foundation (ITIF) is an independent 501(c)(3) nonprofit, nonpartisan research and educational institute that has been recognized repeatedly as the world’s leading think tank for science and technology policy. Its mission is to formulate, evaluate, and promote policy solutions that accelerate innovation and boost productivity to spur growth, opportunity, and progress. For more information, visit itif.org/about.

Endnotes

[1]. James O’Toole, “Here Come the Robot Lawyers,” CNN Tech, March 28, 2014, http://money.cnn.com/2014/03/28/technology/innovation/robot-lawyers/.

[2]. Rob Lever, “Tech World Debate on Robots and Jobs Heats Up,” Phys.org, March 26, 2017, https://phys.org/news/2017-03-tech-world-debate-robots-jobs.html.

[3]. Robert D. Atkinson, “In Defense of Robots,” National Review, April 17, 2017, https://www.nationalreview.com/magazine/2017-04-17-0100/robots-taking-jobs-technology-workers; Ben Miller and Robert D. Atkinson, “Are Robots Taking Our Jobs, or Making Them?” (Information Technology and Innovation Foundation, September 2013), https://itif.org/publications/2013/09/09/are-robots-taking-our-jobs-or-making-them.

[4]. Bureau of Labor Statistics, Labor Productivity and Costs (productivity change in the nonfarm business sector, 1947–2016; accessed March 22, 2017), https://www.bls.gov/lpc/prodybar.htm.

[5]. Daniel Burrus, “A Lesson from Google: Why Innovation is the Key to Your Company’s Future,” Burrus Research, April 2, 2012, https://www.burrus.com/2012/04/a-lesson-from-google-why-innovation-is-the-key-to-your-companys-future/.

[6]. “Speaking,” on Joseph Jaffe’s website, accessed April 28, 2017, http://www.jaffejuice.com/speaking.html.

[7]. John Kotter, “Can You Handle an Exponential Rate of Change?” Forbes, July 19, 2011, https://www.forbes.com/sites/johnkotter/2011/07/19/can-you-handle-an-exponential-rate-of-change/.

[8]. Peter H. Diamandis and Steven Kotler, Abundance: The Future is Better Than You Think (New York: Free Press, 2012).

[9]. Erik Brynjolfsson and Andrew McAfee, The Second Machine Age (New York: W.W. Norton, 2014).

[10]. Klaus Schwab, “The Fourth Industrial Revolution: What It Means, How to Respond,” World Economic Forum, January 14, 2016, https://www.weforum.org/agenda/2016/01/the-fourth-industrial-revolution-what-it-means-and-how-to-respond/.

[11]. Robert D. Atkinson, The Past and Future of America’s Economy: Long Waves of Innovation that Power Cycles of Growth (Cheltenham, UK: Edward Elgar, 2004); Carlota Perez, Technological Revolutions and Financial Capital: The Dynamics of Bubbles and Golden Ages (Cheltenham, UK: Edward Elgar, 2003).

[12]. Unpublished PowerPoint presentation, Professor Robert Friedel, University of Maryland.

[13]. However, even as late as 1939, at least half a million buggy whips were sold, despite the increased sales of “horseless carriages.” See “Buggy Whip Sale Totals Millions,” Kentucky New Era, July 8, 1939, http://news.google.com/newspapers?nid=0NVGjzr574C&dat=19390708&printsec=frontpage&hl=en.

[14]. U.S. Department of Labor (DOL), “Dictionary of Occupational Titles, Part 1: Definitions of Titles” (Washington, DC: DOL, 1939).

[15]. Ibid.

[16]. There will still be musicians, producers, and others involved in creating the music (provided that music piracy is limited), but jobs in reproducing it will likely shrink because this will be done by computer and self-service functions.

[17]. ITIF analysis of IPUMS occupation data: Steven Ruggles, Katie Genadek, Ronald Goeken, Josiah Grover, and Matthew Sobek. Integrated Public Use Microdata Series: Version 6.0 (IPUMS) “U.S. Census Data for Social, Economic, and Health Research” (accessed March 14, 2017), https://usa.ipums.org/usa.

[18]. Ibid.

[19]. Zachary Crockett, “The Rise and Fall of Professional Bowling,” Priceonomics, March 21, 2014, https://priceonomics.com/the-rise-and-fall-of-professional-bowling/.

[20]. The U.S. House of Representatives was one of the last organizations to employ elevator operators for Members of Congress, eliminating this luxury only after the Republicans gained control of the House in 1995.

[21]. ITIF analysis of IPUMS occupation data.

[22]. Ibid.

[23]. Ibid.

[24]. Ibid.

[25]. Ibid.

[26]. Alex Trembath et al., “US Government Role in Shale Gas Fracking History: An Overview,” The Breakthrough Institute, March 2, 2012, http://thebreakthrough.org/archive/shale_gas_fracking_history_and.

[27]. ITIF analysis of IPUMS occupation data.

[28]. Integrated Public Use Microdata Series: Version 6.0 (IPUMS), “Integrated Occupation and Industry Codes and Occupational Standing Variables in the IPUMS” (accessed March 13, 2017), https://usa.ipums.org/usa/chapter4/chapter4.shtml.

[29]. Robert D. Atkinson et al., Worse Than the Great Depression: What Experts Are Missing About American Manufacturing Decline (Information Technology and Innovation Foundation, March 2012), http://www2.itif.org/2012-american-manufacturing-decline.pdf.

[30]. ITIF analysis of IPUMS occupation data.

[31]. Ibid.

[32]. Ibid.

[33]. Ibid.

[34]. We consider the following occupations as farm employment: farm foremen, farm laborers, farm managers, farm service laborers, and farmers.

[35]. ITIF analysis of IPUMS occupation data.

[36]. Ibid.

[37]. J. John Wu and Robert D. Atkinson, “The U.S. Labor Market Is Far More Stable Than People Think” (Information Technology and Innovation Foundation, June 2016), http://www2.itif.org/2016-us-labor-market-stable.pdf.

[38]. Susan Adams, “Most Americans Are Unhappy at Work,” Forbes, June 20, 2014, http://www.forbes.com/sites/susanadams/2014/06/20/most-americans-are-unhappy-at-work/#5c37252d5862.

[39]. Bureau of Labor Statistics, Business Employment Dynamics (gross job gains, series id BDS0000000000000000110001LQ5; accessed June 14, 2016), http://data.bls.gov/cgi-bin/srgate; Bureau of Labor Statistics, Current Employment Statistics (all employees, thousands, series id CES0500000001; accessed March 11, 2016), http://data.bls.gov/cgi-bin/srgate. In the ratio of quarterly job losses to total employment, the job-loss data are quarterly and total employment is a three-month average for the respective quarter.

[40]. Ibid.

[41]. Bureau of Labor Statistics, Business Employment Dynamics (gross job losses by sector; accessed June 14, 2016), http://data.bls.gov/cgi-bin/srgate; Bureau of Labor Statistics, Current Employment Statistics (all employees by sector, thousands; accessed March 11, 2016), http://data.bls.gov/cgi-bin/srgate. In the ratio of quarterly job losses to total employment, the job-loss data are quarterly and total employment is a three-month average for the respective quarter.

[42]. “U.S. Population, 1790–2000: Always Growing,” United States History, http://www.u-s-history.com/pages/h980.html.

[43]. ITIF analysis of IPUMS occupation data.

[44]. Ibid.

[45]. Ibid.

[46]. Ibid.

[47]. James Bessen, “How Computer Automation Affects Occupations: Technology, Jobs, and Skills” (working paper no. 15–49, Boston University School of Law, Boston, MA, October 2016), http://siepr.stanford.edu/system/files/SSRN-id2690435.pdf.

[48]. Adams Nager, “Trade vs. Productivity: What Caused U.S. Manufacturing’s Decline and How to Revive It” (Information Technology and Innovation Foundation, February 2017), http://www2.itif.org/2017-trade-vs-productivity.pdf.

[49]. Atkinson, The Past and Future of America’s Economy; Perez, Technological Revolutions and Financial Capital.

[50]. Larry Elliott, “Fourth Industrial Revolution Brings Promise and Peril for Humanity,” The Guardian, January 24, 2016, https://www.theguardian.com/business/economics-blog/2016/jan/24/4th-industrial-revolution-brings-promise-and-peril-for-humanity-technology-davos.

[51]. BofE Staff Blog, “Millions of Jobs at Risk in Fourth Industrial Revolution,” Finextra, March 1, 2017, https://www.finextra.com/newsarticle/30209/millions-of-jobs-at-risk-in-fourth-industrial-revolution---bofe-staff-blog.

[52]. Robert D. Atkinson, “Think Like an Enterprise: Why Nations Need Comprehensive Productivity Strategies” (Information Technology and Innovation Foundation, May 2016), https://itif.org/events/2016/05/05/think-enterprise-why-nations-need-comprehensive-productivity-strategies.

[53]. Jill Ward, “Rise of the Robots Will Eliminate More Than 5 Million Jobs,” Bloomberg Technology, January 18, 2016, https://www.bloomberg.com/news/articles/2016-01-18/rise-of-the-robots-will-eliminate-more-than-5-million-jobs.

[54]. PricewaterhouseCoopers, “Consumer Spending Prospects and the Impact of Automation on Jobs,” UK Economic Outlook, March 2017, http://www.pwc.co.uk/services/economics-policy/insights/uk-economic-outlook.html.

[55]. Edmund Phelps, Mass Flourishing: How Grassroots Innovation Created Jobs, Challenge, and Change (Princeton, NJ: Princeton University Press, 2013), 324.

[56]. Chunka Mui, “How Traditional Automakers Can Still Win Against Google’s Driverless Car: Part 5,” Forbes, March 1, 2013, http://www.forbes.com/sites/chunkamui/2013/03/01/googles-trillion-dollar-driverless-car-part-5-how-automakers-can-still-win/.

[57]. Joseph V. Kennedy, Daniel Castro, and Robert D. Atkinson, “Why It’s Time to Disrupt Higher Education by Separating Learning From Credentialing” (Information Technology and Innovation Foundation, August 2016), http://www2.itif.org/2016-disrupting-higher-education.pdf.

[58]. David Brooks, “The Republican Health Care Crackup,” The New York Times, March 10, 2017, https://www.nytimes.com/2017/03/10/opinion/the-republican-health-care-crackup.html.
